Signia: Pioneers in Machine Learning Hearing Aids

Matthias Froehlich, Ph.D. & Thomas A. Powers, Ph.D.

Consider a brief chronology of machine learning in the world of “games.” In 1952, Arthur Samuel wrote the first computer learning program, which played the game of checkers.

Samuel’s work revealed that an IBM computer improved at the game the more it played, studying which moves made up winning strategies and incorporating those moves into its program. Forty-five years later, the game was chess, and IBM’s Deep Blue beat Garry Kasparov, the world champion at the time. And, in 2011, IBM’s Watson beat its human competitors on the popular television game show Jeopardy!

Machine learning, of course, is much more than playing games. It is used in industry, the military, healthcare, social media, and yes, even hearing aids. There is good reason why machine learning is a popular buzzword: it is rapidly changing how we interact with computers, including the miniature ones housed in Signia hearing instruments. Basically, machine learning means that a learning algorithm is given data for training and then asked to use those data to make decisions. As more data are provided, they are added to the training set, which in turn improves accuracy. In essence, the machine is learning. The task could be recognizing a photo or a person’s voice, learning how to talk with a human voice, or learning to drive a car.

It’s debatable whether a computer will ever “think” in the same way that the human brain does, but machines’ abilities to see, understand, and interact are changing our lives. It is no surprise, then, that in the past few years we have seen machine learning mentioned in hearing aid trade journal articles. But are machine learning hearing aids really something new?

The first commercially available machine learning hearing aid was introduced by Siemens/Signia in 2006: the Model Centra.1 These hearing instruments had trainable gain. That is, the hearing aid would “learn” the preferred gain from the user’s volume control (VC) or remote control adjustments. This training did not alter the maximum or minimum gain of the instruments but rather the start-up gain applied when the hearing aids were first turned on. In these instruments, all VC changes were averaged, with greatest emphasis on the most recent changes.

The physical time constant for learning was defined as the time required by exponential averaging to reach 65% of steady state (optimal gain). For most users, this target was typically reached after one or two weeks of hearing aid use, with most learning occurring in the first week. Independent gain learning was possible for different programs/memories, so that preferences for listening in noise, listening to music, and so on could also be trained automatically.
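The averaging described above can be illustrated with a short sketch. The article does not give the actual update rule, so this assumes a standard exponential moving average, with the learning constant expressed as the number of updates needed to cover 65% of the distance to the steady-state gain. The function names, the one-update-per-preference-sample scheme, and the example values are all hypothetical.

```python
def smoothing_factor(updates_to_target: float, fraction: float = 0.65) -> float:
    """Return the per-update weight for an exponential average.

    After `updates_to_target` identical updates, the average has covered
    `fraction` of the distance to the steady-state value (65% in the text).
    """
    # After n updates the remaining distance is (1 - alpha) ** n,
    # so solve (1 - alpha) ** n = 1 - fraction for alpha.
    return 1.0 - (1.0 - fraction) ** (1.0 / updates_to_target)


def train_startup_gain(start_gain_db, preferred_gains_db, alpha):
    """Exponentially average preferred gains; recent values weigh most."""
    gain = start_gain_db
    history = []
    for preferred in preferred_gains_db:
        gain += alpha * (preferred - gain)
        history.append(gain)
    return history


# Hypothetical user who consistently prefers 6 dB less than the fitted gain.
alpha = smoothing_factor(updates_to_target=10)
history = train_startup_gain(0.0, [-6.0] * 30, alpha)
print(round(history[9], 2))   # start-up gain offset after 10 updates
print(round(history[29], 2))  # start-up gain offset after 30 updates
```

In this sketch the start-up gain offset covers 65% of the 6 dB preference (about -3.9 dB) after the first 10 updates and then converges more slowly, which mirrors the observation that most learning happens early.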

Research findings with this first machine learning hearing aid were very favorable. While all individuals typically started with a fitting to prescriptive targets, the learning of the Centra allowed expected deviations from average, as well as personal preferences, to be accounted for. Moreover, this machine learning took place in the user’s daily listening conditions, not in a clinic or office, providing the much-needed real-world effect. This allowed for different learning for different listening environments. Research with the Centra also revealed that the gain starting point for the training can influence the final trained gain, which reinforced the use of a validated prescriptive approach as a starting point.2

As mentioned, with the revolutionary Centra product, it was possible to train gain and to conduct this training for different programs. This was referred to as DataLearning. One limitation, however, was that the training applied only to overall gain. As reviewed by Mueller et al,2 it was possible that, for a given patient, gain for average inputs was initially “just right,” but if loud sounds were too loud, the patient might train down overall gain, and as a result, average inputs would then be too soft.

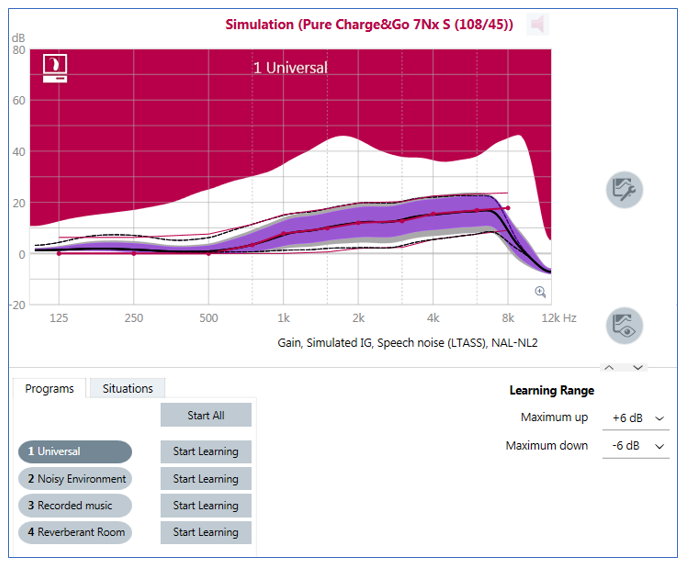

This potential limitation was soon corrected: in 2008, the Siemens/Signia Pure hearing aid was introduced with advanced machine learning dubbed SoundLearning.3 SoundLearning optimized level-dependent gain in four frequency bands independently. In other words, it was compression learning: independent, frequency-specific learning for different input levels. Through this learning, the hearing aid would map an input-dependent output function for a given individual based on that listener’s preferred loudness for different input levels; it would also interpolate missing data. For example, if a hearing aid user preferred gain of 20 dB (trained via VC adjustment) for music of 60 dB SPL, and gain of 10 dB (trained via VC adjustment) for music of 70 dB SPL, the learning would automatically apply 15 dB gain for music at 65 dB SPL, without any adjustment by the user.
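The interpolation in the example above can be written out as a small sketch. It assumes simple linear interpolation between trained points in a single band, which reproduces the 15 dB figure in the text; the interpolation method actually used in the product is not described in the article. The function name and the choice to hold the nearest trained gain outside the trained range are hypothetical.

```python
def interpolate_gain(trained_points, input_level_db):
    """Linearly interpolate preferred gain (dB) at an input level (dB SPL).

    `trained_points` is a list of (input_level_db, gain_db) pairs learned
    from the user's adjustments. Outside the trained range, the nearest
    trained gain is held constant (an assumption of this sketch).
    """
    points = sorted(trained_points)
    if input_level_db <= points[0][0]:
        return float(points[0][1])
    if input_level_db >= points[-1][0]:
        return float(points[-1][1])
    # Find the two trained levels that bracket the requested input level.
    for (lo_level, lo_gain), (hi_level, hi_gain) in zip(points, points[1:]):
        if lo_level <= input_level_db <= hi_level:
            fraction = (input_level_db - lo_level) / (hi_level - lo_level)
            return lo_gain + fraction * (hi_gain - lo_gain)


# Values from the music example in the text.
music_points = [(60, 20), (70, 10)]
print(interpolate_gain(music_points, 65))  # 15.0
```

Because gain falls as input level rises in this example, the interpolated function behaves like compression, which is why level-dependent training is described as compression learning.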

Because compression learning occurred independently in multiple bands, it was also able to learn level-specific frequency shapes. Hence, SoundLearning simultaneously optimized loudness and frequency shape. Another advancement in this generation of SoundLearning was that in addition to volume control adjustments, the frequency shape could be trained by SoundBalance, which the user could adjust using the rocker switch on the hearing aid or a remote control.

Research with this new machine learning illustrated the advantage of compression training. For example, Palmer4 found that on average, new hearing aid users, when fitted according to the NAL prescriptive method, tended to train average inputs to be very close to the initial fitting, but trained soft sounds down a few dB. Mueller and Hornsby5 fitted these instruments to experienced users who had been using hearing aids fitted considerably below NAL targets (e.g., ~10 dB below for soft speech and ~5 dB below for average speech). Following a few weeks of learning, on average, the individuals trained the hearing aids to within 2-3 dB of prescriptive gain for soft inputs, and within 1 dB of prescriptive gain for average inputs, again illustrating the benefits of input-level-specific learning.

The next advancement in Siemens/Signia machine learning was related to different listening situations. As mentioned, training had always been possible for different programs, but this meant that the user had to switch to a specific program to obtain the benefit. In 2010, a further advancement linked compression learning to automatic signal classification. That is, once a given signal was classified into one of six distinct categories, the gain in use, or any gain adjustments made by the patient, automatically generated training within that specific category. This learning would then continue automatically whenever that category was detected, and all six categories were trained independently of each other. This meant that the user no longer had to change programs to take advantage of the machine-learned settings.
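The idea of classifier-linked training can be sketched as one independent learner per sound category. This is a simplified illustration, not the product's algorithm: it trains a single gain offset per category with an exponential average, whereas the text describes compression learning per category. The class name, the learning weight, and the category labels are hypothetical.

```python
class CategoryTrainer:
    """Keeps one independently trained gain offset per sound category."""

    def __init__(self, categories, alpha=0.1):
        self.alpha = alpha  # hypothetical per-update learning weight
        self.offsets_db = {name: 0.0 for name in categories}

    def update(self, category, preferred_offset_db):
        """Train only the category the classifier currently reports."""
        current = self.offsets_db[category]
        self.offsets_db[category] = current + self.alpha * (preferred_offset_db - current)

    def gain_offset(self, category):
        """Return the learned offset to apply when this category is detected."""
        return self.offsets_db[category]


# Placeholder labels; the product's six categories are not listed here.
trainer = CategoryTrainer(["speech_in_noise", "music", "car"])
for _ in range(20):
    trainer.update("music", 4.0)  # user repeatedly turns music up by 4 dB

print(trainer.gain_offset("music") > 0.0)  # True: music has been trained
print(trainer.gain_offset("car"))          # 0.0: car is untouched
```

Keying the learned state by category is what removes the need for manual program changes: whichever category the classifier reports selects both the settings to apply and the learner to update.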

Considerable research with this learning algorithm was conducted at the Australian National Acoustic Laboratories.6 Their findings revealed that after a few weeks of hearing aid use, the learning was individualized for each of the six conditions, illustrating differences in preferences for such diverse situations as speech in background noise, listening to music, or communication in a car.

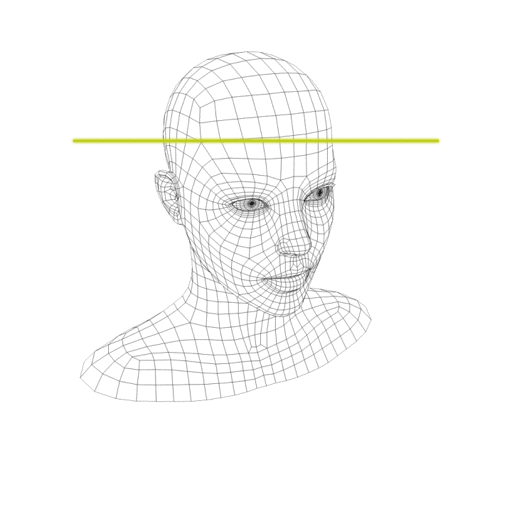

The machine learning advancements continue. Up to this point, we’ve been talking about learning that might not be complete until after a week or two of hearing aid use. In 2017, Signia introduced a new machine learning algorithm whose training takes only a few seconds. This unique learning algorithm was labeled Own Voice Processing (OVP), but importantly, it is not just processing; it is also voice recognition.

As reported by Hoydal,7 OVP is accomplished by training the hearing aids to recognize the wearer’s voice, which requires about 10 seconds of live speech from the user while wearing the hearing aids. Using bilateral full-audio data sharing (based on the “ultra HD e2e” wireless communication protocol), the hearing aids “scan” the acoustic path of their own placement relative to the wearer’s mouth. Based on this analysis, the hearing aids are able to detect when speech is coming from the wearer versus his/her surroundings, even if speech is coming from a conversation partner situated directly in front of the wearer. Additionally, if the wearer’s voice changes for some reason, the detection remains precise, because it scans the unique sound propagation pattern as sound travels from the wearer’s mouth to the hearing aid microphones, rather than the frequency characteristics of the wearer’s voice.

Research with this new machine learning algorithm has clearly shown the benefits. Satisfaction with the sound of one’s own voice increases substantially, even when fitted with closed ear canal couplings.8 Likewise, hearing aid users report that the sound of their voice is significantly more natural when OVP is activated.9 And, perhaps most importantly, when OVP is used, new hearing aid users report that they are more engaged in conversations.10

Artificial intelligence, deep learning, and machine learning are terms that we will continue to hear more and more, including in the world of hearing aids. Since 2006, Signia has employed machine learning in its products, enhancing the automatic function of these instruments, and as a result, providing greater patient benefit and satisfaction. The recent success of own voice recognition and processing provides a glimpse of what the future of hearing aid machine learning has in store. Stay tuned!

Dr. Matthias Froehlich is global audiology strategy expert for WS Audiology in Erlangen, Germany. He is responsible for the definition and validation of the audiological benefit of new hearing instrument platforms. Dr. Froehlich joined WS Audiology (then Siemens Audiology Group) in 2002 and has since held various positions in R&D, Product Management, and Marketing. He received his Ph.D. in Physics from Goettingen University, Germany.

Thomas A. Powers, Ph.D., is the Managing Member of Powers Consulting, LLC, providing management consulting to the hearing health industry. Dr. Powers serves as an expert audiology consultant for the Better Hearing Institute, and as a Senior Consultant for Sivantos, Inc. Dr. Powers received his B.S. from the State University of New York at Geneseo, and his M.A. and Ph.D. in Audiology from Ohio University. He began his career as a partner in an audiology private practice, and has over 35 years of experience in the hearing health care industry. Prior to his current role, Dr. Powers was Vice-President, Government Services and Professional Relations for Sivantos, Inc.