The new way: Ensuring consistent support and individualized care through the Signia Assistant

Erik Harry Høydal, MSc; Rosa-Linde Fischer, PhD; Vera Wolf, Dipl.-Ing. (FH); Eric Branda, AuD, PhD; Marc Aubreville, Dipl.-Ing., System Architect

It is well recognized that the starting point for determining appropriate frequency-specific gain and output for a hearing aid wearer is the use of a validated prescriptive fitting approach. It is also well known that, for most wearers, fine-tuning is necessary, either on the day of the fitting and/or following real-world listening experiences.

Since the early development of behind-the-ear devices (mid-1950s), it has been possible to adjust the frequency response of a hearing aid through the use of a screwdriver-controlled potentiometer. In the late 1980s, digitally programmable hearing aids became available, which created a quantum leap in the ability of the hearing care professional (HCP) to individualize the fitting. The degree of adjustability increased even more a decade later, when digital hearing aids were introduced.

Along with the increased ability for the HCP to make precise adjustments across different hearing aid parameters came the increased responsibility to “get it right” and fulfil the expectation of optimization from hearing aid wearers. The right adjustment in response to a user complaint, however, is not always straightforward. When the HCP hears from the wearer that “Things are just too loud,” does he or she change overall gain, the AGCi kneepoints, or the AGCo kneepoints? And at what frequencies?

To provide some organization to this new world of hearing aid adjustability, Jenstad, Van Tasell and Ewert [1] conducted a survey of clinical audiologists and developed a vocabulary of 40 different terms that patients use to describe their fine-tuning needs. In a follow-up survey, the authors asked 24 “expert” audiologists to describe their method of adjusting the hearing aid fitting when the patient’s complaint was one of the frequently reported terms. There was a high degree of agreement among the experts, providing a starting point for an expert system for fine-tuning hearing aid fittings. Based in part on the findings of Jenstad et al [1], Siemens developed a Fitting Assistant as part of the Connexx fitting software. Based on specific complaints from the wearer, changes to the programming were suggested in the software, which then could be implemented by the HCP if desired.

While today, on a post-fitting visit, the HCP has the ability to make many different changes to many different hearing aid parameters, and expert trouble-shooting guides are available, questions remain regarding the efficiency and validity of this time-honored approach.

There are several limitations to the fine-tuning process. Fortunately, solutions are now available. In recent years, researchers have shown the advantage of what is termed ecological momentary assessment (EMA) to evaluate real-world hearing aid performance. As has been reported in TeleCare publications [3,4], it is possible, through the use of smartphone apps, to utilize EMA in routine clinical practice. When the wearer opens the app in a given listening situation, the hearing aid retrieves the acoustic information and the hearing aid settings at that time. The wearer no longer needs to “remember” the conditions when a given problem occurs. The next step is then to guide the wearer to provide specific information regarding the problem, and a programming change is made automatically. The wearer then notes whether the problem is solved. If not, the process continues until, hopefully, the problem is solved. What we have just described in brief is the new Signia Assistant.

We mentioned earlier that, with modern hearing aids, there are many adjustments possible, with many features interacting with other features. The Signia Assistant addresses this by using artificial intelligence to learn the customer’s individual preferences [6]. Moreover, it adapts its built-in knowledge about what solutions work best for most customers.

You hear a lot about Artificial Intelligence or AI in today’s tech world. Machine learning, which is an essential part of AI, has been around for a long time.

The advancements of AI truly shine when we look at artificial neural networks, which are strongly inspired by how the human brain works. Our brain is composed of a vast number of cells called neurons. Our ability to learn comes from the fact that these neurons can communicate with each other and create new pathways, enabling us to gain new skills.

While machine learning approaches were previously often created once and aimed to replicate a certain behavior, current approaches are much more dynamic. An individual’s hearing aid is set to match an average target and, while those fixed values are a good choice on average, there can be strong individual differences. Data-driven approaches recognize that there might be more knowledge than we can currently grasp, and neural network approaches can be quickly adapted to reflect newly acquired knowledge. These are all the behind-the-scenes activities of the Signia Assistant.

In the vast majority of situations, hearing aid wearers use the universal hearing program. Consequently, fine-tuning by the expert will also often focus on this hearing program. This is why the Signia Assistant also provides modifications for this program: to ensure the patient benefits from the fine-tuning in a high number of daily situations. All settings that are touched are part of adaptive features, be it Own Voice Processing (OVP), directionality and noise reduction, or compression. As a result, this combines the situation-awareness that is already built into these algorithms with seamless transitions for an individualized but stable sound perception.

Let’s say a wearer tells the Assistant that he or she would like to hear their conversation partner better. A possible solution for this situation could include changes in gain, compression, directionality or noise reduction. The best solution, however, is highly individual, and might depend on the individual’s hearing loss, cognitive capabilities, or sound shape preferences. Furthermore, it is highly dependent on the situation, which is certainly very different from the situation in which the hearing care professional fitted the devices. This complex, multidimensional dependency is hard to put into easy rules. Luckily, this is where artificial neural networks can help us [6].

One option is to create a system that starts from what we think is the most likely best solution. This gives a great basis, but it also means that the Signia Assistant has room to explore different solutions and learn if something else potentially works even better for the individual.

If it does not, the first — most obvious — solution will gain strength in future decision making. If, however, the alternative solution proves to be even better over time, for many wearers, it climbs the hierarchy and becomes more likely to be used by the Signia Assistant in future similar situations.
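The explore-and-reinforce behavior described above can be illustrated with a toy epsilon-greedy selector. This is only a sketch under stated assumptions: the source describes a neural network and does not disclose its algorithm, so the class name `SolutionPicker`, the solution labels, the prior scores and the exploration rate are all hypothetical.

```python
import random


class SolutionPicker:
    """Toy epsilon-greedy selector (hypothetical; not the Signia Assistant's algorithm)."""

    def __init__(self, prior_scores, explore_rate=0.1, seed=None):
        # prior_scores stands in for the built-in belief about which
        # solution is most likely to help (values are made up).
        self.scores = dict(prior_scores)
        self.counts = {name: 1 for name in prior_scores}
        self.explore_rate = explore_rate
        self.rng = random.Random(seed)

    def propose(self):
        # Occasionally try an alternative; otherwise use the current best.
        if self.rng.random() < self.explore_rate:
            return self.rng.choice(sorted(self.scores))
        return max(self.scores, key=self.scores.get)

    def feedback(self, name, helped):
        # Update a running mean: 1.0 if the wearer kept the change, else 0.0.
        self.counts[name] += 1
        reward = 1.0 if helped else 0.0
        self.scores[name] += (reward - self.scores[name]) / self.counts[name]


picker = SolutionPicker(
    {"more_gain": 0.6, "more_directionality": 0.5}, explore_rate=0.0
)
picker.feedback("more_gain", helped=False)           # obvious solution rejected
picker.feedback("more_directionality", helped=True)  # alternative accepted
print(picker.propose())  # more_directionality
```

With exploration switched off for the demonstration, a single rejection of the initially favored solution and a single acceptance of the alternative are enough to reverse their ranking. A real system would weigh far more evidence across many wearers before changing its default.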

The other aspect is that the neural network of the Signia Assistant can identify that some hearing aid wearers have certain characteristics that increase the likelihood of a certain solution. Previous hearing aid experience, certain hearing loss configurations, and sensitivity to noise are all examples of such characteristics. A group of users with a set of common attributes will then potentially be given different changes to their hearing aid settings than another group.

Additionally, the Signia Assistant remembers the individual’s previous preferences and will use that as an added element for which solutions work best for that individual.

This way, two people in the same noisy restaurant will each get the solution the neural network has identified as best specifically for them. Perhaps the best way to understand the Signia Assistant is to walk through how it is actually used in a real-world setting [7]: our hearing aid wearer takes out his smartphone, opens the Signia Assistant and the conversation starts:

![Signia Assistant dialog](/wp-content/uploads/sites/137/2020/05/Signia-assistant-dialog_500px.jpg)

A validation study of the Signia Assistant was conducted at the WS Audiology ALOHA (Audiology Lab for Optimization of Hearing Aids) in Piscataway, NJ. Participants were 15 individuals with bilateral sensorineural hearing loss; all were experienced hearing aid users. There were 7 males and 8 females; the average age was 61 (range 33-78 years). Their symmetrical, downward-sloping mean audiogram ranged from 30-40 dB in the low frequencies to 60 dB at 4000 Hz. As a recruitment criterion, all participants owned and were able to operate a smartphone.

Using the fitting software Connexx 9.2, the subjects were fitted bilaterally to Signia Xperience Pure7X RIC hearing aids. Click sleeve fitting tips were selected that were appropriate for the individual’s hearing loss. The hearing aids were fitted to the NAL-NL2 prescriptive method, verified with probe-microphone measurements. Hearing aid special features were set to default parameters. OVP was trained and activated. All settings were copied to a second program, which would later be used to verify the user changes made in the home trial.

With the participants aided bilaterally, speech recognition testing was conducted in an audiometric test suite. For recognition in quiet, the speech material was the Auditec recording of the NU-6 monosyllabic word lists presented at 55 dB SPL. A second speech recognition measure was the American English Matrix Test (AEMT). The AEMT is an adaptive sentence test used with a competing speech noise, which was presented at 65 dB SPL. SRT80 was used as the test criterion (the SNR at which participants repeated 80% of the words of the sentences correctly). For both speech measures, the target speech was presented from 0 degrees azimuth; for the AEMT, the competing speech noise was delivered from 180 degrees.

Following the initial lab testing, the participants were transferred to an independent usability resources research group, where they were instructed on the use of the Signia Assistant app. After successful training on the app, the participants used the hearing aids in a 10-14-day home trial. They were instructed to use the app on demand in their daily life and were given a list of specific listening situations outside the home to experience. During the week, the participants were contacted to ensure that they were using the app, were taking part in the requested listening experiences, and understood the operation. They were also asked to complete the System Usability Scale by Brooke [8] to rate the user friendliness of the app.
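For readers unfamiliar with the System Usability Scale, its standard scoring can be sketched as follows. The function name `sus_score` and the example responses are illustrative only. The example is not study data, and this article reports item-level agreement rather than an overall SUS score.

```python
def sus_score(responses):
    """Return the 0-100 SUS score for ten responses, each on a 1-5 scale."""
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 item responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for index, response in enumerate(responses, start=1):
        if index % 2 == 1:
            total += response - 1  # odd items are positively worded
        else:
            total += 5 - response  # even items are negatively worded
    return total * 2.5             # rescale the 0-40 sum to 0-100


example = [4, 2, 5, 1, 4, 2, 4, 2, 5, 1]  # made-up responses, not study data
print(sus_score(example))  # 85.0
```

Because odd and even items are worded in opposite directions, a respondent who answers every item with the neutral value 3 scores exactly 50.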

Following the home trial period, the participants returned to the ALOHA, at which time speech recognition testing was again conducted. For this visit, speech in quiet and the AEMT were administered for both the original NAL-NL2 programmed settings (stored in the 2nd program) and for the fitting that resulted from the use of the Signia Assistant (1st program “Universal”). The participants also completed a questionnaire on their experiences regarding benefit and satisfaction with the use of the Signia Assistant.

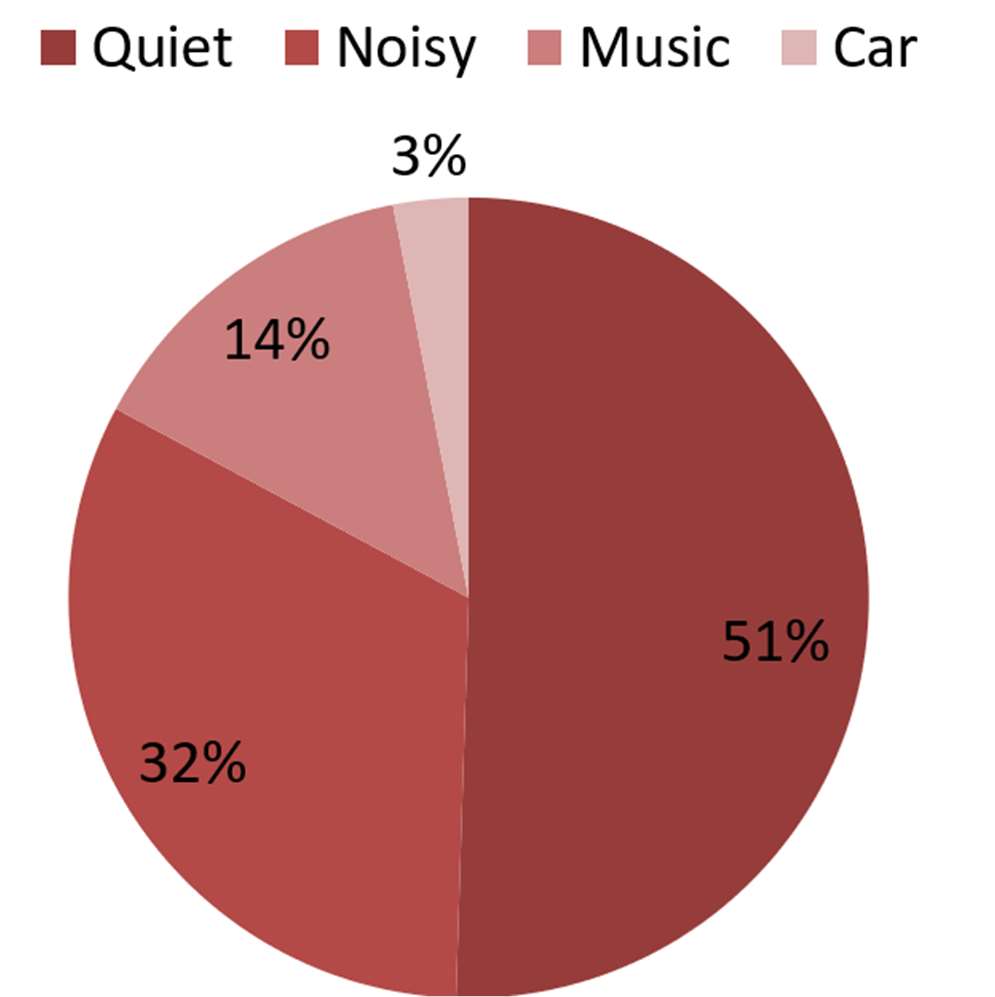

At the time of the fitting, for research purposes, the app for each participant was coded, so that use data could be retrieved at the conclusion of the study (Note: For normal use of the Signia Assistant, all data are 100% anonymized to ensure full data privacy). These data revealed that the average participant used the hearing aids for 147 hours during the trial period. The most common environment was quiet, followed by noisy, listening to music and in a car (see Figure 2). During the trial period, the Assistant was used a total of 266 times, an average of 18 per participant. The most common reason for using the Assistant was Sound Quality (62%), followed by Other Voices (25%) and Own Voice (13%). Recall that all participants were fitted and trained with Own Voice Processing, which is designed for an improved perception of own voice; the low share of Own Voice requests suggests that it works quite well.
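As a quick check on the usage figures above, the arithmetic can be sketched as follows. The variable names are illustrative, and the daily wear time is only a range, because the source gives the trial length as 10-14 days rather than a per-participant value.

```python
participants = 15
total_uses = 266
mean_wear_hours = 147      # average total wear time per participant
trial_days = (10, 14)      # shortest and longest trial length

uses_per_participant = total_uses / participants
hours_per_day = [mean_wear_hours / days for days in trial_days]
reasons = {"Sound Quality": 62, "Other Voices": 25, "Own Voice": 13}

print(round(uses_per_participant, 1))        # 17.7
print([round(h, 1) for h in hours_per_day])  # [14.7, 10.5]
print(sum(reasons.values()))                 # 100
```

Under these assumptions, the reported average of 18 uses per participant is the rounded value of 17.7, and the average wear time corresponds to roughly 10.5 to 14.7 hours per day.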

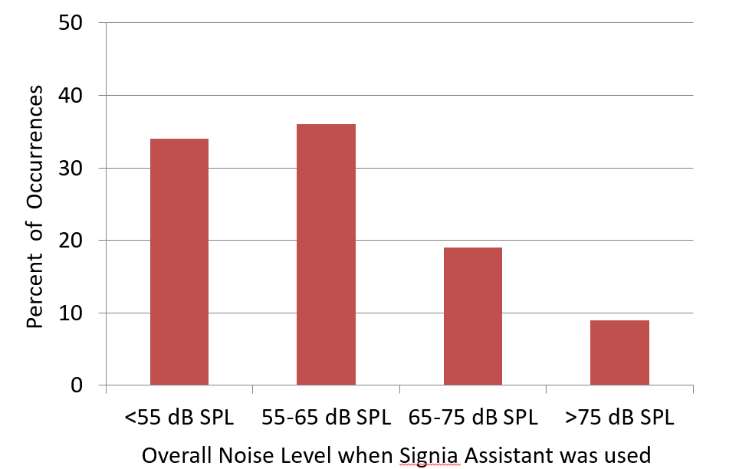

The decision making of the Signia Assistant is driven in part by the overall noise level of the situation in which the user accessed the app. The distribution of the background noise levels present at the time of the 266 events is shown in Figure 3. Observe that in two-thirds of the cases, the Assistant was used when the overall SPL was 65 dB or less. This is consistent with the finding that 50% of the events were for quiet (see Figure 2).

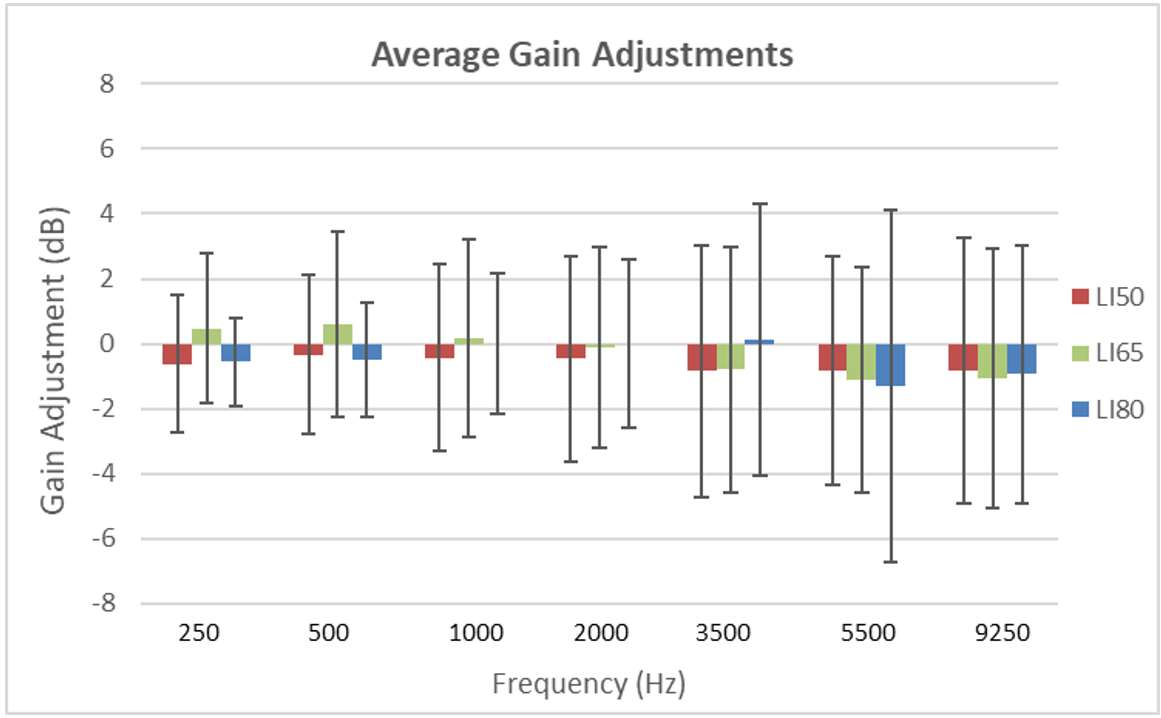

It was of particular interest to examine what gain changes resulted from the use of the Signia Assistant. There is always some concern that, following a verified fitting by an HCP, the patient will make unreasonable changes if given control. This was not the case. Shown in Figure 4 are the changes in gain for 65 dB SPL input that were present at the end of the home trial (recall that all participants were originally fitted to the NAL-NL2 targets). The wearers preferred very different sounds and adapted their hearing aid settings in both directions: some decreased and others increased amplification, and some changed to more and others to less compressive processing.

The means of the individual data revealed values that were 1-2 dB below the NAL-NL2 and resulted in a mildly changed compression scheme in the low to mid frequencies. This is consistent with findings with trainable hearing aids, when the original fitting was the NAL-NL2 (Keidser et al [9]).

Dynamic Soundscape Processing steers the automatic behavior of the noise reduction and directionality algorithms, and the preferred strength is also assumed to be highly individual. This is reflected in the wide spread of the settings that resulted from the use of the Signia Assistant, from a slight decrease relative to the default setting to a strong increase (see Figure 5).

![Signia Assistant: changes in OVP and DSP](/wp-content/uploads/sites/137/2020/05/Signia-Assistant_Changes-in-OVP-and-DSP.jpg)

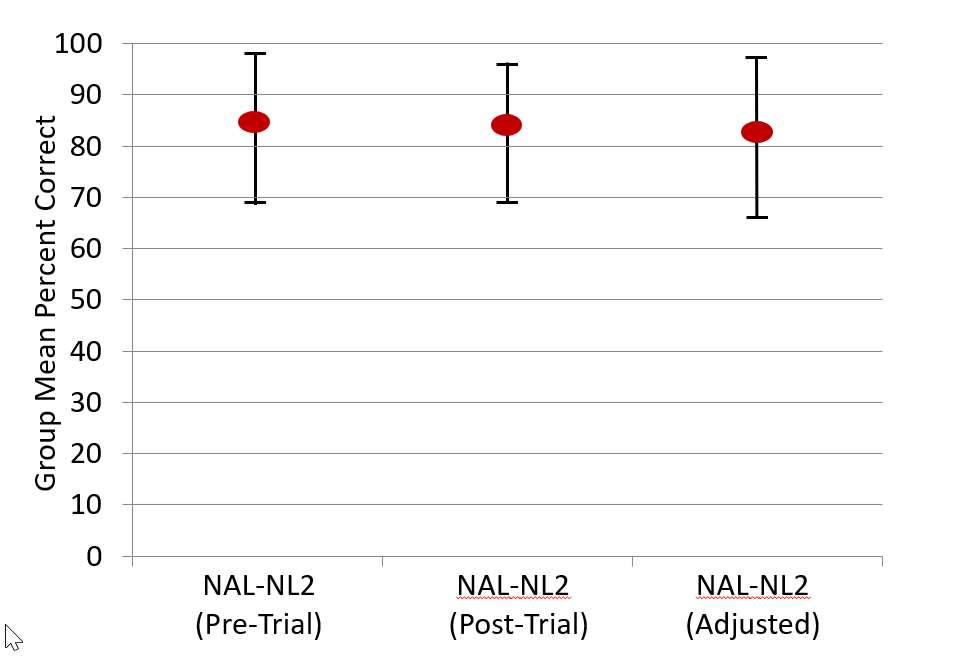

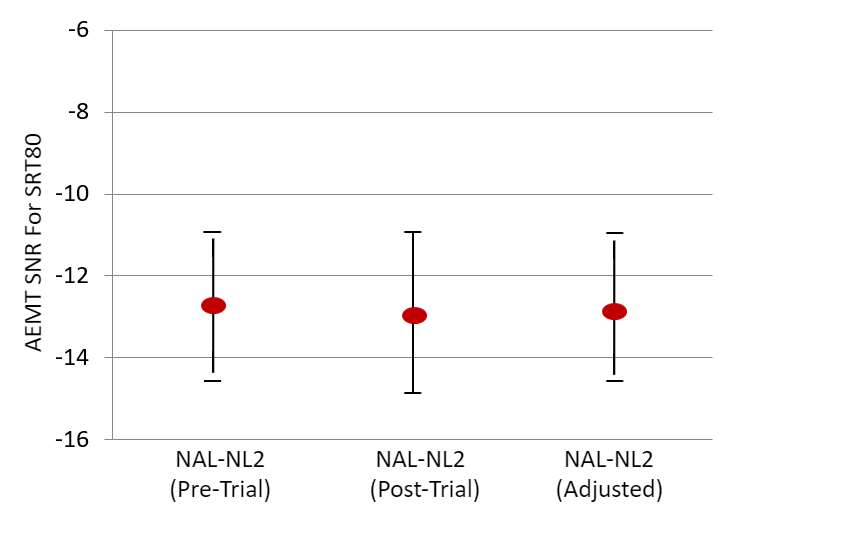

Following the home trial, speech recognition testing was repeated for both the NU-6 (in quiet) and the AEMT, for the original NAL-NL2 fitting and for the fitting that resulted from the use of the Signia Assistant. The order of testing was counterbalanced. The results for speech recognition in quiet are shown in Figure 6. Included are the findings from tests with the NAL-NL2 programming that were obtained on the day of the fitting (before the home trial). A slightly-softer-than-normal presentation level (55 dB SPL) was purposely selected, so that important changes in audibility would be detected. The data revealed no significant difference among the three test conditions (p>.05). That is, this speech test suggests that the Signia Assistant sustained recognition performance.

The results of the AEMT are shown in Figure 7. As with the speech-in-quiet testing, no significant differences between test conditions were observed (p>.05).

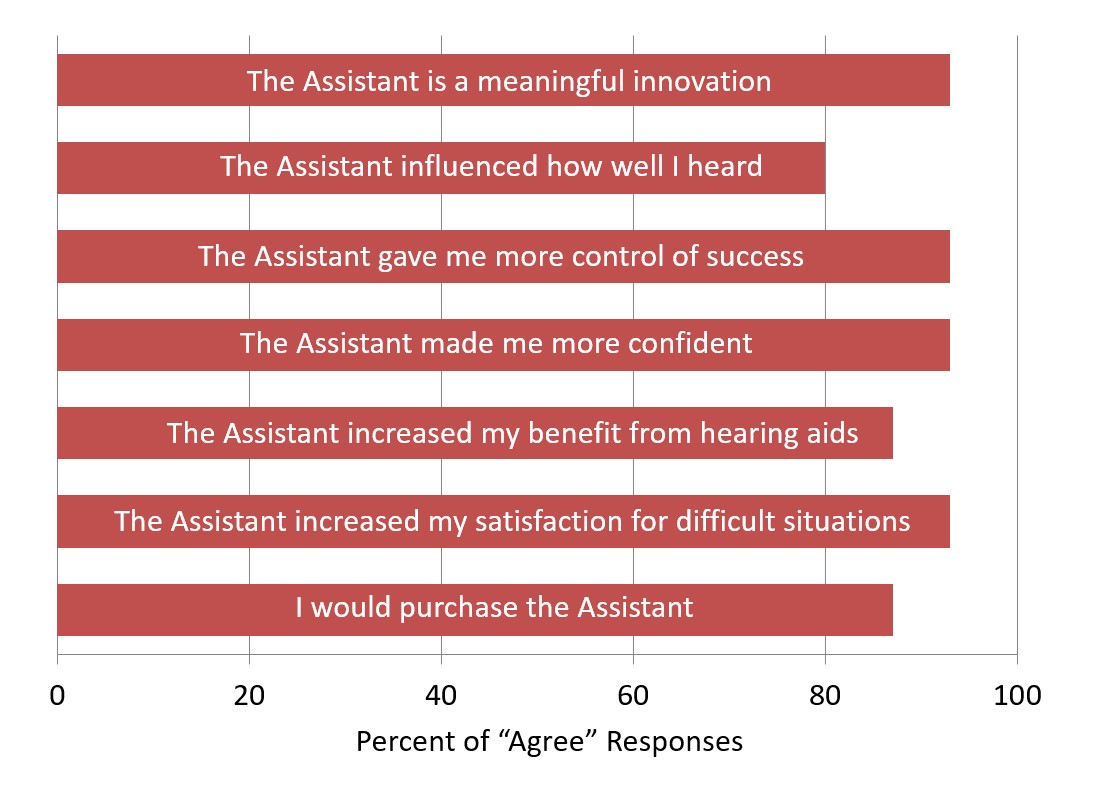

The user experience of the Signia Assistant, rated after seven days of use, was very positive. On a five-point scale (Strongly Agree to Strongly Disagree), 80% agreed that they had confidence in the system, and 73% agreed that they would like to use it frequently, that it was not too complex, and that it was easy to use. Only 7% believed that it was too complex and difficult to use, and only 14% believed that they would need technical assistance. Perceived benefit and satisfaction were rated at the final appointment on a 7-point scale (Strongly Agree to Strongly Disagree, with a mid-point rating of Neutral). Figure 8 displays the findings for these questions as the summed percentages of the three “agree” ratings (5, 6 or 7). As shown, the findings are all very positive, with many items at 93% agreement and no more than 7% of responses in the “disagree” category for any item. When asked a final question, whether they would recommend the Signia Assistant to a friend, on a 1-10 scale (1=Strong No; 10=Strong Yes), all participants gave a rating of at least 5, and 73% gave a rating of 8 or higher. It is interesting to note that 80% of participants reported that the Signia Assistant improved how well they heard, and 93% stated that using the Assistant improved their satisfaction when listening in difficult situations. Recall that this improvement was not revealed in the speech testing, which goes back to our earlier discussion regarding the value of EMA. An important but difficult listening situation for one individual might be an SNR of +2 dB, and for another, it might be +10 dB.
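With a sample of 15, each reported percentage corresponds to a small whole number of people. The sketch below makes that concrete for a few of the figures above. It assumes that all 15 participants answered every item, which the source does not state, and the helper name `implied_count` is illustrative.

```python
PARTICIPANTS = 15  # sample size reported in the study description


def implied_count(percent, n=PARTICIPANTS):
    """Nearest whole number of participants matching a reported percentage."""
    return round(percent * n / 100)


for percent in (80, 73, 93, 7):
    count = implied_count(percent)
    print(f"{percent}% of {PARTICIPANTS} is about {count} participant(s) "
          f"({100 * count / PARTICIPANTS:.1f}%)")
```

Under that assumption, 93% agreement means 14 of 15 participants, and 7% means a single participant. This is worth keeping in mind when comparing items that differ by only a few percentage points.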

These findings also show that individuals can make changes that improve their hearing for a variety of situations, and yet not reduce their performance for standardized speech tests.

All in all, the Signia Assistant with its neural network aims to ensure that the end-user gets the best possible solution for any given situation, tailored to their specific needs and preferences. It marks an important step in going from assumption-based to data-driven knowledge and moving away from a one-size-fits-all approach to precisely tuned hearing for every individual.

The results of this research clearly show that the people liked and were able to handle their fine-tuning demands with the Signia Assistant. They changed their hearing aid settings in a reasonable way for improved satisfaction in difficult hearing situations without compromising speech intelligibility measures.

Reducing the number of follow-up appointments is linked to higher satisfaction. This study indicates that the Signia Assistant can be a great tool for achieving this. The subjects reported increased satisfaction with how well they heard in general, but also specifically in difficult listening situations. This indicates that the Assistant provided solutions that helped them instantly in situations that are hard to replicate in an office or to describe in retrospect.

We found a high increase in confidence through empowerment among the participants as they felt more in control of their hearing success. This is also reflected in the fact that most said they would look for such an assistant in their next purchase.

This tool is also a great benefit to the hearing care professional, both as an extended arm to the wearer outside the clinic and as a tool for insights into the real-world experience of the wearer.

Erik Harry B. Høydal has been with WS Audiology since 2014. Besides his audiological clinical background, Erik has been involved in research on musicians and tinnitus. He did his MSc in Clinical Health Science at the Norwegian University of Science and Technology (NTNU), and has also worked as a teacher at the Program for Audiology in Trondheim. Høydal has worked politically to raise awareness of hearing impairment through his engagement in The Norwegian Association of Audiologists. Since joining WS Audiology in Erlangen in 2016, his work has included scientific research and portfolio management.

Rosa-Linde Fischer is a scientific audiologist at Sivantos GmbH. She specializes in research and optimization of hearing aid algorithms and gain prescriptions. She received her Ph.D. in Psychoacoustics from the Friedrich-Alexander-University of Erlangen-Nürnberg, Germany. Prior to joining Sivantos in 2011, Dr. Fischer worked as a clinical audiologist.

Vera Wolf is an Audiological Engineer for Sivantos, the manufacturer of Signia hearing aids. She is responsible for feature optimization within the audiology system development team. Mrs. Wolf holds a diploma in Engineering for Audiology and Hearing Technology from the University of Applied Sciences in Oldenburg, Germany. Prior to joining Sivantos (formerly Siemens Audiology), she worked as a clinical pediatric audiologist at the University Clinic in Mainz, Germany.

Dr. Eric Branda is an Audiologist and Director of Research Audiology for Sivantos in the USA. For over 20 years, Eric has provided audiological, technical and product training support around the globe. He specializes in bringing new product innovations to market, helping Sivantos fulfill its goal of creating advanced hearing solutions for all types and degrees of hearing loss. Dr. Branda received his PhD from Salus University, his AuD from the Arizona School of Health Sciences and his Master’s degree in Audiology from the University of Akron.

Marc Aubreville is an engineer who received his M.Sc. (Dipl.-Ing.) from Karlsruhe Institute of Technology in 2009, shortly before joining Sivantos as a signal processing engineer. After seven years of developing directional processing, automatic steering and binaural signal processing algorithms, he became a system architect in 2017. At the same time, he started a part-time PhD at Friedrich-Alexander-Universität Erlangen-Nürnberg in the field of artificial-intelligence-based computer-aided tumor diagnostics, which he finished in 2020.