Signia Assistant Backgrounder

Erik Harry Høydal, Marc Aubreville

Hearing aids have undergone tremendous development over the last 20 years. With increasingly precise speech enhancement and advanced sound processing, they are now able to provide speech understanding and excellent sound quality in almost every situation.

Signia Xperience, the most powerful hearing aid platform to date, can even precisely understand where the wearer is and how they interact within that situation.

One thing that has not changed, though, is how hearing aids are fitted and optimized for the individual wearer. The audiogram has been the sole basis of the fitting, and the wearer’s reports after a trial period have been the only input that hearing care professionals (HCPs) have had for fine adjustment. Until now.

An audiogram only tells us the hearing threshold for pure tones; it does not take the individual’s noise tolerance or sound preferences into account at all. We know that people vary enormously in how sensitive they are to sounds and how much support they need in different situations. So far, the only way to obtain this information has been to ask wearers how they have been hearing over the last few weeks and which problems occurred. For an end-user, it is extremely hard to answer these questions in a way that the HCP can translate directly into a hearing aid adjustment that precisely addresses the wearer’s problem.

That is why we developed the Signia Assistant.

The Signia Assistant is both the tool for the modern audiologist and the tool for the modern wearer.

If a wearer is in a situation where they can’t hear their conversation partner, a restaurant for example, they can simply click on the Signia Assistant in the Signia app. The Assistant will then analyze the environment they’re in, and suggest a new setting, made to improve that specific situation.

With the Assistant, the wearer can instantly participate in the conversation again, there and then, not two weeks later at the follow-up appointment.

When the wearer chooses to keep the new setting, happy to hear better, the Assistant remembers that individual’s preference and keeps optimizing the settings to tailor the sound to that unique wearer.

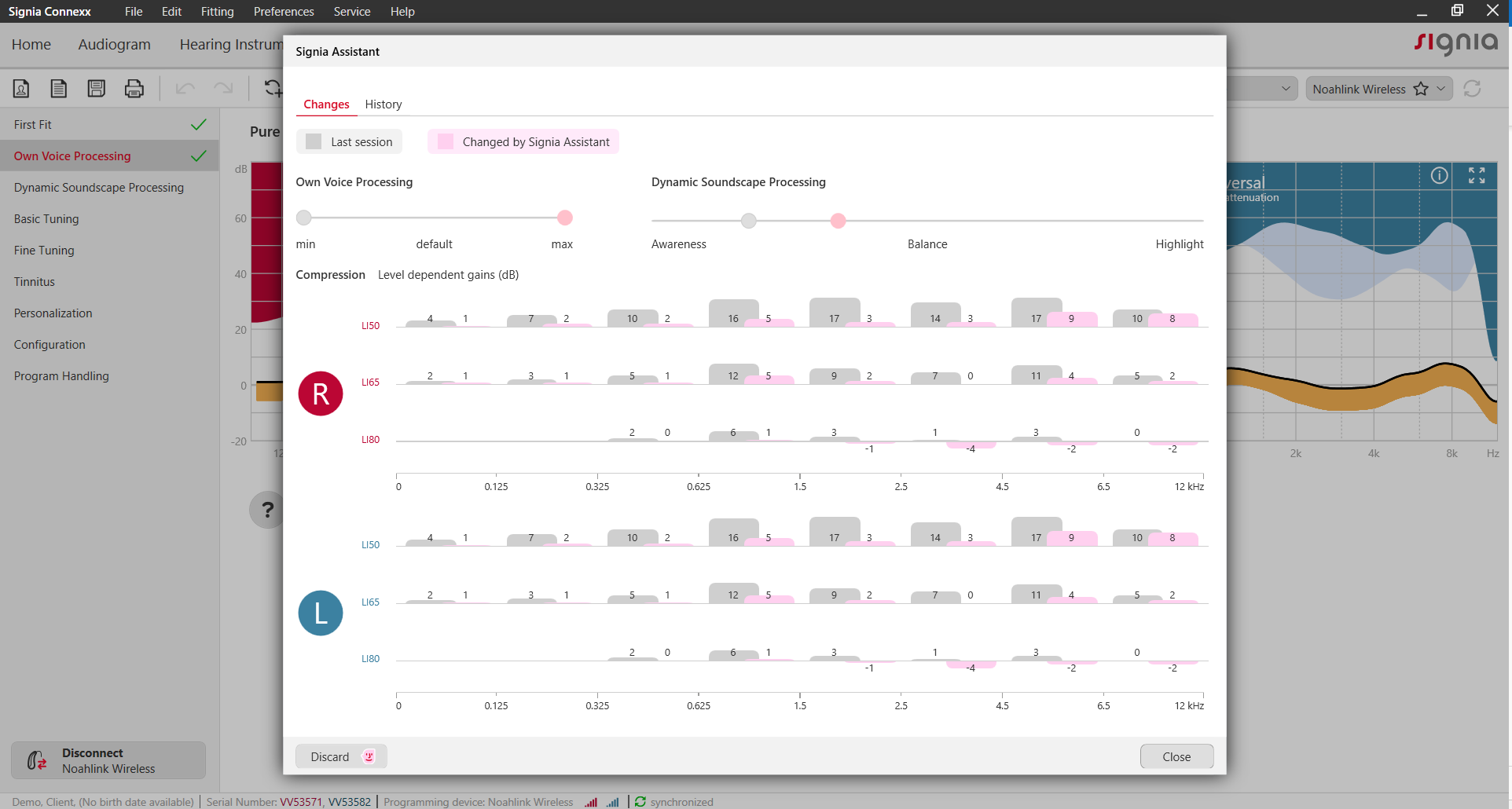

When the wearer does return, however, the HCP sees an overview of all the changes made by the Assistant. With this new level of insight, the HCP can achieve more precise fittings than ever before. The Assistant thus supports both the wearer and the HCP in finding the sweet spot for every individual.

While the user interface is kept as simple as possible so that anyone can use it, the magic truly happens in the background.

When a wearer activates the Signia Assistant for support, the analysis of their current environment is compared with thousands of similar events worldwide, and, based on advanced machine learning algorithms, the solution with the highest success rate for that situation is suggested to the wearer.

This means that every time the Signia Assistant is used, it gains more knowledge and improves the support for people in similar situations all over the world. In parallel, it learns the preferences of every individual wearer to create the optimal tailored solution for each and every one.

It’s fair to say that the Signia Assistant is the next level in modern optimized care.

The Signia Assistant is found in two places:

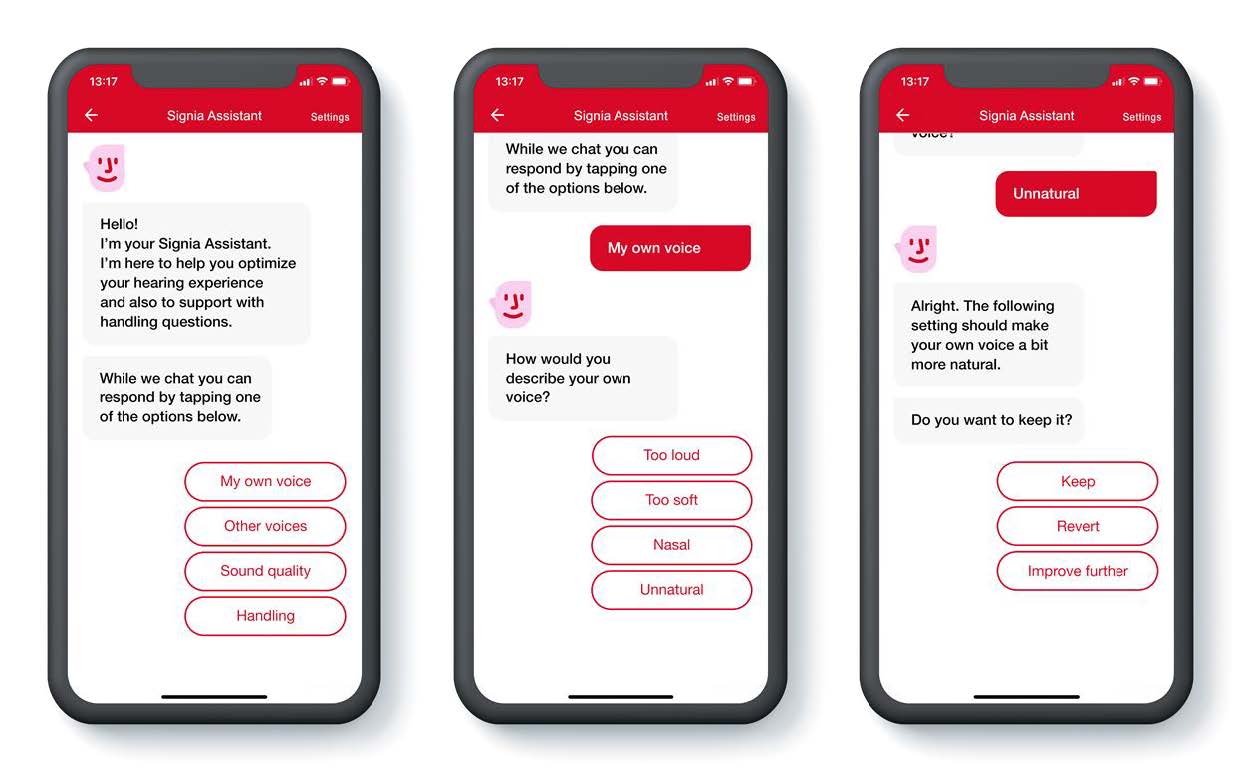

• In the Signia app, for the end-user (Fig. 2), available for all supported Signia Xperience devices with Bluetooth.

• In the fitting software Connexx, for hearing care professionals (Fig. 3 below).

For more detailed instructions on usage, please see “How to use Signia Assistant” (Wolf, 2020).

When the wearer is in a situation where they have an issue or want to change their hearing experience, they can click on the Signia Assistant icon in the Signia app.

When the wearer opens the Signia Assistant for support, the Assistant reads the wearer’s current acoustic environment from the hearing aid(s). The user can then choose their issue from a simple menu provided by the Assistant. If the user is in a noisy restaurant, for example, and reports that they can’t hear what is being said, the Signia Assistant changes the hearing aid settings to optimize the listening experience for this and similar environments. Such a change could, for example, move the Dynamic Soundscape Processing (DSP) slider towards more focus, increasing directionality and noise reduction, and/or adjust the frequency shaping to improve speech intelligibility. All changes are based on more than 100 years of experience in the hearing aid industry and on what researchers around the world have found most suitable, but also on what other Signia Assistant users have reported to work best in similar cases.

The wearer is given the option of keeping the suggested settings or declining them (returning to the previous setting). The more people choose to keep a certain setting in a certain situation, the more confident the Assistant becomes in the success of that solution. If people reject a suggested change, that change is rated lower, and the Assistant will choose a solution with a higher success rate for the next person using it. This way, every user of the Signia Assistant worldwide helps improve the solution for everyone else (users can, of course, opt out of contributing to the anonymized global data learning). As a result, users can improve their hearing within seconds and participate again in the situation they were previously struggling with.
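The keep-or-decline loop just described can be illustrated with a deliberately simplified sketch. It does not reproduce the Assistant’s actual model (which the text describes as a neural network); it only shows the principle of rating each candidate change by the share of wearers who kept it in a given situation and suggesting the best-rated one. The situation labels, change names and the `SuggestionRanker` class are hypothetical.

```python
from collections import defaultdict


class SuggestionRanker:
    """Hypothetical sketch: rank candidate changes by how often wearers keep them."""

    def __init__(self, candidates):
        self.candidates = list(candidates)
        # (situation, problem, change) -> [times kept, times declined]
        self.counts = defaultdict(lambda: [0, 0])

    def success_rate(self, situation, problem, change):
        kept, declined = self.counts[(situation, problem, change)]
        # Laplace smoothing: an untried change scores 0.5 instead of an undefined 0/0
        return (kept + 1) / (kept + declined + 2)

    def suggest(self, situation, problem):
        # Propose the change with the highest estimated success rate
        return max(self.candidates,
                   key=lambda change: self.success_rate(situation, problem, change))

    def record(self, situation, problem, change, kept):
        # Wearer feedback: keeping raises the rating, declining lowers it
        self.counts[(situation, problem, change)][0 if kept else 1] += 1


ranker = SuggestionRanker(["more_focus", "less_loud_gain", "more_high_freq"])
ranker.record("noisy_restaurant", "cannot_follow_speech", "more_focus", kept=True)
ranker.record("noisy_restaurant", "cannot_follow_speech", "less_loud_gain", kept=False)
print(ranker.suggest("noisy_restaurant", "cannot_follow_speech"))
```

After one "keep" and one "decline", the kept change scores 2/3, the declined one 1/3 and the untried one 1/2, so the next wearer in the same situation is offered the kept change. The smoothing term is a design choice that lets untried options compete instead of being ranked at zero.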

To understand better how this actually works though, we need to dig a bit deeper.

A lot of things are currently called artificial intelligence, or AI. It has become a big buzzword lately, and solutions that were partially invented decades ago are now given this label. However, there has been truly significant progress in artificial intelligence methods recently, enabling technologies such as voice assistants and self-driving cars. What they all have in common is that they make use of vast amounts of previously collected data (and of data collected from their users to improve the service further).

At their core, most of these solutions are based on artificial neural networks, as is the Signia Assistant. Artificial neural networks are inspired by how the human brain works: they consist of a vast number of individual neurons connected by communication paths, and they can be trained to learn relationships. For instance, a network can learn that in loud environments, if the sound is perceived as too loud or too soft, it is sensible to change the compression applied to loud input levels.

But how does such a system learn these relationships? Don’t worry, we won’t unleash hundreds of mathematical formulas here; that level of detail is not necessary to understand the principle. Machine learning systems (of which neural networks are one kind) learn from thousands and thousands of examples. Training them is much like teaching children at school: at the beginning, they can only guess the correct answer, but once they have made some mistakes and learned from them, they become true masters of the job. Of course, when launching such a system, we do not want the Assistant to learn from the ground up with our customers. This is where audiology research comes into play: there is, in fact, quite a lot of literature on the typical complaints of people being fitted with hearing aids and on the most suitable solutions for them. From these results, we were able to generate training material for the Signia Assistant. But there is more: proper parametrization of a hearing aid requires detailed insight into the algorithms it offers, which the literature could not provide. Fortunately, a hearing aid manufacturer has plenty of this knowledge in its research and development departments, and it is also what previous guidelines, such as the Fitting Assistant in Connexx, were based on.

Equipped with all of these insights, we were able to compile a huge amount of training material to educate our Assistant. Besides information about the environment (level, acoustic classification), this material also includes individual factors (e.g., hearing loss). For the initial training, the Signia Assistant is thus provided with a vast number of examples, each mimicking a real wearer giving positive or negative feedback on a change proposed by the Assistant. So, when interacting with the first real end-user, the Assistant has already mastered thousands and thousands of “virtual” end-users and is therefore definitely not starting from scratch.
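The idea of pretraining on “virtual” end-users can be sketched with the smallest possible neural network: a single artificial neuron (equivalent to logistic regression) trained by gradient descent on synthetic keep/decline feedback. The rule that generates the feedback, the two input features and all numeric values are hypothetical placeholders; the actual training material and network architecture of the Signia Assistant are not described here.

```python
import math
import random


def virtual_wearer_keeps(level_db, loss_db):
    # HYPOTHETICAL rule standing in for knowledge from literature and R&D:
    # louder scenes and greater hearing loss make a "more focus" change welcome
    return level_db + 0.5 * loss_db > 95


def make_virtual_examples(n, seed=0):
    rng = random.Random(seed)
    examples = []
    for _ in range(n):
        level = rng.uniform(40, 90)  # environment input level, dB SPL
        loss = rng.uniform(10, 80)   # hearing loss (pure-tone average), dB HL
        label = 1 if virtual_wearer_keeps(level, loss) else 0  # 1 = change kept
        examples.append(((level, loss), label))
    return examples


def features(x):
    # Scale inputs to roughly [-1, 1] so that gradient descent behaves well
    level, loss = x
    return ((level - 65) / 25, (loss - 45) / 35)


def sigmoid(z):
    # Numerically stable logistic function
    if z >= 0:
        return 1 / (1 + math.exp(-z))
    e = math.exp(z)
    return e / (1 + e)


def predict(w, b, x):
    f1, f2 = features(x)
    return sigmoid(w[0] * f1 + w[1] * f2 + b)  # probability that the change is kept


def train_neuron(examples, epochs=100, lr=0.5):
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in examples:
            f1, f2 = features(x)
            err = predict(w, b, x) - y  # gradient of log-loss w.r.t. the pre-activation
            w[0] -= lr * err * f1
            w[1] -= lr * err * f2
            b -= lr * err
    return w, b


w, b = train_neuron(make_virtual_examples(1000))
held_out = make_virtual_examples(500, seed=1)
accuracy = sum((predict(w, b, x) > 0.5) == bool(y) for x, y in held_out) / len(held_out)
print(f"accuracy on new virtual wearers: {accuracy:.2f}")
```

The neuron never sees the generating rule, only labeled examples, yet it learns to predict the feedback of virtual wearers it has not encountered before. A real network would stack many such neurons and use far richer inputs.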

With this, the Signia Assistant is able to provide instant relief for the vast majority of problems from day one. But hearing is also highly individual, and a change in parameters that is appreciated by 99% of users in a given situation might simply not be good enough for the remaining 1%. To account for this, the Assistant strongly incorporates previous interactions with the user and can thereby quickly identify which changes work for, and are appreciated by, that specific individual. These personal preferences are then naturally taken into account for the next proposed solution.
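One common way to let an individual’s history override a global preference is to treat the global success rate as a prior and update it with the wearer’s own keep/decline counts (the posterior mean of a Beta distribution). The text does not say how the Assistant weighs personal against global data, so the following is only an illustrative sketch; `prior_strength`, the function names and the change names are hypothetical.

```python
def personal_success(global_rate, kept, declined, prior_strength=5.0):
    """Posterior mean of a Beta prior centred on the global success rate.

    prior_strength acts like that many 'virtual' past interactions; the more
    real interactions a wearer has, the more their own history dominates.
    """
    return (kept + prior_strength * global_rate) / (kept + declined + prior_strength)


def suggest_for(history, global_rates, prior_strength=5.0):
    # history maps change -> (times kept, times declined) for this wearer
    def score(change):
        kept, declined = history.get(change, (0, 0))
        return personal_success(global_rates[change], kept, declined, prior_strength)
    return max(global_rates, key=score)


global_rates = {"more_focus": 0.99, "more_high_freq": 0.70}
print(suggest_for({}, global_rates))
print(suggest_for({"more_focus": (0, 3)}, global_rates))
```

A new wearer is offered the globally preferred change, whereas a wearer who has declined it three times drops to an estimate of about 0.62 for that change and is offered the alternative (0.70) instead: the “remaining 1%” case from the paragraph above.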

With data coming in from all around the world, the Assistant is able to refine itself significantly over time. This includes detecting relationships that were previously completely unknown: for instance, it could find that certain solutions do not work as well as previously described for certain languages, or it could identify groups of individuals with different compression preferences in loud situations. Such data-driven approaches to hearing aid fine-adjustment acknowledge that there may be more to know than we can currently grasp. And neural networks can be adapted quickly to reflect newly acquired knowledge, even without the need to describe those relationships verbally. Just like our brain. All of this happens behind the scenes of the Signia Assistant.

Since hearing care professionals routinely achieve very good fittings for most situations, especially calm ones, the Signia Assistant focuses its optimization on situations that the initial fitting does not cover as well, and hence needs to offer solutions that account for this situation dependency. We know that most hearing aids are fitted with a single hearing program. Of those fitted with multiple programs, the majority predominantly use the first, universal hearing program with its automatic control. This is not surprising, since wearers typically do not want to interact with their hearing aids: they should just work.

The Signia Assistant is tailored to this need: it only touches settings that are part of the automatic control algorithms, in particular Dynamic Soundscape Processing, Own Voice Processing and compression. This ensures that the wearer is not disturbed by sudden changes when a new acoustic environment is detected. It also makes the solution stand-alone: once the dialog with the Assistant is finished, the new settings work without a Bluetooth connection. The changes are nevertheless highly situation-dependent, because these algorithms incorporate the current situation into their control logic. Let us assume that the user is in a loud restaurant and tells the Assistant that the sound is too dull there. It makes sense to assume that only the current situation is meant, and that the overall hearing aid sound is fine, since it was tuned by a professional who usually has years of experience. By increasing the gain for high frequencies only at high input levels, the hearing aid settings for soft and medium input levels remain untouched, while the wearer instantly gets a crisper sound in the current situation.
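The “too dull in a loud restaurant” example amounts to a gain change that depends on both frequency and input level. A minimal sketch, assuming a simple linear ramp above a knee point: the 2 kHz band edge, the 70 and 80 dB SPL levels, the 4 dB boost and the function name are all hypothetical values chosen for illustration, not Signia parameters.

```python
def hf_offset_db(freq_hz, input_level_db, boost_db=4.0,
                 min_freq_hz=2000.0, knee_db=70.0, full_db=80.0):
    """Extra gain (dB) for one frequency band at one input level.

    Hypothetical sketch: bands below min_freq_hz and input levels at or below
    knee_db get no change; above the knee the boost ramps up linearly and
    reaches boost_db at full_db, so soft and medium inputs keep their fitted gain.
    """
    if freq_hz < min_freq_hz or input_level_db <= knee_db:
        return 0.0
    ramp = min(1.0, (input_level_db - knee_db) / (full_db - knee_db))
    return boost_db * ramp


# Offset table: rows are input levels, columns are band centre frequencies
for level in (50, 65, 75, 85):
    row = [hf_offset_db(f, level) for f in (500, 1000, 2000, 4000)]
    print(f"{level} dB SPL input: {row}")
```

Only the high-frequency bands at loud input levels receive extra gain; the rows for 50 and 65 dB SPL stay at zero, which is exactly the property the paragraph above relies on. The ramp avoids an audible step when the input level crosses the knee.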

Besides the increased satisfaction that comes with devices better tailored to the individual and instant 24/7 support, the HCP gains a completely new level of insight into their users’ hearing success. When the user returns to the clinic, the HCP can read out how the user expressed personal preferences or needs through changes in directionality, noise reduction, own voice processing or gain. This gives a quantified overview that was never available before, providing the HCP with a completely new basis for fine adjustments. Naturally, there is also the option of rolling back changes if desired. For those who have used the Assistant for real-world insights, it will be hard to go back to the “old world” of simply not knowing.

The Signia Assistant is available for all supported Signia Xperience hearing aids.*

*Check local availability with your Signia representative.

Wolf, V. (2020). How to use Signia Assistant.

Erik Harry B. Høydal has been with WS Audiology since 2014. Besides his clinical background in audiology, Erik has been involved in research on musicians and tinnitus. He earned his MSc in Clinical Health Science at the Norwegian University of Science and Technology (NTNU) and has also worked as a teacher in the Program for Audiology in Trondheim. Høydal has been politically engaged in raising awareness of hearing impairment through The Norwegian Association of Audiologists. Since joining WS Audiology in Erlangen in 2016, his work has included scientific research and portfolio management.

Marc Aubreville is an engineer who received his M.Sc. (Dipl.-Ing.) from the Karlsruhe Institute of Technology in 2009, shortly before joining Sivantos as a signal processing engineer. After seven years of developing directional processing, automatic steering and binaural signal processing algorithms, he became a system architect in 2017. At the same time, he started a part-time PhD at Friedrich-Alexander-Universität Erlangen-Nürnberg in the field of artificial-intelligence-based, computer-aided tumor diagnostics, which he completed in 2020.