Split-processing: A new technology for a new generation of hearing aid

Eric Branda, Au.D., Ph.D.

Audiology Practices 13(4), 36-41.

Hearing aids are continually evolving with every platform introduction. Each generation of hearing aid technology incorporates new approaches to managing the listening environment for the user. This is often a combination of chip design and algorithm development. Essentially, the goal is to provide the best speech intelligibility possible for each situation. This becomes more challenging with multiple talkers and more adverse noise conditions. Adding to the complexity of signal processing is that one cannot always assume the conversation partner is positioned in front of the hearing aid user. The hearing aid needs to dynamically adapt and adjust to the listening situation.

Recently, Signia introduced the AX platform of hearing aids to take the next step in managing and augmenting the listening experience. The AX platform builds on former platforms, but also incorporates new approaches to processing. Part of the AX development was to look at how other industries approach sound and explore opportunities to expand on previous approaches.

Soundscape Analysis

A key component of AX processing is the analysis of the listening soundscape. Traditional processing uses modulation and level-based approaches to classify the wearer’s listening environment. Basically, hearing aids use a few select acoustic parameters to sort the soundscape into a limited number of classification buckets. Once the soundscape is assigned to a bucket, appropriate algorithms can be applied. The obvious limitation is that soundscapes are extremely dynamic and far exceed the few classification categories commonly used.

The AX processing takes those factors of level and modulation into account in identifying the acoustic environment. However, other factors can further assist in evaluating the soundscape. Additional factors that AX analyzes are the signal-to-noise ratio, whether the user is talking, the overall ambient modulation of the environment, and whether the hearing aid wearer is stationary or in motion. These additional factors allow for a more dynamic approach to managing the soundscape beyond a limited number of classification categories. Some of these features are implemented in a unique manner in the AX platform.

Motion Sensors

One of the unique tools used by the AX platform is an integrated motion sensor. Motion sensor technology is relatively new to hearing aids. The driving concept for using the motion sensor is to help identify the listening needs of the hearing aid wearer. In a typical conversation, the communication partner is directly in front of the hearing aid wearer. If one assumes a listening environment such as a restaurant or café, it is expected that it is a face-to-face conversation and there is likely some competing background noise. The hearing aid would consequently apply some directionality and noise reduction to help focus on the talker facing the hearing aid user and reduce the competing background sounds. However, if both parties are walking through the same listening environment while talking, the acoustic background has not changed, but they are no longer face-to-face. The listening situation, not the environment, has changed. The hearing aid now must adapt to speech coming from a different angle and provide for the safety of the wearer while walking. In this example, directional microphones may be more of a hindrance than a benefit. For this situation, a motion sensor can detect that the hearing aid wearer is in motion and make the microphone pattern more omnidirectional. Using motion of the wearer to determine microphone mode helps with accessibility to sounds from the sides, even though the acoustic environment in this scenario is likely to be classified as a speech-in-noise situation.

Early approaches to motion sensing relied on the sensors built into smartphones to detect motion. This information could then be transmitted wirelessly to the hearing aid to assist in the processing decisions. More recently, the motion sensor has been integrated into the hearing aid itself. An integrated motion sensor brings new challenges for the hearing aid platform. Along with the obvious need to detect motion, factors such as power consumption and device size also must be considered. Considering these factors, the AX platform uses an accelerometer to detect motion. The accelerometer essentially identifies changes in the wearer’s acceleration in multiple directions while keeping power consumption and space requirements low (Branda and Wurzbacher, 2021).
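
The decision described above can be illustrated with a minimal Python sketch. It is not Signia's algorithm: the function names, the 0.05 g threshold and the class label `"speech_in_noise"` are all hypothetical, chosen only to show how an accelerometer reading might override a purely acoustic classification.

```python
import math
from statistics import pstdev


def is_in_motion(samples, threshold_g=0.05):
    """Return True when acceleration magnitude varies more than a threshold.

    samples: sequence of (x, y, z) accelerations in g.
    threshold_g: hypothetical cut-off, not a published value.
    """
    magnitudes = [math.sqrt(x * x + y * y + z * z) for x, y, z in samples]
    return pstdev(magnitudes) > threshold_g


def microphone_mode(acoustic_class, in_motion):
    """Pick a microphone mode from the acoustic class and the motion flag."""
    if in_motion:
        # Walking wearer: keep sounds from the sides and rear accessible.
        return "omnidirectional"
    if acoustic_class == "speech_in_noise":
        # Stationary, face-to-face conversation in noise: focus to the front.
        return "directional"
    return "omnidirectional"


# Illustrative data: a seated wearer (gravity only) and a walking wearer.
seated = [(0.0, 0.0, 1.0)] * 50
walking = [(0.0, 0.0, 0.8), (0.0, 0.0, 1.2)] * 25

seated_mode = microphone_mode("speech_in_noise", is_in_motion(seated))
walking_mode = microphone_mode("speech_in_noise", is_in_motion(walking))
```

In this sketch the acoustic class is identical in both cases; only the motion flag changes the microphone mode, which mirrors the restaurant example above.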

Studies investigating the use of motion sensors in hearing aids have shown advantages for the wearer. Froehlich et al (2019) reported both laboratory and field measures using technology supported by motion sensors. The laboratory condition assessed user ratings on ease of communication and listening effort with the motion sensor in “on” and “off” conditions. Two listening situations were simulated. One was a restaurant situation with speech from the side. The other was a traffic situation with speech from the side and behind the listener along with traffic noise. In both conditions, participants rated understanding and listening effort significantly better with the motion sensor activated.

Own Voice Processing

The AX platform also uses Own Voice Processing (OVP) to identify when the hearing aid wearer is speaking or if someone else is talking. It comes as no surprise that the needs of the wearer differ between when they are speaking and when they are listening. However, it is not so easy for the wearer to manage hearing aid gain and output differently for these situations. The use of OVP can help mitigate this situation. If the conversation partner is speaking, then the hearing aid processes for speech at the programmed gain levels. However, if the wearer is speaking, then the hearing aid will adjust gain and compression to accommodate for the wearer’s own voice.
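
As a rough illustration of this switching, the Python sketch below selects between two parameter sets depending on an own-voice flag. The gain and compression values are hypothetical placeholders, and the own-voice detector itself is assumed to exist elsewhere; none of this is taken from the AX implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GainSettings:
    gain_db: float
    compression_ratio: float


# Hypothetical values for illustration only.
PROGRAMMED = GainSettings(gain_db=25.0, compression_ratio=2.0)  # partner speaking
OWN_VOICE = GainSettings(gain_db=18.0, compression_ratio=2.5)   # wearer speaking


def settings_for_frame(own_voice_detected: bool) -> GainSettings:
    """Return the parameter set to apply to the current audio frame."""
    return OWN_VOICE if own_voice_detected else PROGRAMMED
```

The point of the sketch is only that the wearer does not have to adjust anything manually: the detector flag drives the change in gain and compression.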

A study investigating OVP evaluated own voice preferences for new hearing aid users (Powers et al, 2018). The users were asked to wear hearing aids in closed, vented and open conditions with OVP “on” and “off.” With each condition participants were asked to rate their preference on a seven-point Likert-like scale (1=Very Dissatisfied, 4=Neutral, and 7=Very Satisfied). In all conditions, the OVP “on” rating was significantly higher than with the feature deactivated. Additionally, the closed fitting with OVP “on” was rated higher than the open condition with OVP “off.”

Two New Approaches to Processing

Analyzing the soundscape is merely the first step in helping the hearing aid wearer hear well in any listening environment. The next step is how the hearing aid processes and manages the listening situation. The AX processor logically applies select algorithms for the listening environment as it identifies the situation. However, AX also takes two distinct approaches in how the processing is executed (Taylor and Høydal, 2021).

Processing in Parallel

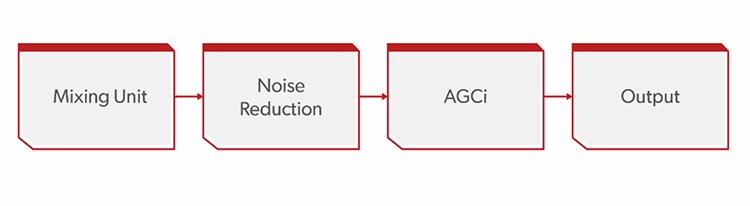

The first core aspect of AX processing is a departure from traditional serial processing approaches. With the traditional approach, the sound is processed in a serial, or step-by-step manner. For example, as shown in Figure 1, the digital noise reduction algorithm may be applied to the input signal first, followed by compression. This serial processing could result in some algorithms undoing or exacerbating effects of the other algorithms and could consequently introduce artifacts.

Figure 1. The serial processing pathway of sound found in all hearing devices except Signia’s AX.

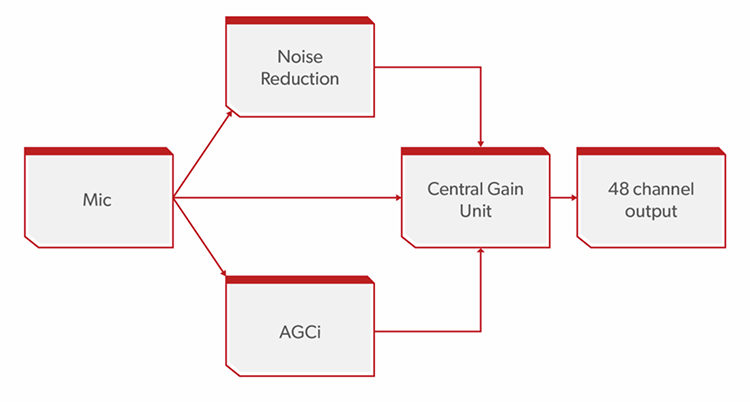

In contrast, the AX platform applies parallel processing, which is depicted in Figure 2. The signal is provided to each algorithm in a parallel fashion, so it is untouched by other algorithms. Following the application of the parallel processing, a central gain unit brings the entire signal together without the overlapping influences and artifacts. The result of parallel processing is a cleaner signal for the hearing aid wearer.

Figure 2. The parallel pathway of incoming sound in the AX platform. Note how the incoming signal is split compared to serial processing in Figure 1.
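
The difference between the two pathways can be made concrete with a small numerical sketch in Python. It works on a single level in dB and uses a hypothetical 8 dB noise reduction and a hypothetical 2:1 compressor with a 50 dB threshold; these functions and values are assumptions for illustration, not the algorithms used in AX or in any other device.

```python
def noise_reduction_gain_db(is_noise, attenuation_db=8.0):
    """Gain (negative = attenuation) requested by a toy noise reducer."""
    return -attenuation_db if is_noise else 0.0


def compression_gain_db(level_db, threshold_db=50.0, ratio=2.0, linear_gain_db=20.0):
    """Gain requested by a toy compressor for a given input level."""
    excess_db = max(0.0, level_db - threshold_db)
    return linear_gain_db - excess_db * (1.0 - 1.0 / ratio)


def serial_output_db(level_db, is_noise):
    """Serial chain: the compressor sees the already attenuated signal."""
    after_nr_db = level_db + noise_reduction_gain_db(is_noise)
    return after_nr_db + compression_gain_db(after_nr_db)


def parallel_output_db(level_db, is_noise):
    """Parallel chain: both algorithms analyze the untouched input, and a
    central gain stage applies the summed gain once."""
    total_gain_db = noise_reduction_gain_db(is_noise) + compression_gain_db(level_db)
    return level_db + total_gain_db


serial_db = serial_output_db(70.0, is_noise=True)      # 76.0
parallel_db = parallel_output_db(70.0, is_noise=True)  # 72.0
```

With these toy settings, a 70 dB noise input would leave either chain at 80 dB if noise reduction were off. In the serial chain the compressor reacts to the quieter signal by adding gain, so only 4 dB of the intended 8 dB reduction survives. In the parallel chain the full 8 dB is preserved, because the compressor never sees the output of the noise reducer.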

Split Processing

Along with the parallel processing, AX technology utilizes another innovative processing approach designed to address a common issue associated with hearing aids. Even with the most current technology, background noise is still an issue for hearing aid use (Picou, 2020). In general, digital noise reduction systems help with comfort in noise, but are not associated with improving speech intelligibility in noise. Improving speech intelligibility in noise is addressed with directional microphones.

Directional microphones, also referred to as beamformers, are used to improve speech intelligibility in background noise (Picou and Ricketts, 2019). Unilateral beamforming has been used more traditionally. In this case, the hearing aid has a front and rear microphone. Directional microphones (unilateral beamformers) are effective when the signal of interest, usually a person talking, is spatially separated from noise. Of course, in many demanding listening situations, it is impossible to spatially separate the talker(s) of interest from unwanted sound.

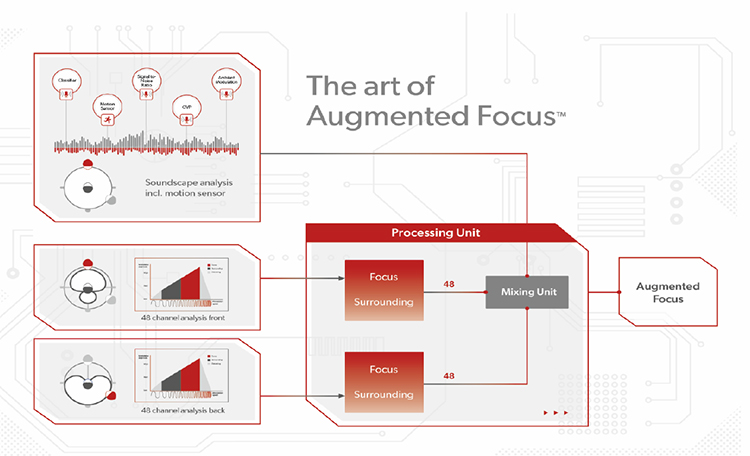

The AX platform, as mentioned, utilizes directional microphones. However, AX introduces a new approach to using them. Traditionally, all input signals processed by the hearing aids would follow similar noise reduction and compression characteristics. That is, whether the input is speech, music or stationary noise like a fan, all the sounds are processed in essentially the same way: the input signal dominating the wearer’s soundscape would drive classification and compression characteristics. With directional microphones, sounds from the rear would receive some additional attenuation, but the same overall noise reduction and compression characteristics would still be applied regardless of the direction of a sound source. With AX, the directional microphones are used to identify front and rear input signals as separate streams and consequently apply separate processing to each stream. This means that target sounds from the front, which are typically speech, are likely to receive compression and noise reduction better suited to a speech signal. The competing sounds from the rear field will have different noise reduction and compression characteristics applied. Applying appropriate processing to each individual stream helps the hearing aid wearer more easily distinguish the target speech signal from the competing background signals. Figure 3 is a schematic demonstrating how the AX split processing works.

Figure 3. Augmented Focus, a new feature in the AX platform, separates input signals into two separate streams that are processed independent of one another. Each stream applies different amounts of compression and noise reduction.
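
A minimal Python sketch of the idea is given below. Each stream carries its own compression and noise reduction settings and is processed independently of the other. The `StreamSettings` values are hypothetical and the compressor is a deliberately simple toy, so the numbers only illustrate the principle and do not describe Augmented Focus itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StreamSettings:
    linear_gain_db: float
    compression_ratio: float
    noise_reduction_db: float


# Hypothetical settings: gentler processing for the front (speech) stream,
# stronger compression and noise reduction for the rear (background) stream.
FRONT = StreamSettings(linear_gain_db=20.0, compression_ratio=2.0, noise_reduction_db=0.0)
REAR = StreamSettings(linear_gain_db=20.0, compression_ratio=4.0, noise_reduction_db=6.0)


def process_stream(level_db, settings, threshold_db=50.0):
    """Apply one stream's own compression and noise reduction to its level."""
    excess_db = max(0.0, level_db - threshold_db)
    gain_db = settings.linear_gain_db - excess_db * (1.0 - 1.0 / settings.compression_ratio)
    return level_db + gain_db - settings.noise_reduction_db


# Front talker and rear noise both arrive at 65 dB (0 dB input contrast).
front_out_db = process_stream(65.0, FRONT)  # 77.5
rear_out_db = process_stream(65.0, REAR)    # 67.75
contrast_db = front_out_db - rear_out_db    # 9.75
```

In this toy case the two streams arrive at the same level and leave with nearly 10 dB of contrast, which is the kind of separation between target speech and background that stream-specific processing is meant to support.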

Bilateral Beamforming

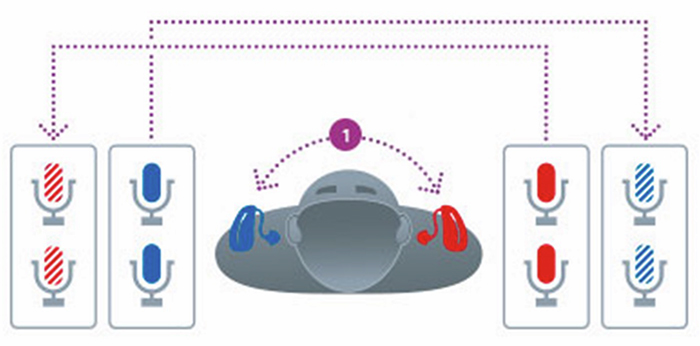

In addition to the split processing and parallel processing, the AX platform is designed with bilateral beamforming technology. In essence, each instrument in a pair of hearing aids has two microphones, just as with a unilateral beamformer. However, bilateral beamforming differentiates itself by utilizing the wireless connection between devices. Signia uses near-field magnetic induction to accomplish this full audio data sharing between devices, unlike other manufacturers who employ 2.4 GHz, which is prone to occasional sound quality issues when used for wireless communication between devices.

In bilateral beamforming, hearing aids share directional information to provide added benefit over the unilateral beamformer. Studies with earlier implementations of binaural beamforming have shown better speech understanding in noise for the hearing aid wearers, even when compared to adult listeners with normal hearing (Froehlich et al, 2014). It is worth noting that full binaural beamforming is often applied in the presence of loud background noise. Typically, when the intensity level reaches just over 70 dB SPL for about ten seconds, the bilateral beamforming system is engaged and the hearing aids go into a narrow directional mode. This application of bilateral beamforming is based on research indicating that in situations with lower levels of background noise, the hearing aid should still provide some audibility for surrounding sounds and thus remain in a more omnidirectional pattern. Figure 4 illustrates the full audio data sharing capabilities of Signia’s bilateral beamforming system and shows that in listening situations of relatively high intensity, the directional pattern is quite narrow.

Figure 4. A key to bilateral beamforming is the combined analysis of the input of all four microphones independently for both the right and left ear. This gives the combined advantage of an 8-microphone array that provides a narrow directional pattern when needed.
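
The engagement rule described above (a level just over 70 dB SPL sustained for about ten seconds) can be sketched as a small state machine in Python. The class name, the mode labels and the immediate release when the level drops are assumptions made for illustration; the text does not describe how the actual system disengages.

```python
class BeamformerSwitch:
    """Engage a narrow beam after the level stays above a threshold long enough."""

    def __init__(self, threshold_db_spl=70.0, hold_seconds=10.0):
        self.threshold_db_spl = threshold_db_spl
        self.hold_seconds = hold_seconds
        self._seconds_above = 0.0

    def update(self, level_db_spl, dt_seconds):
        """Feed one level measurement covering dt_seconds and return the mode."""
        if level_db_spl > self.threshold_db_spl:
            self._seconds_above += dt_seconds
        else:
            # Assumed behavior: the timer resets as soon as the level drops.
            self._seconds_above = 0.0
        if self._seconds_above >= self.hold_seconds:
            return "narrow"
        return "wide"


switch = BeamformerSwitch()
# Ten consecutive one-second measurements at 75 dB SPL.
modes = [switch.update(75.0, 1.0) for _ in range(10)]
# The level then falls to 60 dB SPL.
mode_after_drop = switch.update(60.0, 1.0)
```

Here `modes` stays `"wide"` for the first nine seconds and becomes `"narrow"` on the tenth, and the quieter measurement returns the switch to `"wide"`, so surrounding sounds remain audible at lower noise levels.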

AX Research Support

With the introduction of the AX platform, several studies have been conducted to evaluate AX performance in laboratory and real-world environments.

In one laboratory study, experienced hearing aid wearers compared AX processing with that of other newly introduced hearing aid technologies (Jensen et al, 2021a). The hearing aid wearers performed a modified American English Matrix test (Hörtech) using speech and laughter as the competing signals. With the AX processing, the hearing aid wearers achieved significantly lower (better) speech reception thresholds than with other devices.

In the same study, the performance of these same hearing aid wearers with AX processing was compared to that of normal-hearing participants using the modified American English Matrix test in two different listening situations. The first was designed as a moderate noise situation, similar to a cocktail party, and the second was designed as a louder restaurant situation. For the moderate noise situation, the hearing aid wearers performed equivalently to normal-hearing listeners. For the louder noise situation, hearing aid wearers performed significantly better than the normal-hearing listeners, consistent with previous investigations with binaural beamformers.

In a separate study (Jensen et al, 2021b), satisfaction ratings of experienced hearing aid users wearing their current hearing aids and AX platform hearing aids were compared. They were asked to first rate their own devices on a Likert-type scale (1 = “very unsatisfied”, 7 = “very satisfied”) with six categories as well as thirteen questions related to quality of life from the Speech, Spatial and Qualities of Hearing Scale (SSQ; Gatehouse and Noble, 2004). Participants were then asked to follow a specific wearing schedule. They wore the new AX devices for two weeks followed by their own devices for one week and the AX devices again for one week. At the end of each wearing session they were asked to complete the same questionnaire. After the fourth and final wearing session they were also asked to complete forced choice preferences between devices for six different categories.

Results of the satisfaction scale showed a significant difference in favor of the AX platform for overall satisfaction, sound quality, speech intelligibility, and own voice. Differences for comfort and fit were not significant. For the SSQ questions, all but one category were significantly different, again in favor of the AX processing. The final forced choice questions indicated a 73% to 80% range of preferences for the AX platform and an overall preference of 78% for AX processing.

Conclusion

The AX platform introduces new processing approaches in addition to implementing proven technologies from previous generations. Laboratory and real-world investigations have shown benefits with the AX platform when compared to other technologies. In some listening situations, hearing aid wearers using AX technology have been shown to outperform normal-hearing listeners. It is with these innovations that AX brings technology to a new level while raising the bar for future generations of hearing devices.

References

Branda E, Wurzbacher T. Motion Sensors in Automatic Steering of Hearing Aids. Semin Hear. 2021 Aug;42(3):237-247. doi: 10.1055/s-0041-1735132. Epub 2021 Sep 24. PMID: 34594087; PMCID: PMC8463121.

Froehlich M, Freels K, Branda E. Dynamic Soundscape Processing: Research supporting patient benefit. AudiologyOnline. https://www.audiologyonline.com/articles/dynamic-soundscape-processing-research-supporting-26217. Published December 16, 2019.

Powers T, Froehlich M, Branda E, Weber J. Clinical study shows significant benefit of own voice processing. Hearing Review. 2018;25(2):30-34.

Taylor B, Høydal EHB. Backgrounder Augmented Focus. Signia white paper. https://www.signia-library.com/scientific_marketing/backgrounder-augmented-focus/. Published May 31, 2021.

Picou EM. MarkeTrak 10 (MT10) survey results demonstrate high satisfaction with and benefits from hearing aids. Semin Hear. 2020;41(1):21-36.

Picou EM, Ricketts TA. An evaluation of hearing aid beamforming microphone arrays in a noisy laboratory setting. Journal of the American Academy of Audiology. 2019;30(02):131-144. doi:10.3766/jaaa.17090

Powers TA, Froehlich M. Clinical results with a new wireless binaural directional hearing system. Hearing Review. 2014;21(11):32-34.

Jensen NS, Hoydal EH, Branda E, Weber J. Augmenting speech recognition with a new split-processing paradigm. Hearing Review. 2021a;28(6):24-27.

Hörtech website. International Matrix Tests: Reliable audiometry in noise. https://www.hoertech.de/images/hoertech/pdf/mp/Internationale-Matrixtests.pdf

Jensen NS, Powers L, Haag S, Lantin P, Hoydal EH. Enhancing real-world listening and quality of life with new split-processing technology. AudiologyOnline. 2021b; Article 27929. http://www.audiologyonline.com

Gatehouse S, Noble W. The Speech, Spatial and Qualities of Hearing Scale (SSQ). Int J Audiol. 2004;43(2):85-99. doi:10.1080/14992020400050014