Evidence Supports the Advantages of Signia AX's Split Processing

Brian Taylor & Niels Søgaard Jensen

Introduction

Recently, Signia introduced an innovative processing algorithm, Augmented Focus™.1,2 Briefly stated, this processing, enabled by Signia's beamformer technology, splits incoming acoustic signals into two separate streams. One stream predominantly contains sounds coming from the front of the wearer, while the other contains sounds arriving from behind. Each stream has a dedicated processor that analyzes the characteristics of the incoming sound and determines whether it contains information the wearer wants to hear, processed in the Focus stream, or unwanted or distracting sounds, processed separately in the Surrounding stream. In addition to the expected signal-to-noise ratio (SNR) benefits, split processing enhances the wearer's perception of background sounds by providing fast and precise gain adjustment for surrounding sounds. This improves the stability of background sounds and, consequently, enhances the wearer's perception of the entire soundscape. For a complete review of the processing behind Augmented Focus, see Best et al.1 and Jensen et al.2
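To make the two-stream idea concrete, the sketch below builds a front-facing and a back-facing stream from two omnidirectional microphones with a textbook delay-and-subtract (first-order differential) beamformer, then recombines them with separate gains. This is not Signia's implementation, which is described by Best et al.1 and Jensen et al.2; the one-sample inter-microphone delay, the function names `split_streams` and `mix`, and the fixed gain values are hypothetical simplifications (the actual system adjusts its gains adaptively).

```python
def split_streams(front_mic, back_mic):
    """Delay-and-subtract split of two omni microphone signals into a
    front-facing (Focus) and a back-facing (Surrounding) stream.

    Hypothetical simplification: sound takes exactly one sample to travel
    between the two microphones.
    """
    focus, surrounding = [], []
    for n in range(len(front_mic)):
        prev_front = front_mic[n - 1] if n > 0 else 0.0
        prev_back = back_mic[n - 1] if n > 0 else 0.0
        focus.append(front_mic[n] - prev_back)        # cancels sound from behind
        surrounding.append(back_mic[n] - prev_front)  # cancels sound from the front
    return focus, surrounding


def mix(focus, surrounding, focus_gain=1.0, surrounding_gain=0.5):
    """Recombine the streams; fixed gains stand in for the per-stream processors."""
    return [focus_gain * f + surrounding_gain * s for f, s in zip(focus, surrounding)]


# A sound from behind reaches the back microphone one sample before the front one
source = [1.0, 0.5, -0.25, 0.0, 0.75]
back = source
front = [0.0] + source[:-1]
focus, surrounding = split_streams(front, back)
print(focus)  # [0.0, 0.0, 0.0, 0.0, 0.0]
```

Swapping the roles (sound from the front) silences the Surrounding stream instead, which is the directional separation a two-stream design relies on; independent gains can then be applied to each stream before they are summed.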

Whenever a new hearing aid technology is introduced, it is incumbent upon the manufacturer to ensure that it is efficacious, effective and, most importantly, efficient. This is accomplished through a series of rigorous benchtop, laboratory, clinical and real-world research protocols, which are summarized in this white paper. Efficacy is the capacity of a given intervention to lead to a successful wearer outcome under ideal or controlled conditions. Effectiveness is the ability of an intervention to have a meaningful effect under the typical, real-world conditions experienced by wearers, while efficiency is the ability to make decisions and recommendations in the clinic that lead to a cost-effective outcome for the wearer. To determine effectiveness and efficiency, clinical studies usually test individuals with hearing loss using objective measures, speech recognition and listening effort assessments, and real-world self-assessments of benefit and satisfaction. Signia's Augmented Focus technology has been evaluated extensively in this way over the past two years, and in this report we briefly summarize much of the research evidence collected in support of its wearer benefit.

Predicted SNR Benefit

Benchtop testing to establish the efficacy of Signia split processing was conducted via electroacoustic measurements on KEMAR (Knowles Electronics Manikin for Acoustic Research).3 The speech signal was the International Speech Test Signal (ISTS), presented from the front of KEMAR, and the background noise was the International Female Noise of the EHIMA, presented from behind KEMAR. Using an internationally recognized electroacoustic phase inversion procedure,4 it was possible to calculate the output signal-to-noise ratio (SNR) after the signal processing of the hearing aids, that is, the SNR that would be expected in the ear canal if the instruments were worn by an individual.
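The phase inversion procedure4 rests on a simple idea: run the hearing aid twice, once with speech plus noise and once with speech plus polarity-inverted noise. If the aid behaves approximately linearly across the two runs, adding the two outputs cancels the noise and subtracting them cancels the speech, so the processed speech and noise can be separated and their power ratio computed. The Python sketch below illustrates this under stated assumptions: `process` is a hypothetical stand-in for the hearing aid, and the time alignment and frequency weighting used in real KEMAR measurements are omitted.

```python
import math


def mean_power(x):
    return sum(v * v for v in x) / len(x)


def phase_inversion_snr(process, speech, noise):
    """Output SNR (dB) of `process`, estimated with the phase inversion method.

    Assumes `process` treats the two runs (nearly) linearly and time-aligned.
    """
    out_a = process([s + n for s, n in zip(speech, noise)])   # speech + noise
    out_b = process([s - n for s, n in zip(speech, noise)])   # speech + inverted noise
    speech_out = [(a + b) / 2 for a, b in zip(out_a, out_b)]  # noise cancels
    noise_out = [(a - b) / 2 for a, b in zip(out_a, out_b)]   # speech cancels
    return 10 * math.log10(mean_power(speech_out) / mean_power(noise_out))


def linear_aid(x):
    """Hypothetical 'hearing aid': a pure gain with no directionality."""
    return [3.0 * v for v in x]


# Hypothetical signals: speech power 1.0, noise power 0.01 (input SNR +20 dB)
speech = [1.0, -1.0] * 50
noise = [0.1, 0.1, -0.1, -0.1] * 25
print(round(phase_inversion_snr(linear_aid, speech, noise), 6))  # 20.0
```

With a pure gain the output SNR equals the input SNR; a directional or split-processing aid that attenuates rear noise more than frontal speech would yield a higher output SNR.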

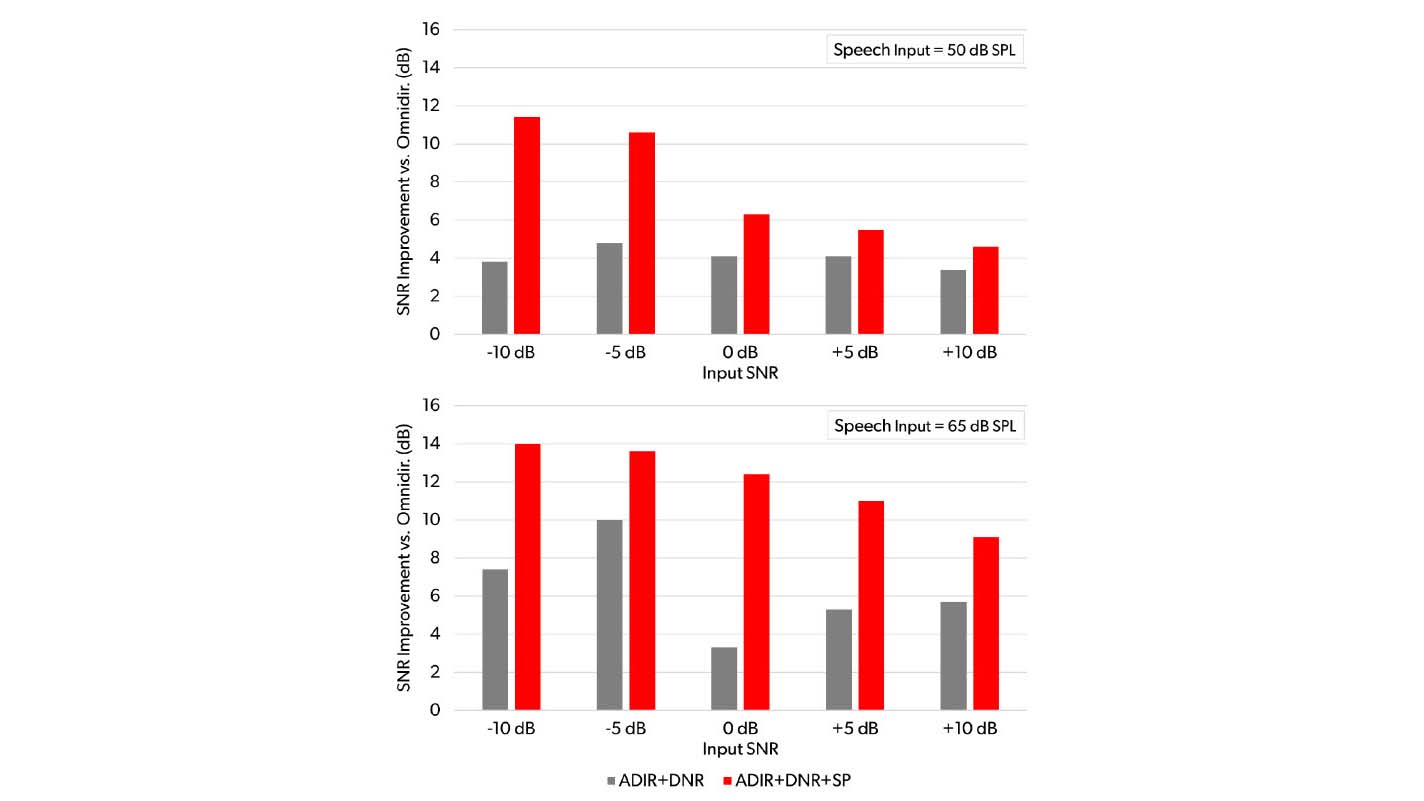

Measurements were carried out for three programming conditions of the Signia AX product: a) omnidirectional without digital noise reduction (DNR), b) adaptive directional (ADIR) with DNR, and c) adaptive directional, DNR and split processing (fully functional Augmented Focus). In all three conditions, the hearing aids were programmed to NAL-NL2 fitting targets for the same common downward-sloping hearing loss. The speech signal was presented at different input levels, and output SNRs, weighted by speech importance across frequency bands, were calculated for input SNRs of -10, -5, 0, +5 and +10 dB (see Korhonen et al.3 for a complete description of the procedures).
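The frequency weighting can be illustrated with a short calculation: the output SNR in each band is multiplied by that band's importance for speech, and the weighted values are averaged. The sketch below assumes the Speech Intelligibility Index convention (ANSI S3.5-1997) of limiting band SNRs to ±15 dB; whether Korhonen et al.3 used exactly this rule is an assumption, and the three-band importance weights are hypothetical placeholders rather than standardized values. The improvement plotted in Figure 1 then corresponds to the weighted SNR for a given setting minus the weighted SNR for omnidirectional processing.

```python
def weighted_snr(band_snrs_db, importance, clip_db=15.0):
    """Importance-weighted average of per-band SNRs (dB).

    Band SNRs are clipped to +/-clip_db (SII-style); pass clip_db=None to disable.
    """
    acc = 0.0
    for snr, weight in zip(band_snrs_db, importance):
        if clip_db is not None:
            snr = max(-clip_db, min(clip_db, snr))
        acc += weight * snr
    return acc / sum(importance)  # normalize in case weights do not sum to 1


def snr_benefit(setting_bands_db, omni_bands_db, importance):
    """SNR improvement (dB) of a processing setting relative to omnidirectional."""
    return weighted_snr(setting_bands_db, importance) - weighted_snr(omni_bands_db, importance)


# Hypothetical three-band example (low, mid, high frequencies)
weights = [0.25, 0.25, 0.5]
print(weighted_snr([0, 10, 20], weights))               # 10.0 (20 dB band clipped to 15)
print(snr_benefit([4, 14, 24], [0, 10, 20], weights))   # 2.0
```

The clipping is why a uniform 4 dB improvement in every band yields only 2 dB of weighted benefit here: gains in a band that is already above +15 dB do not count.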

The results of the output SNR calculations are shown in Figure 1, which displays the SNR improvement for the ADIR+DNR and ADIR+DNR+split processing (SP) settings relative to the omnidirectional baseline. Observe that when the speech input signal was 50 dB SPL (Figure 1, upper panel), the advantage for ADIR+DNR processing was ~4 dB at all five input SNRs. The advantage for ADIR+DNR+SP was only slightly larger at the more favorable input SNRs, but reached a substantial 10-11 dB at input SNRs of -5 and -10 dB.

When the input speech signal was increased to 65 dB SPL (Figure 1, lower panel), the split processing advantage (compared to omni) increased considerably, with a benefit of at least 9 dB at all input SNRs tested. Note that for the SNRs commonly encountered in the real world, 0 dB and +5 dB, adding split processing increased the SNR benefit by ~6 to 9 dB compared with ADIR+DNR without split processing.

Figure 1. Improvement in the calculated output SNR for the ADIR+DNR condition and the ADIR+DNR+Split Processing (SP) condition, compared to omnidirectional processing. Results are shown for two speech input levels, 50 dB SPL and 65 dB SPL (upper and lower panel), and five input SNRs, -10 to +10 dB.

Electrophysiology Evidence

As part of a complete assessment of new hearing aid technology, it is of interest to conduct electrophysiological studies to determine whether the new technology indeed improves how sounds are processed by the auditory centers of the brain. For example, when Signia bilateral beamforming technology was introduced in 2017, studies measuring the electroencephalogram (EEG) revealed that this processing significantly reduced listening effort.5 It was of interest, therefore, to determine whether EEG studies could also demonstrate the effectiveness of split processing. Further, electrophysiological measures are useful because they directly reflect neural processing suggestive of increased acoustic challenge and are therefore more “objective” than listeners' retrospective self-reports.

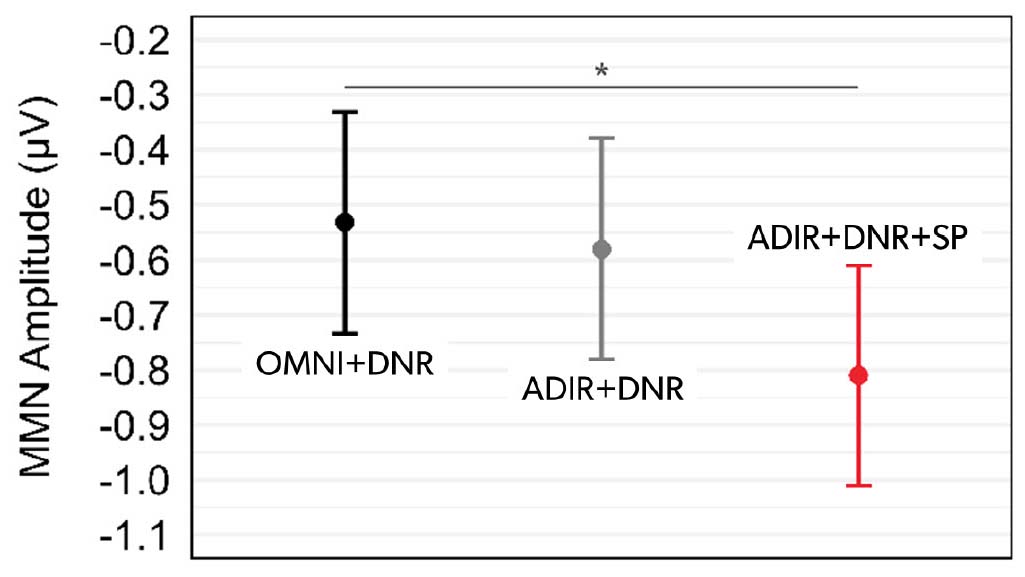

In the first study, the measure used was the auditory mismatch negativity, or MMN.6 The MMN is an evoked response that appears in EEG recordings as a brief negative deflection in amplitude following a sound that deviates from an established pattern of repetition.

Importantly, the MMN is a passive measure that does not require any active response from the participant. In essence, the larger the negative (downward) deflection of the waveform, the more strongly the brain is automatically detecting a change in the listener's soundscape. The MMN typically is studied using an “oddball paradigm,” in which a low-probability sound is irregularly interspersed among a series of highly repetitive sounds; the MMN provides evidence that the brain automatically detects the difference between the infrequent and the repetitive sounds.

Fourteen participants with mild-to-moderate downward-sloping hearing losses were fitted bilaterally with Signia AX hearing aids programmed to NAL-NL2 prescriptive targets. Measurements were carried out for three programming conditions of the Signia AX product: a) omnidirectional (OMNI) with DNR, b) adaptive directional (ADIR) with DNR, and c) ADIR with DNR and split processing (SP; fully functional Augmented Focus).

The speech stimuli were recordings of bisyllabic speech (/ba-ba/ and /ba-da/) produced by a native English-speaking male talker and presented at 65 dB SPL from a loudspeaker in front of the listener. Simultaneously, temporally offset recordings of four-talker babble were played through each of two loudspeakers located behind the listener at 135° and 225°. To establish an individualized SNR, listeners in preliminary testing were presented with pairs of stimuli and asked to indicate whether they were the same (e.g., /ba-ba/ and /ba-ba/) or different (e.g., /ba-ba/ and /ba-da/). The babble level was varied adaptively to find each listener's discrimination threshold, and this individualized SNR was then used for the MMN measures.
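The adaptive search for each listener's individualized SNR can be sketched as a simple staircase. The study does not specify its tracking rule, so the example below assumes a common 2-down/1-up rule (which converges near 70.7% correct), a fixed 2 dB step, and a threshold taken as the mean of the last few reversals; these choices and the function name `track_snr` are hypothetical. Because the speech was fixed at 65 dB SPL, raising the babble level by 2 dB is equivalent to lowering the SNR by 2 dB.

```python
def track_snr(respond, start_snr=10.0, step_db=2.0, n_reversals=8, n_average=4, max_trials=500):
    """2-down/1-up adaptive track on SNR (dB); returns the threshold estimate.

    respond(snr) -> True if the listener answered the same/different trial correctly.
    """
    snr = start_snr
    run = 0          # consecutive correct answers at the current SNR
    direction = 0    # -1: SNR falling (harder), +1: SNR rising (easier)
    reversals = []
    for _ in range(max_trials):
        if respond(snr):
            run += 1
            if run < 2:
                continue
            run = 0
            if direction == +1:
                reversals.append(snr)  # turned from easier to harder
            direction = -1
            snr -= step_db             # more babble, lower SNR
        else:
            run = 0
            if direction == -1:
                reversals.append(snr)  # turned from harder to easier
            direction = +1
            snr += step_db             # less babble, higher SNR
        if len(reversals) >= n_reversals:
            break
    if not reversals:
        raise RuntimeError("track ended without any reversals")
    last = reversals[-n_average:]
    return sum(last) / len(last)


# Hypothetical listener who is always correct at SNRs of -3 dB or better
print(track_snr(lambda snr: snr >= -3.0))  # -3.0
```

Averaging an even number of reversals balances the up and down turning points, which is why the deterministic listener above yields exactly its threshold.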

The MMN was evoked by the same phonetic contrast (/ba-ba/ vs /ba-da/) presented in the same noise at the SNR associated with each listener’s discrimination threshold for the split processing condition. Listeners were presented with a sequence of 800 bisyllabic speech tokens, of which 85% were standard (/ba-ba/) and 15% were deviant (/ba-da/). The order of standards and deviants was pseudo-randomized.
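A token order like the one described above, 800 tokens with 85% standards and 15% deviants, can be generated as in the sketch below. The paper states only that the order was pseudo-randomized; the constraints used here (no two deviants in a row, and at least three standards before the first deviant) are common in oddball studies but are assumptions, as is the function name `oddball_sequence`.

```python
import random

STANDARD, DEVIANT = "/ba-ba/", "/ba-da/"


def oddball_sequence(n_tokens=800, p_deviant=0.15, lead_standards=3, seed=None):
    """Pseudo-random oddball order with no two deviants in a row (assumed constraint)."""
    rng = random.Random(seed)
    n_dev = round(n_tokens * p_deviant)
    n_std = n_tokens - n_dev
    # Gap g is the position after g standards; each gap holds at most one deviant,
    # so consecutive deviants are always separated by at least one standard.
    gaps = set(rng.sample(range(lead_standards, n_std + 1), n_dev))
    sequence = []
    for g in range(n_std + 1):
        if g in gaps:
            sequence.append(DEVIANT)
        if g < n_std:
            sequence.append(STANDARD)
    return sequence


seq = oddball_sequence(seed=2021)
print(len(seq), seq.count(DEVIANT))  # 800 120
```

Placing deviants into gaps between standards, rather than shuffling and rejecting, guarantees the constraint in a single pass; with 120 deviants among 800 tokens, an unconstrained shuffle would almost always contain adjacent deviants.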

For each participant, the MMN amplitude was quantified as the largest negative peak occurring after the second syllable in the difference wave, calculated by subtracting the response to the standard from the response to the deviant. Statistical analysis revealed that hearing aid condition had a significant effect on MMN amplitude: amplitudes were enhanced (i.e., more negative) when split processing was active in the ADIR+DNR+SP mode, compared to the two conditions without split processing (see Figure 2; for more detailed results see Slugocki et al.6). These results suggest that split processing enhances contrasts between sounds in the wearer's soundscape compared to other hearing aid processing schemes, an important contribution to helping Signia AX wearers understand speech in adverse listening situations.
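The quantification step can be written out explicitly: average the epochs for each token type, subtract the standard average from the deviant average, and take the most negative point in a search window after the second syllable. In the sketch below, the sampling rate, the epoch layout (time zero at the start of each epoch) and the window limits are hypothetical parameters; Slugocki et al.6 give the actual analysis settings.

```python
def average(epochs):
    """Point-by-point mean across equally long epochs."""
    return [sum(samples) / len(epochs) for samples in zip(*epochs)]


def mmn_peak(standard_epochs, deviant_epochs, fs_hz, win_start_ms, win_end_ms):
    """Return (amplitude, latency_ms) of the most negative point of the
    deviant-minus-standard difference wave inside the search window."""
    diff = [d - s for d, s in zip(average(deviant_epochs), average(standard_epochs))]
    i0 = round(win_start_ms * fs_hz / 1000)
    i1 = round(win_end_ms * fs_hz / 1000)
    window = diff[i0:i1 + 1]
    k = min(range(len(window)), key=window.__getitem__)
    return window[k], (i0 + k) * 1000 / fs_hz


# Hypothetical 500-sample epochs at 1000 Hz; search window 250-450 ms
standards = [[0.0] * 500, [0.0] * 500]
deviant = [0.0] * 500
deviant[100] = -5.0  # early dip outside the window is ignored
deviant[300] = -2.0  # dip inside the window
print(mmn_peak(standards, [deviant, deviant], 1000, 250, 450))  # (-2.0, 300.0)
```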

Alpha EEG Activity

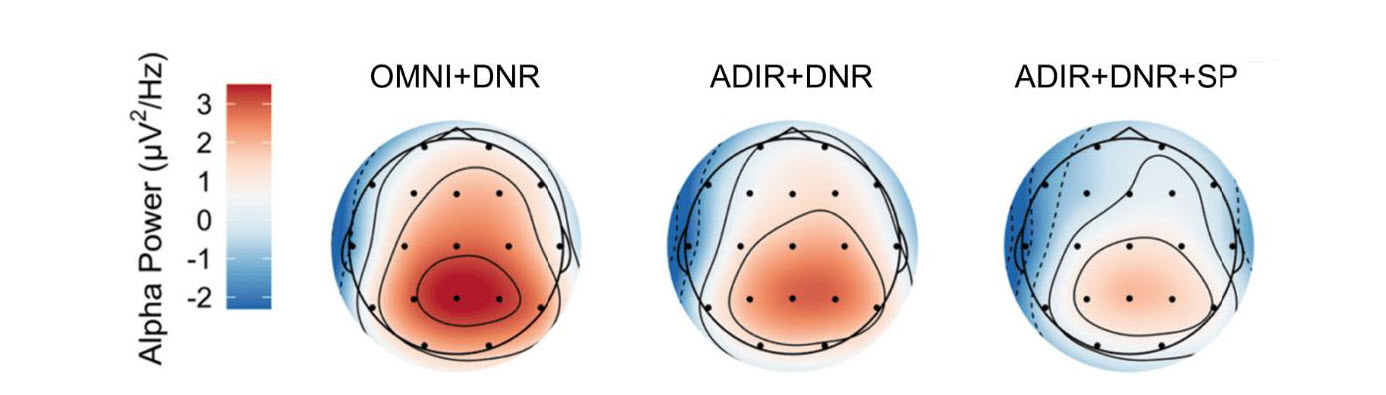

A second EEG study evaluated the efficacy of split processing by measuring synchronous “alpha” activity over the parietal lobe of the brain as an index of listening effort. Listening effort, generally defined as the allocation of cognitive resources to overcome the adverse effects of background noise, has been studied extensively in hearing aid research.

Recently, EEG alpha-wave activity has attracted interest among researchers as a proxy measure of listening effort. Specifically, increased alpha power (synchronization) has been correlated with the difficulty of speech tasks and with pupil dilation when listening to speech in noise at different SNRs.7 These findings have led researchers to suggest that synchronization in the alpha band reflects inhibitory mechanisms that suppress task-irrelevant stimuli, such as visual stimuli or competing noise.8

Eighteen older adults participated in this study.9 All were native English speakers with symmetrical sensorineural hearing loss and normal cognitive function, and 15 of the 18 were current hearing aid owners. Alpha activity was measured while aided listeners performed a modified version of the Repeat-Recall Test (RRT), a speech-in-noise test used in research. On each RRT trial, listeners heard six sentences constructed at a 4th-grade reading level and were instructed to repeat each sentence exactly as they had heard it, to the best of their ability. Sentence materials were presented at 65 dB SPL from a loudspeaker located directly in front (0° azimuth).

Simultaneous continuous noise was presented from two loudspeakers located behind the listener at 135° and 225° at one of three SNRs: -5, 0, or +5 dB. The SNR remained fixed at one level for each trial of six sentences. In addition, ongoing two-talker babble noise was presented from four loudspeakers (45°, 135°, 225°, 315°) at 45 dB SPL. The test hearing aid settings were the same as in the MMN study mentioned above.

Listener EEG was recorded during the RRT using 19 sintered Ag/AgCl electrodes placed on the scalp. Alpha activity was quantified as power in the EEG spectrum between 8 and 12 Hz. Figure 3 shows the average scalp topography of listeners' alpha activity across all SNRs for each of the three hearing aid conditions. Average alpha power measured over parietal (mid-to-back of head) electrodes, shown by the red shading, was highest when listeners were tested in the OMNI+DNR condition and lowest when they were tested in the Augmented Focus (split processing) condition.

Figure 3. Average scalp topography of alpha activity measured in three different hearing aid conditions. Greater alpha activity is represented by deeper red shading. Black dots represent the placement of 19 electrodes used to record listener EEG.
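Power in the 8-12 Hz band can be estimated from the discrete Fourier transform of an EEG segment. The pure-Python sketch below computes a one-sided periodogram restricted to the alpha band; it omits the windowing, artifact rejection, epoch averaging and averaging over parietal electrodes that a real analysis would include, and the function name `alpha_power` and the sampling rate are hypothetical.

```python
import cmath
import math


def alpha_power(x, fs_hz, f_lo=8.0, f_hi=12.0):
    """One-sided periodogram power of x in the [f_lo, f_hi] Hz band."""
    n = len(x)
    mean = sum(x) / n
    x = [v - mean for v in x]                   # remove DC offset
    power = 0.0
    for k in range(1, (n + 1) // 2):            # positive-frequency bins below Nyquist
        if f_lo <= k * fs_hz / n <= f_hi:
            xk = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            power += 2 * abs(xk) ** 2 / n ** 2  # factor 2 folds in negative frequencies
    return power


fs = 128  # hypothetical sampling rate; one second of data gives 1 Hz bins
ten_hz = [math.sin(2 * math.pi * 10 * t / fs) for t in range(fs)]
print(round(alpha_power(ten_hz, fs), 6))  # 0.5
```

A unit-amplitude 10 Hz sine yields its mean square (0.5) as alpha power, while a 20 Hz sine yields essentially zero, so the measure responds only to activity inside the alpha band.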

Differences in EEG alpha power between these hearing aid conditions suggest that Augmented Focus will lead to less effortful listening across a range of SNRs typical of difficult listening environments. The results of this study demonstrate, physiologically, how Signia AX's split processing reduces listening effort in adverse conditions.

Noise Tolerance

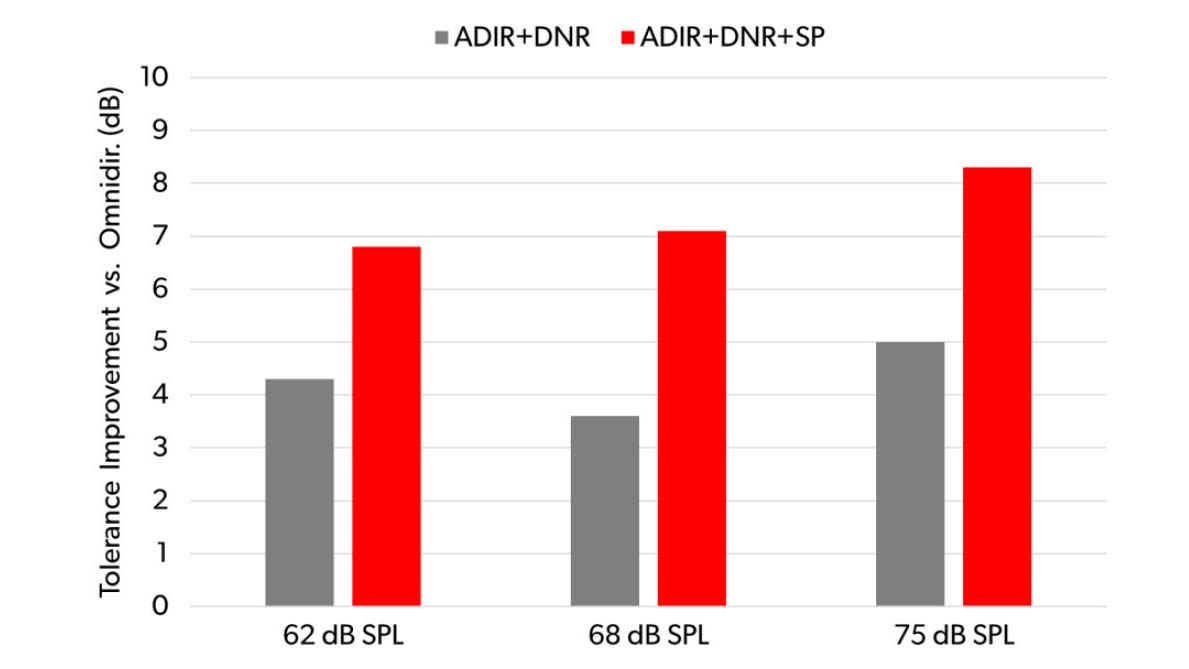

It is well established that tolerance of background noise varies substantially among wearers and that it can affect hearing aid use, benefit and satisfaction.10 For the past few decades, the measure used to assess this has been the acceptable noise level (ANL) test, which determines the lowest SNR the wearer will accept for speech presented at the most comfortable listening level. Research using the ANL test as the metric has revealed that directional technology and some hearing aid noise reduction schemes can effectively improve the ANL, that is, reduce the SNR that the wearer finds acceptable. A study, therefore, was designed to determine how the new Signia split processing would affect noise tolerance.11
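For reference, the ANL is conventionally computed from two levels set by the listener: the most comfortable listening level for speech (MCL) and the highest background noise level the listener is willing to accept while following the speech (BNL). The values in the worked example are hypothetical.

```latex
\mathrm{ANL} = \mathrm{MCL} - \mathrm{BNL}
\qquad\text{e.g.}\qquad
\mathrm{MCL} = 63~\text{dB HL},\;
\mathrm{BNL} = 55~\text{dB HL}
\;\Rightarrow\;
\mathrm{ANL} = 8~\text{dB}
```

A lower ANL means the listener accepts more noise relative to the speech, so a technology that lowers the ANL has improved noise tolerance.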

This study used the multi-level Tracking of Noise Tolerance (TNT) test12 to compare Augmented Focus (split processing) with a) omnidirectional processing with DNR and b) adaptive directional processing with DNR. The participants all had bilateral, downward-sloping, mild-to-moderately-severe hearing losses, and most were experienced hearing aid wearers. The hearing aids were programmed for each individual to the NAL-NL2 prescriptive fitting method.

The TNT test used speech passages on different topics, read by a male talker and presented from the front of the listener at different input levels. The background signal, presented from behind the listener, was an ICRA continuous speech-shaped noise normalized to have the same peak RMS as the speech passages. For a given trial, the TNT test software presented a speech passage at one constant level. The initial noise level was set 10 dB below the speech level, and during the two-minute trial the listener adaptively controlled the ongoing noise level. The participants were instructed to “monitor the noise and maintain the loudest noise level you can put up with while still understanding 90% of the words in the story.” For a complete review of the TNT procedures, see Seper et al.12
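One way to summarize a TNT trial is as an acceptable SNR: the fixed speech level minus the average noise level the listener maintained. The sketch below assumes that scoring rule, averages the tracked levels in dB, and optionally discards the start of the track while the listener is still converging; these choices and the function names are hypothetical, and Seper et al.12 describe the actual scoring. Because a lower acceptable SNR means more noise is tolerated, the advantage of a processing condition is the reference (OMNI+DNR) value minus the condition's value.

```python
def tnt_snr(speech_level_db, noise_track_db, discard=0):
    """Acceptable SNR (dB): speech level minus the mean listener-set noise level.

    The first `discard` samples of the noise track are ignored (assumed settling period).
    """
    kept = noise_track_db[discard:]
    return speech_level_db - sum(kept) / len(kept)


def tolerance_advantage(reference_snr_db, condition_snr_db):
    """Positive when the condition lets the listener accept a lower SNR than the reference."""
    return reference_snr_db - condition_snr_db


# Hypothetical trial: speech at 75 dB SPL, noise starts 10 dB lower and is raised by the listener
track = [65, 67, 69, 70, 70, 70]
print(tnt_snr(75, track, discard=2))  # 5.25
```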

The results of the TNT testing for the three hearing aid conditions are shown in Figure 4, which displays the mean acceptable noise level advantages (in dB) for the ADIR+DNR condition and the ADIR+DNR+SP condition (fully functional Augmented Focus). The ~4-5 dB advantage for the ADIR+DNR condition is consistent with previous research for noise originating from behind the listener.10 When split processing was added, however, the advantage increased significantly by a further ~3 dB, to 7-8 dB over OMNI+DNR. An improvement in noise tolerance of this magnitude could have a significant impact on hearing aid acceptance, especially for new hearing aid wearers, for whom newly audible background noise often is a deterrent to hearing aid use.

Figure 4. Mean acceptable noise level improvement (in dB) at three speech input levels for ADIR+DNR and ADIR+DNR+SP processing, relative to the baseline obtained with OMNI+DNR processing.

Lab-based Speech Understanding in Background Noise

To this point, we have reviewed research evidence showing that Signia's split processing improves the electroacoustically measured output SNR in the ear canal, enhances brain responses to sound and significantly increases noise acceptance. All of this suggests that speech understanding in background noise should also improve.

In laboratory testing, a restaurant situation was simulated, and individuals with hearing loss repeated sentences in background noise to determine the SNR at which 80% correct performance was obtained (SRT-80).2 The participants were surrounded by the noise, with target sentences presented from the front. At varying intervals, recorded laughter at 76 dBA was presented from one of three randomly selected loudspeakers located behind the participant (135°, 180° or 215°). A few seconds after the onset of the laughter, the target sentence was delivered. After each sentence, the laughter paused until it was presented again (from a different loudspeaker) to coincide with the next sentence.
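If percent-correct scores are available at several SNRs, the SNR corresponding to 80% correct can be estimated by linear interpolation between the two measured points that bracket 80%. The paper does not say how the SRT-80 was obtained (adaptive tracking is also common), so this sketch, its function name `srt` and the example scores are hypothetical.

```python
def srt(target, snrs_db, scores):
    """SNR (dB) at which the proportion correct reaches `target`, by linear interpolation."""
    points = sorted(zip(snrs_db, scores))
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if y0 <= target <= y1 and y1 > y0:
            return x0 + (target - y0) * (x1 - x0) / (y1 - y0)
    raise ValueError("target score is not bracketed by the measured points")


# Hypothetical scores (proportion correct) at four SNRs
print(round(srt(0.80, [-6, -3, 0, 3], [0.20, 0.50, 0.70, 0.90]), 3))  # 1.5
```

A lower SRT-80 is better, so the benefit of a feature is the SRT-80 with the feature off minus the SRT-80 with it on.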

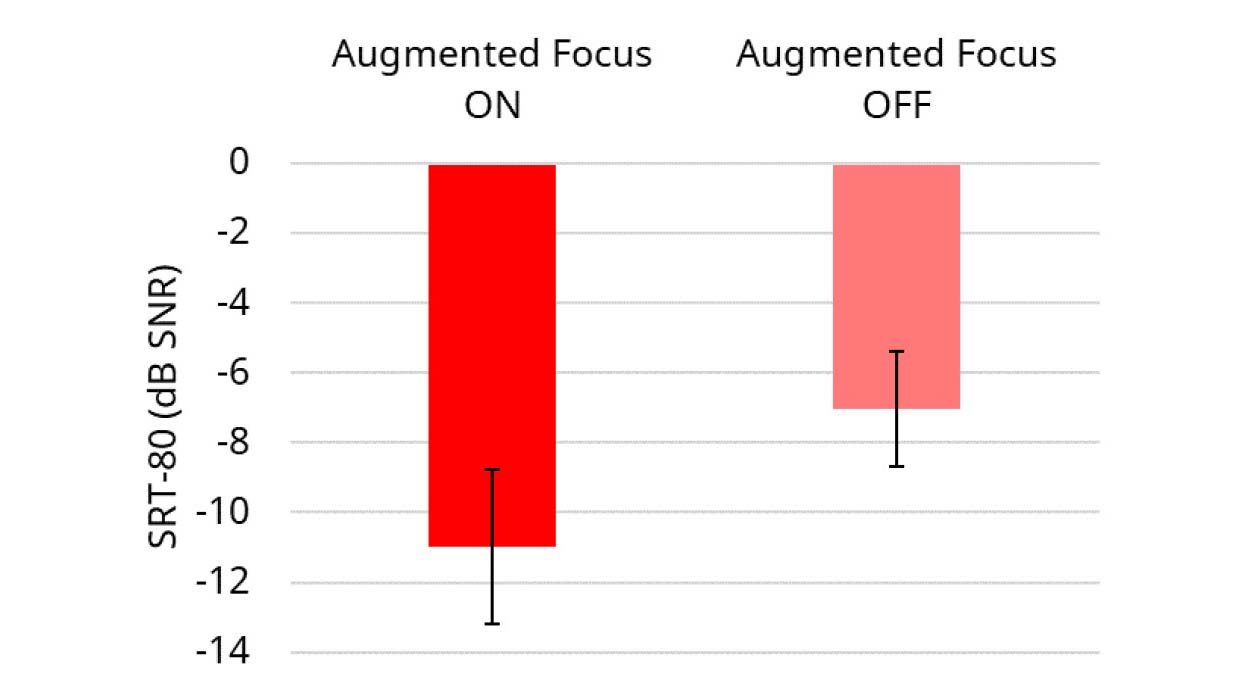

All participants were fitted with Signia AX programmed to NAL-NL2 in two conditions: ADIR+DNR (Augmented Focus “Off”) and ADIR+DNR+split processing (Augmented Focus “On”). The results for “On” vs. “Off” are shown in Figure 5. Observe that adding the split processing feature provided a significant benefit for speech recognition, improving the SRT-80 by 3.9 dB SNR. Depending on the speech material and listening situation, an SNR improvement of this magnitude can improve speech recognition by 40% or more.10 Importantly, this is in addition to the advantages of Signia's directional processing, which was active in the “Off” condition.

Figure 5. Mean speech recognition thresholds (SRT-80) with the new split processing feature “On” vs. “Off,” measured in the Restaurant Scenario. Error bars represent 95% confidence intervals.
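The link between an SNR improvement and a change in percent correct comes from the slope of the psychometric function. The sketch below models that function as a logistic curve; the 10%-per-dB midpoint slope and the 0 dB 50% point are hypothetical values, since real slopes depend on the speech material. Under these assumptions, a 3.9 dB shift changes the score near the midpoint by roughly 37 percentage points, and steeper functions give larger changes.

```python
import math


def proportion_correct(snr_db, srt50_db, slope_per_db):
    """Logistic psychometric function; slope_per_db is the slope at the 50% point."""
    k = 4.0 * slope_per_db  # a logistic with steepness k has slope k/4 at its midpoint
    return 1.0 / (1.0 + math.exp(-k * (snr_db - srt50_db)))


def score_gain(test_snr_db, srt50_db, slope_per_db, snr_benefit_db):
    """Change in proportion correct at a fixed test SNR when processing adds snr_benefit_db."""
    aided = proportion_correct(test_snr_db + snr_benefit_db, srt50_db, slope_per_db)
    baseline = proportion_correct(test_snr_db, srt50_db, slope_per_db)
    return aided - baseline


# Hypothetical: 50% point at 0 dB SNR, 10%/dB slope, test SNR centred on the curve
print(round(score_gain(-1.95, 0.0, 0.10, 3.9), 3))  # 0.371
```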

In the same study, the new Signia split processing, with Augmented Focus fully activated, was compared to two leading competitors with all of the competitors' directionality and noise reduction features activated.2 The same restaurant simulation was used. The resulting mean SRT-80 for Signia was 2.1 dB SNR better than for Brand A and 7.3 dB SNR better than for Brand B. Importantly, all products were programmed to NAL-NL2 targets, verified with real-ear measures, to ensure that the observed differences were due to speech-in-noise processing and not to differences in programmed gain and output.

Real World Field Study

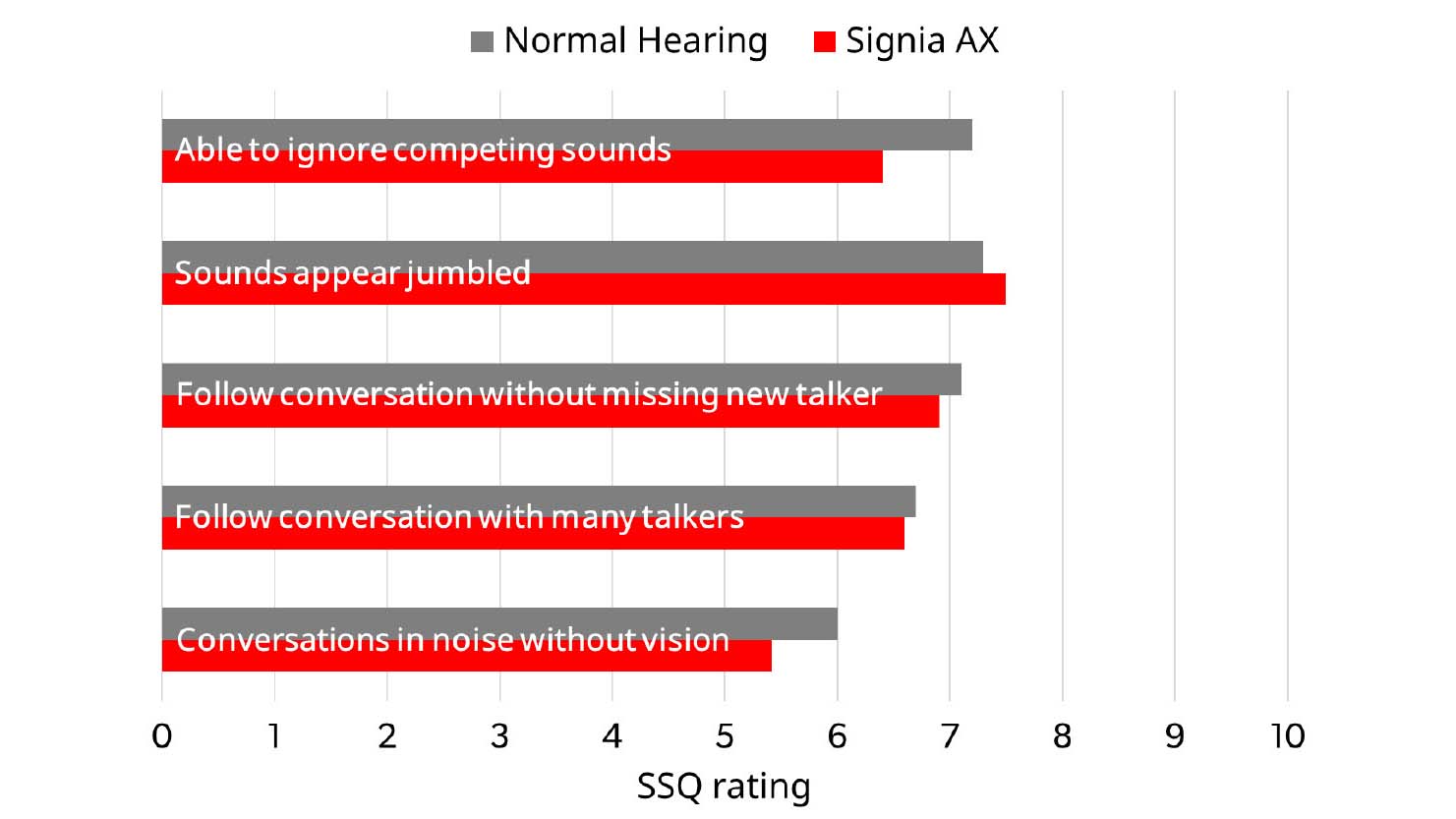

Up to this point, we have reviewed research and laboratory evidence supporting the efficacy of the split processing algorithm, but an assessment of this product would not be complete without examining its effectiveness in the real world.10 In this study,13 37 participants used Signia AX hearing aids for several weeks and, at the end of the trial, completed questions from the Speech, Spatial and Qualities of Hearing self-assessment scale (SSQ).14 This scale uses a 0-10 rating for various listening situations involving speech understanding, sound quality and localization. What makes this scale especially useful is that normative data are available for older adults with normal hearing,15 a close age match to the participants in the current study. In this way, it is possible to determine whether the new Augmented Focus technology helps hearing aid wearers accomplish their ultimate goal: to hear like someone with normal hearing.

Figure 6 shows the mean ratings from the study participants for selected SSQ questions involving listening in adverse conditions, compared with those of the normal-hearing group. Observe that in all cases, performance with Signia split processing was either equal to, or only slightly below, that of the normal-hearing group.

Figure 6. Mean participant ratings when using hearing aids with Augmented Focus processing, shown for selected SSQ questions involving speech understanding in background noise. SSQ norms for older individuals with normal hearing are displayed for direct comparison. For all items, higher ratings indicate better performance.

Plethora of Evidence Supports the Split Processing Advantage

When wearers want to communicate in adverse listening conditions, they often demand both better listening comfort and improved speech intelligibility from their hearing aids. Over the past two decades, wearers have relied on a combination of adaptive directional microphones (ADIR) and digital noise reduction (DNR) algorithms to improve speech understanding and/or listening comfort in these situations. Signia's split processing provides additional benefit in noise compared with these traditional features. This white paper summarizes a plethora of evidence from benchtop, electrophysiologic, lab-based and real-world studies supporting the efficacy and effectiveness of split processing. Collectively, these studies demonstrate a clear and consistent advantage for Signia AX's split processing over traditional forms of noise reduction technology.

Extending the Success of AX with New Features

Recently, Signia expanded the AX platform with three new features.

The three new features, available to both new and current Signia AX wearers, are all designed to enhance the hearing aid experience while fulfilling Signia's goal of empowering wearers with outstanding speech understanding in complex listening situations:

- Own Voice Processing 2.0 harnesses the split processing capability of the AX platform to process the patient's voice separately from simultaneous ambient sounds, for a more natural-sounding own voice. A recent study indicated that 88% of participants were satisfied with the sound quality of their own voice at the initial fitting of Signia AX with Own Voice Processing 2.0.16

- Auto EchoShield analyzes the listening environment and automatically adjusts to reduce bothersome echoes and reverberation, helping patients better understand speech in reverberant environments such as large auditoriums or even houses with tiled floors and high ceilings. The same study indicated that Signia AX with Auto EchoShield offers 30% less perceived reverberation than traditional processing and 27% less perceived reverberation than a main competitor.16

- The Signia HandsFree feature enables patients with select iOS devices to hold conversations through their Signia AX hearing aids without having to speak directly into their phone or tablet, with exceptional speech clarity for both the wearer and their conversation partner.

Given the abundance of clinical evidence, hearing care professionals can be confident that, when recommending technology to address wearers' chief priority, improved hearing in background noise,17 Signia AX with Augmented Focus™ will lead to the most efficient wearer outcomes.

References

- Best S., Serman M., Taylor B. & Høydal E. 2021. Augmented Focus. Signia Backgrounder. Retrieved from www.signia-library.com.

- Jensen N.S., Høydal E.H., Branda E. & Weber J. 2021. Augmenting speech recognition with a new split-processing paradigm. Hearing Review, 28(6): 24-27.

- Korhonen P., Slugocki C. & Taylor B. 2022. Changes in hearing aid output levels and signal-to-noise ratios with noise reduction, directional microphone, and split processing. AudiologyOnline, Article 28224. Retrieved from www.audiologyonline.com.

- Hagerman B. & Olofsson A. 2004. A method to measure the effect of noise reduction algorithms using simultaneous speech and noise. Acta Acustica, 90(2): 356-361.

- Littmann V., Wu Y.H., Froehlich M. & Powers T.A. 2017. Multi-center evidence of reduced listening effort using new hearing aid technology. Hearing Review, 24(2): 32-34.

- Slugocki C., Kuk F., Korhonen P. & Ruperto N. 2021. Using the Mismatch Negativity (MMN) to Evaluate Split Processing in Hearing Aids. Hearing Review, 28(10): 32-34.

- McMahon C.M., Boisvert I., de Lissa P., et al. 2016. Monitoring alpha oscillations and pupil dilation across a performance-intensity function. Frontiers in Psychology, 7: 745.

- Dimitrijevic A., Smith M.L., Kadis D.S. & Moore D.R. 2019. Neural indices of listening effort in noisy environments. Scientific Reports, 9(1): 1-10.

- Slugocki C. & Korhonen P. 2022. Split-processing in hearing aids reduces neural signatures of speech-in-noise listening effort. Hearing Review, 29(4): 20-23.

- Mueller H.G., Ricketts T.A. & Bentler R. 2014. Modern Hearing Aids: Pre-Fitting Testing. San Diego: Plural Publishing.

- Kuk F., Slugocki C., Davis-Ruperto N. & Korhonen P. 2022. Measuring the effect of adaptive directionality and split processing on noise acceptance at multiple input levels. International Journal of Audiology, 11: 1-9.

- Seper E., Kuk F., Korhonen P. & Slugocki C. 2019. Tracking of Noise Tolerance to Predict Hearing Aid Satisfaction in Loud Noisy Environments. Journal of the American Academy of Audiology, 30(4): 302-314.

- Jensen N., Powers L., Haag S., Lantin P. & Høydal E. 2021. Enhancing real-world listening and quality of life with new split-processing technology. AudiologyOnline, Article 27929. Retrieved from www.audiologyonline.com.

- Gatehouse S. & Noble W. 2004. The Speech, Spatial and Qualities of Hearing Scale (SSQ). International Journal of Audiology, 43(2): 85-99.

- Banh J., Singh G. & Pichora-Fuller M.K. 2012. Age affects responses on the Speech, Spatial, and Qualities of Hearing Scale (SSQ) by adults with minimal audiometric loss. Journal of the American Academy of Audiology, 23(2): 81-91.

- Jensen N.S., Pischel C. & Taylor B. 2022. Upgrading the performance of Signia AX with Auto EchoShield and Own Voice Processing 2.0. Signia White paper. Retrieved from www.signia-library.com.

- Manchaiah V., Picou E.M., Bailey A. & Rodrigo H. 2021. Consumer Ratings of the Most Desirable Hearing Aid Attributes. Journal of the American Academy of Audiology, 32(8): 537-546.