YourSound Technology and Dynamic Soundscape Processing

Matthias Froehlich, PhD

Although patient satisfaction with hearing aids has steadily increased over the past two decades to an all-time high of 83%1, there is still room to improve how much hearing aids enhance the day-to-day functional abilities of their wearers. This is especially true in situations where background noise is present: according to one recent survey, better hearing in noisy settings was the number one listening preference of the more than 10,000 adult hearing aid wearers surveyed2. For the past twenty years, the two key features used to improve hearing in noise have been directional microphone technology and digital noise reduction. Both have proven successful in improving speech understanding in background noise.3

Signia has a strong legacy of clinically proven noise reduction technology, including Directional Speech Enhancement (DSE), first introduced commercially in 2012. DSE shared information between two features, adaptive directional microphones and digital noise reduction, to optimize listening comfort and speech understanding in a variety of background noises. Relying on what was at the time an advanced signal classification system, DSE enabled the adaptive directional microphone and digital noise reduction to work together, reducing background noise more effectively based on spatial location rather than signal modulation alone.4

As clinicians have come to expect, hearing aid technology has continued to evolve since DSE was introduced more than seven years ago. In previous Signia hearing devices, the signal classification system applied a predefined amount of directional microphone and noise reduction processing that depended on the absolute noise level of the listening environment. As a result, such traditional noise management systems had limited information about the wearer's listening situation; in particular, they could not adapt to the wearer's listening intent in complex acoustic situations.

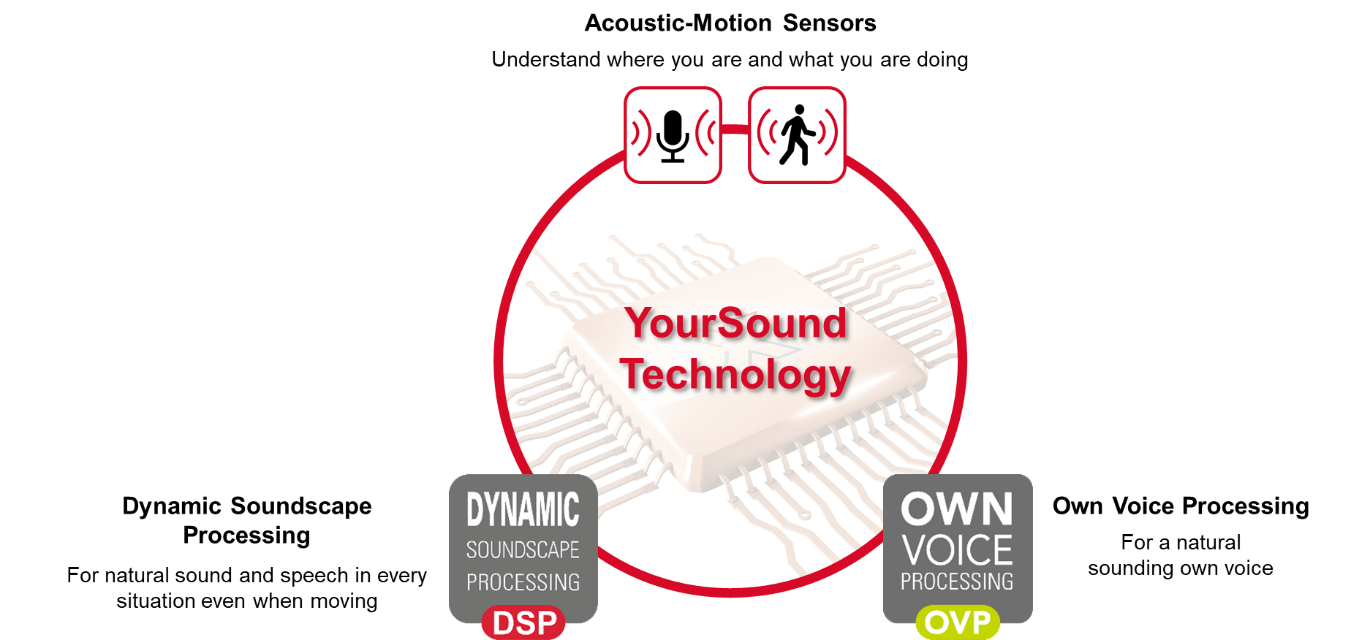

Signia Xperience devices take the next significant step toward “understanding” the wearer's listening intent. YourSound technology is the realization of this comprehensive approach. It consists of a sensory analysis layer provided by the Acoustic-Motion Sensors; Dynamic Soundscape Processing, which processes environmental sounds; and Own Voice Processing, which processes the microphone input as soon as the system detects that the wearer is speaking.

Classification systems drive the decision-making process of any hearing instrument. The classification determines which features, such as noise reduction and directional microphones, are applied in a given acoustic situation. Traditionally, even the most current systems classify the environment into a small set of specific situations (e.g., Speech in Quiet, Noise, Speech in Noise). How strongly noise reduction and directional microphones are then applied depends on the assigned class as well as on the overall sound level.

Sorting the soundscape into buckets in this way has proven effective for managing competing signals in the environment. However, given the broad range of acoustic situations, compromises were sometimes unavoidable and an optimal setting could not always be achieved. For example, some features may act more strongly than a situation requires, leading to unnatural sound and a reduced spatial impression. In another situation, the effect of some features may not be strong enough, leading to insufficient listening support. In short, the “discrete classes approach” provided too limited a basis for the necessary automatic adaptations.
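
To make the limitation concrete, the following is a minimal, hypothetical sketch of a discrete-class scheme. The class names follow the examples given above; the 50 dB boundary and the fixed per-class strengths are illustrative assumptions, not Signia parameters, and real systems also scale the processing with level. The point is the step: two nearly identical scenes on either side of a class boundary receive very different processing.

```python
# Hypothetical discrete-class scheme. The 50 dB boundary and the strengths
# in the lookup table are illustrative assumptions, not product parameters.

QUIET_NOISE_BOUNDARY_DB = 50.0  # assumed class boundary (dB SPL)

# One fixed directional strength per class (0 = omnidirectional, 1 = maximum).
DIRECTIONAL_STRENGTH = {
    "quiet": 0.0,
    "speech_in_quiet": 0.0,
    "noise": 0.5,
    "speech_in_noise": 1.0,
}


def classify(speech_present: bool, noise_level_db: float) -> str:
    """Sort the acoustic scene into one of four coarse buckets."""
    if noise_level_db < QUIET_NOISE_BOUNDARY_DB:
        return "speech_in_quiet" if speech_present else "quiet"
    return "speech_in_noise" if speech_present else "noise"


def directional_strength(speech_present: bool, noise_level_db: float) -> float:
    """Look up the fixed strength assigned to the scene's class."""
    return DIRECTIONAL_STRENGTH[classify(speech_present, noise_level_db)]


# A 0.2 dB change in noise level flips the class and the processing.
print(directional_strength(True, 49.9))  # 0.0
print(directional_strength(True, 50.1))  # 1.0
```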

For this reason, YourSound technology replaces discrete acoustic classification with a comprehensive, high-dimensional analysis system designed to provide the best sound in any listening situation. The goal is still to maintain the best possible speech intelligibility in every situation, while also providing the listener with the environmental cues that are critical for staying aware of the soundscape. The analysis is based on an array of acoustic descriptors and motion information, specifically designed to give the most accurate machine understanding of any given acoustic situation and of the hearing aid wearer's behavior in it.

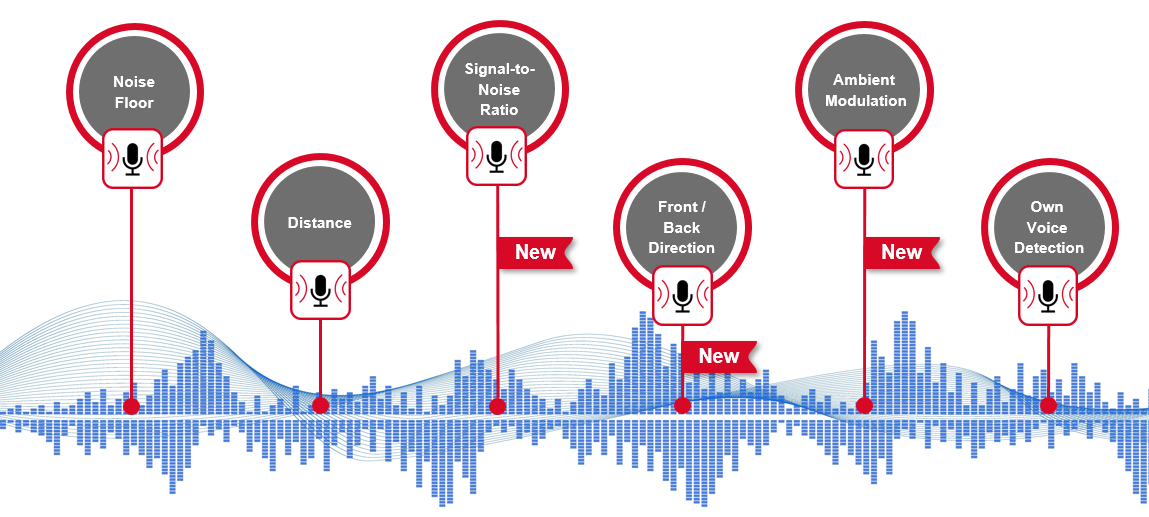

Noise Floor detection determines the overall level of the environmental noise. In a very noisy environment with a high noise floor, features such as directionality and noise reduction need to be more aggressive to attenuate the background noise around the listener and improve the overall listening experience. When the noise floor is low, these features need to act less strongly.
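
A noise floor can be estimated in many ways. As an illustration only, the sketch below computes short-term frame levels and takes a low percentile of them as the floor, so that brief loud events such as speech or clattering dishes do not raise the estimate. The percentile estimator, the frame length, and the 10th-percentile choice are assumptions made for this example; the text does not describe how YourSound measures the noise floor.

```python
import math


def frame_levels_db(samples, frame_len):
    """RMS level of each non-overlapping frame, in dB re a full-scale amplitude of 1.0."""
    levels = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        rms = math.sqrt(sum(x * x for x in frame) / frame_len)
        levels.append(20.0 * math.log10(max(rms, 1e-12)))  # clamp avoids log10(0)
    return levels


def noise_floor_db(levels_db, percentile=10.0):
    """Estimate the noise floor as a low percentile of the frame levels.

    Loud, short events sit in the upper percentiles, so a low percentile
    tracks the steady background underneath them.
    """
    ordered = sorted(levels_db)
    index = min(len(ordered) - 1, int(len(ordered) * percentile / 100.0))
    return ordered[index]


# Ten quiet frames (amplitude 0.01, about -40 dB) plus two loud frames (0.5, about -6 dB).
signal = [0.01] * 100 + [0.5] * 20
levels = frame_levels_db(signal, frame_len=10)
print(round(noise_floor_db(levels), 1))  # -40.0
```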

Distance estimates how far both target speech and environmental sounds are from the wearer. YourSound technology can adjust gain for sounds farther away from the wearer to prevent the perception that they are closer than they are. In this way, YourSound technology will help the wearer maintain spatial awareness of other environmental sounds.
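
Level is one of the cues listeners use to judge distance, which helps explain how amplification can make distant sounds seem closer. The arithmetic below assumes free-field propagation (inverse-square law) and a compressor operating above its knee point for both sources; the 1 m and 4 m distances, the 3:1 compression ratio, and the `restoring_attenuation_db` helper are hypothetical, and real rooms add reverberation that this sketch ignores. It is not a description of how YourSound computes its distance adjustment.

```python
import math


def free_field_level_drop_db(d_near_m: float, d_far_m: float) -> float:
    """Inverse-square law: how much quieter a source is at d_far than at d_near (free field)."""
    return 20.0 * math.log10(d_far_m / d_near_m)


def compressed_difference_db(input_diff_db: float, compression_ratio: float) -> float:
    """Output level difference when both inputs lie above the compressor's knee point."""
    return input_diff_db / compression_ratio


def restoring_attenuation_db(input_diff_db: float, compression_ratio: float) -> float:
    """Hypothetical extra attenuation for the distant source that restores the original difference."""
    return input_diff_db - compressed_difference_db(input_diff_db, compression_ratio)


drop = free_field_level_drop_db(1.0, 4.0)    # about 12.0 dB
after = compressed_difference_db(drop, 3.0)  # about 4.0 dB
print(f"{drop:.1f} dB in, {after:.1f} dB out, "
      f"{restoring_attenuation_db(drop, 3.0):.1f} dB to restore")
# 12.0 dB in, 4.0 dB out, 8.0 dB to restore
```

In this example a 12 dB distance cue shrinks to about 4 dB after compression, which is the kind of distortion a distance-dependent gain adjustment would aim to counteract.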

Signal-to-Noise Ratio (SNR) analysis compares the dominant speech signal with the competing noise in the environment, regardless of the absolute noise level. With a better understanding of the SNR, YourSound technology can determine whether strong directionality and noise suppression are required, as with an adverse SNR, or whether the wearer will do better with less aggressive noise management when the SNR is more favorable. Because the analysis looks at the ratio rather than the noise level alone, even a quiet environment with a poor SNR can trigger stronger directionality, where level-based systems would dismiss it as unnecessary.
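
The level independence of SNR is easy to check numerically. In the sketch below, a quiet room and a loud room have the same speech-to-noise power ratio and therefore the same SNR, so an SNR-driven rule treats them alike where a rule based only on noise level would not. The linear ramp and its +10 dB and -5 dB breakpoints are hypothetical values for illustration, not YourSound settings.

```python
import math

# Hypothetical SNR breakpoints for the sketch (dB): at or above SNR_RELAXED_DB the
# directional strength is 0; at or below SNR_ADVERSE_DB it is 1.
SNR_RELAXED_DB = 10.0
SNR_ADVERSE_DB = -5.0


def snr_db(speech_power: float, noise_power: float) -> float:
    """Signal-to-noise ratio in dB from mean speech and noise powers."""
    return 10.0 * math.log10(speech_power / noise_power)


def directional_strength_from_snr(snr: float) -> float:
    """Linear ramp from 0 (favorable SNR) to 1 (adverse SNR), clamped to [0, 1]."""
    x = (SNR_RELAXED_DB - snr) / (SNR_RELAXED_DB - SNR_ADVERSE_DB)
    return min(1.0, max(0.0, x))


# Same ratio of speech to noise power in a quiet room and in a loud room.
quiet_room = snr_db(speech_power=5e-7, noise_power=1e-6)
loud_room = snr_db(speech_power=5e-3, noise_power=1e-2)
print(round(quiet_room, 1), round(loud_room, 1))            # -3.0 -3.0
print(round(directional_strength_from_snr(quiet_room), 2))  # 0.87
```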

Front/Back Direction analyzes the direction of both speech and noise signals. Knowing where these signals, and especially the various speech sources, come from improves decisions about directional microphone strength while preserving environmental awareness. Speech from the front may call for more aggressive directionality, whereas speech from behind calls for more relaxed directionality to maintain spatial awareness.
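
One textbook way for a two-microphone device to tell front from back is to form two back-to-back cardioid beams and compare their output powers. The sketch below uses the ideal cardioid response to simulate a single talker at a known angle; in a device, the two powers would come from the beamformer outputs instead. The cardioid approach, the 0 dB decision boundary, and the 0.3 relax factor are assumptions made for this sketch, not a description of Signia's Front/Back analysis.

```python
import math


def cardioid_gains(theta_deg: float) -> tuple[float, float]:
    """Ideal front- and back-facing cardioid magnitudes for a plane wave from theta (0 = front)."""
    c = math.cos(math.radians(theta_deg))
    return (1.0 + c) / 2.0, (1.0 - c) / 2.0


def front_back_ratio_db(front_power: float, back_power: float, eps: float = 1e-12) -> float:
    """Positive when the front-facing beam picks up more energy, negative when the back one does."""
    return 10.0 * math.log10((front_power + eps) / (back_power + eps))


def adjust_for_rear_speech(strength: float, ratio_db: float, relax_factor: float = 0.3) -> float:
    """Hypothetical rule: relax directionality when the dominant talker is behind the wearer."""
    return strength * relax_factor if ratio_db < 0.0 else strength


for theta in (0, 45, 135, 180):
    front, back = cardioid_gains(theta)
    ratio = front_back_ratio_db(front ** 2, back ** 2)
    print(theta, round(adjust_for_rear_speech(1.0, ratio), 1))
# prints 1.0 for 0 and 45 degrees, 0.3 for 135 and 180 degrees
```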

Ambient Modulation estimates the degree of stationarity of the signal mixture (speech, noise, or speech plus noise) by determining the ratio of the ambient noise floor to the overall level and how much this ratio fluctuates. In this way, Dynamic Soundscape Processing ensures that signals with lower modulation are still heard and are perceived as coming from the right direction.
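
The Ambient Modulation description translates fairly directly into code: compare each frame's level with the noise floor, then measure how much that ratio fluctuates. In the hypothetical sketch below, the fluctuation is the population standard deviation of the per-frame ratios, the quietest frame stands in for the floor when no estimate is supplied, and the 3 dB stationarity threshold is an illustrative assumption.

```python
import statistics

STATIONARY_MAX_SPREAD_DB = 3.0  # hypothetical threshold on ratio fluctuation


def ambient_modulation(levels_db, floor_db=None):
    """Return per-frame floor-to-level ratios (dB) and how much they fluctuate.

    If no floor estimate is supplied, the quietest frame is used as a stand-in.
    """
    if floor_db is None:
        floor_db = min(levels_db)
    ratios = [floor_db - level for level in levels_db]  # 0 dB when a frame sits at the floor
    return ratios, statistics.pstdev(ratios)


def is_stationary(levels_db, floor_db=None):
    """Treat the mixture as stationary when the ratio barely fluctuates."""
    _, spread = ambient_modulation(levels_db, floor_db)
    return spread < STATIONARY_MAX_SPREAD_DB


traffic_hum = [-40.0, -39.5, -40.2, -39.8]   # steady background
conversation = [-40.0, -15.0, -40.0, -15.0]  # speech bursts over the floor
print(is_stationary(traffic_hum), is_stationary(conversation))  # True False
```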

Own Voice Detection, part of Own Voice Processing (OVP), detects when the hearing aid wearer is talking. OVP then reduces gain for the wearer's own voice almost instantaneously. When the wearer is not speaking, the gain programmed into the device ensures audibility of the environment. As a result, the traditional compromise between own-voice comfort and audibility is essentially eliminated, leading to high satisfaction, particularly among first-time hearing aid users, with respect to both own-voice acceptance and hearing instrument benefit.5, 6
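
The gain behavior described for OVP can be pictured as a per-frame gain track that dips while the wearer speaks and recovers as soon as the wearer stops. The sketch below assumes the own-voice detector's decisions are already available as one boolean per frame; the 20 dB base gain, the 8 dB reduction, and the smoothing coefficient are hypothetical, and a single broadband gain is a simplification chosen for illustration.

```python
def own_voice_gain_track(own_voice_flags, base_gain_db=20.0, reduction_db=8.0, alpha=0.8):
    """Per-frame broadband gain (dB) that dips while the wearer is speaking.

    own_voice_flags: one boolean per frame from an own-voice detector (assumed given).
    alpha: one-pole smoothing coefficient in (0, 1]; values near 1 react almost instantly.
    """
    gains = []
    gain = base_gain_db
    for speaking in own_voice_flags:
        target = base_gain_db - reduction_db if speaking else base_gain_db
        gain += alpha * (target - gain)  # move most of the way toward the target each frame
        gains.append(gain)
    return gains


flags = [False, True, True, True, False, False, False]
print([round(g, 1) for g in own_voice_gain_track(flags)])
# [20.0, 13.6, 12.3, 12.1, 18.4, 19.7, 19.9]
```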

Motion Sensors built into the hearing instruments detect when the hearing aid wearer is walking. The wearer's motion directly affects whether stronger or weaker directional and noise reduction processing is needed. For example, a stationary wearer benefits from stronger processing, whereas a wearer in motion needs more environmental awareness and therefore a more relaxed setting.
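
A minimal way to detect walking from a three-axis accelerometer is to watch how much the magnitude of the acceleration fluctuates: at rest it stays near 1 g (gravity), while footsteps make it oscillate. The threshold, the relax factor, and the synthetic 2 Hz stepping signal below are hypothetical; the text does not state how the motion sensor data is analyzed.

```python
import math
import statistics

WALKING_STD_THRESHOLD_G = 0.05  # hypothetical threshold on acceleration fluctuation (in g)


def is_walking(accel_xyz):
    """Flag walking when the acceleration magnitude fluctuates more than a threshold.

    accel_xyz: sequence of (x, y, z) accelerometer readings in units of g.
    """
    magnitudes = [math.sqrt(x * x + y * y + z * z) for x, y, z in accel_xyz]
    return statistics.pstdev(magnitudes) > WALKING_STD_THRESHOLD_G


def relax_for_motion(strength, walking, relax_factor=0.5):
    """Hypothetical rule: weaker directional/noise reduction processing while walking."""
    return strength * relax_factor if walking else strength


rate_hz, step_hz = 50, 2.0  # one second of data, about two steps per second
sitting = [(0.0, 0.0, 1.0)] * rate_hz
strolling = [(0.0, 0.0, 1.0 + 0.2 * math.sin(2 * math.pi * step_hz * n / rate_hz))
             for n in range(rate_hz)]
print(relax_for_motion(1.0, is_walking(sitting)), relax_for_motion(1.0, is_walking(strolling)))
# 1.0 0.5
```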

This multi-dimensional situation analysis accurately identifies the acoustic environment and helps the wearer hear and focus on target speech better while competing signals are suppressed.7 YourSound technology determines the appropriate strength of Stationary Noise Reduction, the activation and strength of the Adaptive Directional microphones, and the activation of NarrowDirectionality and Directional Speech Enhancement, thereby preserving the information the wearer needs in each listening situation.
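
Instead of a class lookup, a continuous mapping lets each descriptor adjust the processing strength smoothly. The hypothetical sketch below combines a noise floor, an SNR, a front/back decision, and a walking flag into directional and noise reduction strengths between 0 and 1. The ramps, the max combination, and the relax factors are assumptions chosen for illustration, not YourSound's actual rules, and the activation of NarrowDirectionality and Directional Speech Enhancement is not modeled.

```python
from dataclasses import dataclass


@dataclass
class SceneDescriptors:
    noise_floor_db: float    # ambient noise floor, dB SPL
    snr_db: float            # dominant speech relative to competing noise
    speech_from_front: bool  # result of a front/back analysis
    walking: bool            # result of a motion analysis


def ramp(x: float, lo: float, hi: float) -> float:
    """0 at or below lo, 1 at or above hi, linear in between."""
    return min(1.0, max(0.0, (x - lo) / (hi - lo)))


def processing_strengths(d: SceneDescriptors) -> dict[str, float]:
    """Map several descriptors to continuous strengths in [0, 1] (hypothetical weights)."""
    noise_need = ramp(d.noise_floor_db, 45.0, 75.0)  # louder floor -> more support
    snr_need = ramp(-d.snr_db, -10.0, 5.0)           # SNR +10 dB -> 0, SNR -5 dB -> 1
    directional = max(noise_need, snr_need)          # either cue alone can ask for support
    if not d.speech_from_front:
        directional *= 0.3                           # keep rear talkers audible
    if d.walking:
        directional *= 0.5                           # favor awareness while moving
    return {"directional": directional, "noise_reduction": noise_need}


quiet_but_poor_snr = SceneDescriptors(40.0, -5.0, True, False)
loud_talker_behind = SceneDescriptors(75.0, 0.0, False, False)
print(processing_strengths(quiet_but_poor_snr)["directional"])  # 1.0
print(processing_strengths(loud_talker_behind)["directional"])  # 0.3
```

The quiet scene with a poor SNR still receives full directionality, in line with the SNR behavior described earlier, while the loud scene with a talker behind the wearer is relaxed.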

YourSound technology brings distinct benefits to the wearer in any listening situation. All the detection features described above are combined to identify each listening situation more accurately. When the directional and noise reduction characteristics are configured for that specific situation, the wearer can appreciate the following benefits of Signia Xperience.

Transparent: With Signia Xperience, use of the directional microphones and noise reduction follows the principle of using as much as necessary and as little as possible. This approach provides more access to sounds in the environment for improved situational awareness without compromising on speech intelligibility.

Uncompromised speech intelligibility: Regardless of the complexity of the situation, directional processing and noise reduction continue the tradition of previous hearing instrument generations, optimizing speech intelligibility and reducing listening effort.8 OVP also makes more closed fittings possible without the usual negative effect on own-voice acceptance, enhancing many of the speech intelligibility benefits these features provide.6

Better localization: With Ultra HD e2e, noise reduction and directional processing are synchronized between the left and right devices, maintaining stable localization of sound sources even when they are attenuated. This goes beyond previous generations by also ensuring the audibility of sounds, especially those at the wearer's sides, and preserving the sense of direction for every sound source.
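
Interaural level differences (ILDs) are one of the cues listeners use to localize sounds, and independent processing on each ear can distort them. The sketch below compares independent per-ear noise reduction gains with one common linking strategy, applying the more attenuating gain to both ears. The levels and gains are made-up values, and linking by minimum gain is an assumed technique for illustration, not a description of how Ultra HD e2e synchronizes the devices.

```python
def link_gains(left_gain_db: float, right_gain_db: float) -> tuple[float, float]:
    """Apply the smaller (more attenuating) of the two gains to both ears."""
    shared = min(left_gain_db, right_gain_db)
    return shared, shared


def ild_db(left_level_db: float, right_level_db: float) -> float:
    """Interaural level difference: positive when the left ear is louder."""
    return left_level_db - right_level_db


# A source on the wearer's left arrives 8 dB louder at the left ear.
left_in, right_in = 70.0, 62.0
# Hypothetical noise reduction gains computed independently per ear.
left_gain, right_gain = -6.0, -2.0

independent = ild_db(left_in + left_gain, right_in + right_gain)
linked_l, linked_r = link_gains(left_gain, right_gain)
linked = ild_db(left_in + linked_l, right_in + linked_r)
print(ild_db(left_in, right_in), independent, linked)  # 8.0 4.0 8.0
```

Independent gains halve the 8 dB cue in this example, while linked gains attenuate the source equally at both ears and leave the cue intact.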

Natural sound: Listeners can easily focus on conversations while remaining immersed in the acoustic scene, which reduces confusion about the surrounding listening environment. The goal of YourSound technology is to optimize both intelligibility and situational awareness.

To illustrate how Xperience adapts to ever-changing environments and needs, here is a real-world example involving a hearing aid user we will call Jack.

Jack is at an outdoor café in the city. This environment includes nearby traffic noise, other patrons talking, wait staff moving about, dishes clattering, and other general noise. As Jack sits with his speech partner, Xperience analyzes the noise floor, recognizes that the speech partner is sitting across the table, and determines that the SNR is negative, i.e., by any standard a difficult listening situation. Dynamic Soundscape Processing adapts the directional microphones and noise reduction to help Jack hear his partner. When Jack speaks, Xperience detects his voice and Own Voice Processing adjusts so that his voice sounds natural, then immediately returns to the appropriate gain when his partner speaks. The Ambient Modulation feature distinguishes the road noise from the activity around him and helps him keep the perception of the road's distance without distortion. If the people at the next table get up to leave or start moving around, the SNR degrades further, and the directionality increases accordingly.

After the neighboring table has been vacated, the waiter comes up behind Jack to ask whether he would like another drink. The Front/Back detection now identifies a talker behind him and relaxes the directionality, improving audibility for that talker. Because he is more aware of his surroundings, Jack can even ask his primary speech partner whether she wants anything else before turning around to face the waiter. After the waiter leaves, stronger directionality is engaged as Jack resumes the conversation with his speech partner. If people are talking at the table behind his partner, the Distance analysis notes that they are farther away, even though they are within the directional microphone beam. Dynamic Soundscape Processing helps preserve his perception of these distance differences so he can still best attend to his speech partner.

When Jack and his speech partner decide to leave, they get up and walk side by side instead of sitting across from one another. The acoustic scene has not changed, but Jack's listening needs have. As he walks, the directionality relaxes, allowing him to hear his partner beside him as they walk and talk on the way to their next location.

With more traditional technologies that depend on discrete classification categories, the hearing aid would analyze the noise floor and the presence of speech, classify the situation as speech in noise, and apply directionality. That is all. Signia Xperience with Dynamic Soundscape Processing, on the other hand, allows the hearing aid user to hear what matters to him or her.

Dr. Matthias Froehlich is a global audiology strategy expert at WS Audiology in Erlangen, Germany. He is responsible for defining and validating the audiological benefit of new hearing instrument platforms. Dr. Froehlich joined WS Audiology (then Siemens Audiology Group) in 2002 and has since held various positions in R&D, Product Management, and Marketing. He received his Ph.D. in Physics from Goettingen University, Germany.