Directionality Assessment of Adaptive Binaural Beamforming with Noise Suppression in Hearing Aids

By Marc Aubreville and Stefan Petrausch

1. Introduction

Today's hearing aids make use of adaptive spatial filtering techniques that exploit the temporal and spatial sparsity of most listening environments [1]. These approaches include adaptive beamforming [2] as well as spectral subtraction [1]. They are applied both monaurally [3] (using a combination of front- and rear-facing patterns) and binaurally (using a combination of ipsi- and contralateral signals) [4]. These approaches lead to substantial improvements in subjective noise reduction [3]; however, they perform best in non-diffuse and non-stationary environments. Real environments are always a mixture of diffuse and directional sources, and of stationary and non-stationary signals. Speech signals are non-stationary and directional, which makes them good candidates for these techniques. Interfering speech is also typically very distracting, because its temporal and spectral characteristics are similar to those of the target speech.

The spatial characteristics of directional processing techniques are usually measured under conditions that do not reflect the actual use case. Such measurements are performed, for example, in anechoic chambers, without any target signal, often using pure noise signals as interferers, or even under free-field conditions. To find a measurement method that reflects the hearing impression produced by these advanced algorithms, one should use measurement conditions that are much closer to the real environment. This was the motivation for developing the approach described in this paper.

2. Superposition Approach

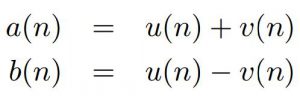

Hagerman and Olofsson presented an approach to investigate the effect of noise reduction in hearing aids, using a superposition of a target signal with a noise signal [5]. Two signals are presented to the hearing aid in sequential order. Denoting these signals a(n) and b(n), we have

$$a(n) = u(n) + v(n), \qquad b(n) = u(n) - v(n), \tag{1}$$

where u(n) and v(n) are uncorrelated. With ar(n) and br(n) denoting the recorded signals, we expect

$$a_r(n) = u_r(n) + v_r(n) + e_1(n), \qquad b_r(n) = u_r(n) - v_r(n) + e_2(n), \tag{2}$$

where ur(n) and vr(n) are the respective signal components corresponding to the input signals u(n) and v(n), and e1(n) and e2(n) denote the non-linear signal alterations, which should be considered as error signals for our signal separation approach.

In order to achieve reproducible results, we expect the hearing aids to be in the same initial condition for both input signal playbacks. We furthermore expect that the phase differences in the input signal will produce corresponding phase differences in the output signal, and that the phase is not altered by further signal processing algorithms, for example feedback cancellation techniques [6]. We further rely on equal behavior in terms of other time-variant processes in the hearing aid, such as noise reduction or classification-based situation recognition. Assuming these time-variant processes to be significantly slower than the signal components, and assuming a linear operating point of the hearing aids, we can neglect the error signals, e1/2(n) ≈ 0, and estimate the signal components as

$$\hat{u}_r(n) = \tfrac{1}{2}\left(a_r(n) + b_r(n)\right), \tag{3}$$

$$\hat{v}_r(n) = \tfrac{1}{2}\left(a_r(n) - b_r(n)\right). \tag{4}$$
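The separation step can be illustrated with a small numerical sketch. It assumes the sign convention a(n) = u(n) + v(n), b(n) = u(n) − v(n), and it uses a fixed FIR filter as a purely hypothetical stand-in for a hearing aid in a linear, time-invariant operating point. The names `separate` and `toy_device` are illustrative and do not come from the measurement software.

```python
import numpy as np

def separate(a_r, b_r):
    """Estimate target and interferer components from the two recordings."""
    u_hat = 0.5 * (a_r + b_r)   # interferer cancels in the sum
    v_hat = 0.5 * (a_r - b_r)   # target cancels in the difference
    return u_hat, v_hat

def toy_device(x, h):
    """Hypothetical linear stand-in for the device: a fixed FIR filter."""
    return np.convolve(x, h)[: len(x)]

rng = np.random.default_rng(0)
u = rng.standard_normal(1000)    # target (placeholder for speech)
v = rng.standard_normal(1000)    # interferer, uncorrelated with u
h = np.array([0.6, 0.3, 0.1])    # assumed impulse response

a_r = toy_device(u + v, h)       # first playback
b_r = toy_device(u - v, h)       # second playback, interferer inverted
u_hat, v_hat = separate(a_r, b_r)

# For a perfectly linear device the estimates equal the filtered components.
target_ok = np.allclose(u_hat, toy_device(u, h))
interferer_ok = np.allclose(v_hat, toy_device(v, h))
```

With a real device, any difference between the estimates and the true components corresponds to the error signals e1(n) and e2(n), which is why the linearity and repeatability assumptions above matter.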

Hagerman originally used this method to evaluate the performance of noise reduction schemes in hearing aids [5]. Wu and Bentler used a similar approach to measure the directivity of hearing aids with adaptive beamforming schemes [7]. In their work, they played back the two signals from two speakers, one with a fixed position relative to the rotating hearing aid (the jammer signal) and one fixed to the room (the probe signal). In their setup, the main task was to alter the directional pattern as little as possible. They therefore used a probe signal at a much lower level than the jammer signal, and in later work even impulses as a probe [8].

In contrast to their goal, we want to evaluate the perceived effect of all adaptive algorithms, such as adaptive beamformers and Wiener-filter-based signal enhancement techniques. We want to estimate, under real-world conditions, the signal enhancement that real hearing aids provide. In technical terms, this is best captured by the influence on

- The target signal ur(n, φu), originating from a defined direction of focus, e.g. directly frontal, φu = 0°.

- The interfering signal vr(n, φv), originating from other directions.

As it is common for directivity evaluation in hearing aids to show attenuation over angle (in polar plots), we propose to measure the ratio of target and interferer signal for each interferer angle φv separately. Using the DFT for the time-to-frequency transformation, n → f, we define the Interferer to Target Ratio (ITR) as:
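A minimal sketch of how such a ratio could be evaluated from the separated signals follows. The exact form is an assumption here: the sketch takes the ITR to be the power ratio of the estimated interferer to the estimated target at one DFT bin, expressed in dB. The function name `itr_db` and the test tones are hypothetical.

```python
import numpy as np

def itr_db(u_hat, v_hat, fs, f):
    """ITR in dB at the DFT bin closest to frequency f (assumed definition)."""
    n = min(len(u_hat), len(v_hat))
    U = np.fft.rfft(u_hat[:n])                 # spectrum of the target estimate
    V = np.fft.rfft(v_hat[:n])                 # spectrum of the interferer estimate
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    k = int(np.argmin(np.abs(freqs - f)))      # nearest bin to f
    return 10.0 * np.log10(np.abs(V[k]) ** 2 / np.abs(U[k]) ** 2)

# Synthetic check: interferer tone at half the target amplitude.
fs = 16000
t = np.arange(fs) / fs
u_hat = np.sin(2 * np.pi * 1000 * t)
v_hat = 0.5 * np.sin(2 * np.pi * 1000 * t)
itr_1k = itr_db(u_hat, v_hat, fs, 1000.0)      # about -6 dB
```

For speech stimuli a single bin fluctuates strongly, so in practice one would probably average the powers over a frequency band or over several frames before forming the ratio.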

3. Measurement Setup

3.1. Setup and Procedure

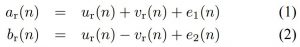

For the experiment, nine Genelec 8030 speakers with equal settings and calibration were used, all located in a low-reverberant lab room. The devices under test were placed on a KEMAR manikin, which was mounted on a rotor that can be controlled automatically via a USB interface. The speakers were connected to an 8-channel RME Hammerfall DSP Multiface card, with the headphone jack serving as the ninth channel. In the first run, the target signal is always played back from the speaker at 0° (see Figure 1). The interfering signal direction changes sequentially, using all eight speakers located at multiples of 45°. For the second run, the KEMAR is rotated to 22.5° and the target signal is played back from the speaker at 22.5°. Subsequently, the whole procedure is repeated. This way, we obtain M = 16 measurements, corresponding to all angles in 22.5° steps.
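The way the two runs interleave to give 16 interferer angles can be checked with a short sketch. The variable names and the sign convention for the manikin rotation are assumptions; either sign yields the same set of angles.

```python
# Eight interferer speakers at multiples of 45 degrees (room coordinates).
speaker_angles = [45.0 * k for k in range(8)]

def relative_angles(manikin_rotation):
    """Interferer angles as seen from the manikin for a given rotation."""
    return [(s - manikin_rotation) % 360.0 for s in speaker_angles]

run1 = relative_angles(0.0)     # target from the speaker at 0 degrees
run2 = relative_angles(22.5)    # manikin rotated, target from the speaker at 22.5 degrees

all_angles = sorted(set(run1 + run2))
```

The first run covers the multiples of 45° and the second run covers the angles halfway between them, so `all_angles` contains every multiple of 22.5° exactly once.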

Fig. 1. Measurement setup as applied here. The loudspeaker at 22.5° was used to extend the angular resolution.

3.2. Choice of Stimulus Signal

For directionality measurements, two kinds of signals are commonly used: sine sweeps are good for detecting nonlinearities, while noise signals are more robust to interference. Wu and Bentler used white noise for both probe and jammer signals. This has the advantage of driving the polar pattern at all frequencies simultaneously. Such stationary signals, however, cannot reflect the performance of quickly adapting spatial processing schemes and might trigger stationary noise reduction, even for the target signal. There are also speech-like signals, which mimic the temporal and spectral behavior of speech [9]. However, since hearing aids are optimized for real speech signals, we found a real speech signal to be most accurate for this task. The international speech test signal (ISTS) [10] contains speech of many languages and is thus a good candidate. The ISTS has frequency components up to 16 kHz and, above 4 kHz, even more power than the ICRA 5 noise [10]. Furthermore, many hearing aids provide an omnidirectional characteristic in situations without background noise, i.e. where the noise floor is very low. We therefore added low-level stationary pink noise to both speech signals so that directional processing is activated at all.
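Adding a stationary pink noise floor to a speech stimulus could look like the following sketch. The noise level is not stated in the text, so the value of -30 dB relative to the speech RMS is a hypothetical placeholder, as are the function names and the random stand-in for the speech signal.

```python
import numpy as np

def pink_noise(n, rng):
    """Unit-RMS noise with power roughly proportional to 1/f."""
    spectrum = np.fft.rfft(rng.standard_normal(n))
    freqs = np.fft.rfftfreq(n)
    freqs[0] = freqs[1]                  # avoid division by zero at DC
    spectrum = spectrum / np.sqrt(freqs) # amplitude ~ 1/sqrt(f), power ~ 1/f
    x = np.fft.irfft(spectrum, n)
    return x / np.sqrt(np.mean(x ** 2))

def add_noise_floor(speech, rel_level_db, rng):
    """Mix pink noise at rel_level_db relative to the speech RMS."""
    noise = pink_noise(len(speech), rng)
    speech_rms = np.sqrt(np.mean(speech ** 2))
    return speech + speech_rms * 10.0 ** (rel_level_db / 20.0) * noise

rng = np.random.default_rng(1)
speech = rng.standard_normal(48000)      # placeholder, not a real speech signal
mixed = add_noise_floor(speech, -30.0, rng)   # -30 dB is an assumed level
```

In an actual measurement the same noise floor would be added to both the target and the interferer stimulus, as described above.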

3.3. Devices and Settings

For the evaluation, three high-end devices from three different manufacturers were chosen. Two of them provided binaural signal processing based on bidirectional wireless audio signal transmission. For comparison, we tested three directional modes as proposed by the manufacturers' fitting software:

- Omnidirectional for calm environments,

- Monaural Directivity for noisy conditions,

- Binaural Directivity for noisy conditions.

In order to fulfill the assumptions from Section 2 and to obtain a good approximation of the interferer and target signals according to equations 3 and 4, we disabled all feedback cancellation. The gain settings were taken as proposed for a flat hearing loss; however, we verified that the overall output level would not result in any saturation or clipping. Furthermore, we disabled dynamic compression as far as possible to achieve linear behavior of the hearing aids. All other settings were left as proposed by the respective manufacturers.

3.4. Accuracy and Initialization

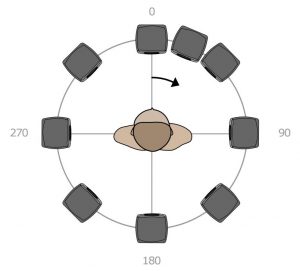

Despite the linearized settings, it was still possible to observe non-linear signal alterations e1/2(n) in speech pauses, especially for the binaural mode of Device A. We therefore decided to add an initial test run without the target signal u(n) in order to evaluate the performance of the superposition approach. This way we were able to monitor both the time until the hearing aids reached a stable (initial) state for repeated playback and the amount of remaining non-linear signal alterations. With u(n) ≡ 0 we expect ur(n) ≡ 0. Thus, using equations 1 and 2, we can estimate the relative measurement error to be

In order to achieve good measurement accuracy, we introduced an onset time t_onset for all measurement runs and evaluated the total measurement accuracy µ as the mean square value of the current measurement accuracy between this onset time and the end of the measurement for φ = 0°.
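The test run without a target can be sketched as follows. The form of the error measure is an assumption: with u(n) ≡ 0, whatever remains in the target estimate is residual error, so the sketch relates its mean power to that of the interferer estimate after the onset time. The name `measurement_error_db` and the synthetic signals are hypothetical.

```python
import numpy as np

def measurement_error_db(a_r, b_r, fs, t_onset):
    """Residual target power relative to interferer power, after t_onset."""
    start = int(round(t_onset * fs))
    u_hat = 0.5 * (a_r[start:] + b_r[start:])   # should vanish when u(n) = 0
    v_hat = 0.5 * (a_r[start:] - b_r[start:])
    return 10.0 * np.log10(np.mean(u_hat ** 2) / np.mean(v_hat ** 2))

# Synthetic recordings: interferer only, plus small independent error signals.
fs = 16000
rng = np.random.default_rng(2)
v_r = rng.standard_normal(30 * fs)
e1 = 0.01 * rng.standard_normal(v_r.size)
e2 = 0.01 * rng.standard_normal(v_r.size)
a_r = v_r + e1          # playback of  v(n)
b_r = -v_r + e2         # playback of -v(n)

mu_db = measurement_error_db(a_r, b_r, fs, t_onset=20.0)
```

A strongly negative value indicates that the superposition assumptions hold well for the device and mode under test. Values approaching the measured ITR indicate that the ITR is limited by the measurement error.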

Fig. 2. Estimation of the relative measurement error according to equation 6 for 0° over time for the three different devices. The mean values µ were calculated from t_onset = 20 s to the signal end and indicate the measurement accuracy hereinafter.

4. Measurement Results

4.1. Behavior of the Target Signal

One major benefit of the method proposed here is that any undesired alterations of the target signal can be checked as well. This was done, as depicted in Figure 3, at f = 1 kHz by plotting the 0° target signal level as a function of the interferer angle φv. There is a slight tendency towards more target signal alterations while suppressing the interferer for more complex directional modes, but all deviations are below 0.8 dB.

Fig. 3. Normalized level of the 0° target signal at f = 1 kHz for the devices and directional modes proposed in Section 3.3. The circular shape is a good indication that the target is not affected while the simultaneous interferer is attenuated.

4.2. Interferer to Target Ratio

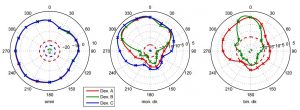

The most interesting result is the attenuation of the interferer signal relative to the target signal, depicted in Figure 4 in the form of the ITR introduced in equation 5. Owing to the method and signals chosen here, this is not only a technical measurement but also a realistic use case that reflects the effect perceived by the hearing aid wearer.

Fig. 4. Corresponding interferer to target ratios (ITR) for the devices and modes from Figure 3 for f = 1 kHz. The solid line is the ITR measurement; the dashed line is the accuracy limit µ as measured according to Figure 2.

Figure 4 also shows the limitations of this approach. The binaural directivity measurements of Device A around 210° are of the same order of magnitude as the estimated measurement error µ. However, as µ was estimated for the worst-case situation of 0°, the measurement can still be considered plausible.

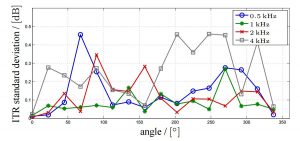

4.3. Repeatability

We performed 20 consecutive measurements of the same condition in order to evaluate the repeatability. As shown in Figure 5, the standard deviations were small; the mean over all channels and angles was 0.15 dB. The results are of an order comparable to that found by Wu and Bentler [8].

Fig. 5. Standard deviation of N = 20 consecutive ITR measurements of Device B in monaural directional mode. The maximum deviation from the mean values was below 1 dB.

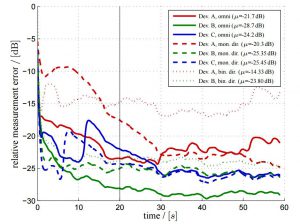

4.4. Comparison to other Approaches

In order to justify the additional effort required by the proposed method, we compared the results to simpler approaches. Specifically, we omitted the target speaker and tested two signal types for the interferer: the proposed ISTS speech signal and pure noise with identical spectral shaping.

Fig. 6. Level of the interferer signal for Devices A and B in monaural directivity at f = 1 kHz for three different measurement modes: the first as proposed here, with simultaneous target and interfering speech; the second and third with interfering speech only and interfering noise only, respectively.

The resulting level of the interfering signal for the different measurement methods is depicted in Figure 6. One can clearly see significant differences in the devices' behavior. When there is no target signal, the devices apply more noise reduction to signals coming from the rear. Furthermore, both devices clearly distinguish between speech signals and stationary noise, by means of an additional overall attenuation. As the proposed method is close to real use cases, we can expect it to be the best match to the effect perceived by hearing aid users.

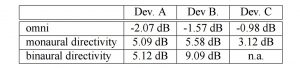

Table 1. Articulation based sequential directivity index (sAIDI) for the devices and modes measured in Figure 4.

5. Construction of a Directivity Index

The so-called Directivity Index (DI) is a well-established standard for evaluating directionality in hearing aids [11]. The ITR obtained with this method closely resembles the data required for the DI. However, the DI assumes that the measurements for the distinct directions can be summed linearly, which effectively requires a fully spatially diffuse scenario. In contrast to this prerequisite, adaptive spatial processing schemes in modern hearing aids exploit the spatio-temporal sparseness of sound fields, which in our case is represented by a sequential presentation of interfering signals.

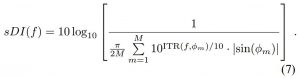

Yet the effects achieved by current algorithms yield an increase in speech intelligibility [12]. The method presented here tries to account for these benefits. We propose to introduce the so-called sequential Directivity Index (sDI), analogous to the definition of the DI, as

The DI is commonly weighted using the articulation index (AI) to calculate a broadband figure indicating the impact of the directivity on speech signals (see [11]). Applying the same weighting to the sequential DI, we obtain the sequential AI-DI (sAIDI), which gives a good overview of the directivity of the measured modes, as can be seen in Table 1.
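How a per-band sDI and its AI-weighted broadband value could be computed from the ITR data is sketched below. Both formulas are assumptions made by analogy with the planar DI: the sketch averages the linear ITR power ratios over all M interferer angles with equal weight and takes the negative level in dB. The band weights in the example are hypothetical placeholders, not the values from the standard [11].

```python
import numpy as np

def sdi_db(itr_db_per_angle):
    """Assumed sDI for one band: negative mean ITR power ratio, in dB."""
    ratios = 10.0 ** (np.asarray(itr_db_per_angle, dtype=float) / 10.0)
    return float(-10.0 * np.log10(np.mean(ratios)))

def ai_weighted(sdi_per_band, weights):
    """Broadband figure: weighted mean of the per-band sDI values."""
    w = np.asarray(weights, dtype=float)
    return float(np.sum(w * np.asarray(sdi_per_band, dtype=float)) / np.sum(w))

# Example with M = 16 angles and two bands (all numbers are made up).
itr_band1 = [0.0] + [-6.0] * 15      # frontal interferer unattenuated
itr_band2 = [0.0] + [-12.0] * 15
sdi_bands = [sdi_db(itr_band1), sdi_db(itr_band2)]
saidi = ai_weighted(sdi_bands, weights=[0.4, 0.6])   # hypothetical weights
```

An omnidirectional characteristic, where the ITR is 0 dB at every angle, gives an sDI of 0 dB under this definition, and stronger attenuation of off-axis interferers gives larger values.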

6. Summary

In this paper we presented a new approach for reliably assessing the directivity of state-of-the-art binaural hearing aids. The proposed method treats the devices under test as black boxes and does not rely on any assumed internal functionality, as it closely resembles a valid use-case scenario for any hearing aid. We introduced means to automatically evaluate the measurement performance in order to avoid misleading conclusions. The method may also be very useful for objective evaluations of both directional processing and noise reduction. The sAIDI provides an easy way to compare the different approaches.

7. References

[1] V. Hamacher, J. Chalupper, J. Eggers, E. Fischer, U. Kornagel, H. Puder, and U. Rass, “Signal processing in high-end hearing aids: State of the art, challenges, and future trends,” EURASIP J. Appl. Signal Process., vol. 2005, pp. 2915–2929, Jan. 2005.

[2] Gary W. Elko and Anh-Tho Nguyen Pong, “A simple adaptive first-order differential microphone,” in Applications of Signal Processing to Audio and Acoustics, 1995., IEEE ASSP Workshop on. IEEE, 1995, pp. 169–172.

[3] Henning Puder, Eghart Fischer, and Jens Hain, “Optimized directional processing in hearing aids with integrated spatial noise reduction,” in Acoustic Signal Enhancement; Proceedings of IWAENC 2012; International Workshop on. VDE, 2012, pp. 1–4.

[4] A. Homayoun Kamkar-Parsi and Martin Bouchard, “Instantaneous binaural target PSD estimation for hearing aid noise reduction in complex acoustic environments,” IEEE Transactions on Instrumentation and Measurement, vol. 60, no. 4, pp. 1141–1154, 2011.

[5] Björn Hagerman and Åke Olofsson, “A method to measure the effect of noise reduction algorithms using simultaneous speech and noise,” Acta Acustica united with Acustica, vol. 90, no. 2, pp. 356–361, 2004.

[6] Volkmar Hamacher and Ulrich Kornagel, “Method and device for reducing the feedback in acoustic systems,” Patent EP 1648197 B1, 2006.

[7] Yu-Hsiang Wu and Ruth A. Bentler, “Using a signal cancellation technique to assess adaptive directivity of hearing aids,” The Journal of the Acoustical Society of America, vol. 122, no. 1, pp. 496–511, 2007.

[8] Yu-Hsiang Wu and Ruth A. Bentler, “Using a signal cancellation technique involving impulse response to assess directivity of hearing aids,” The Journal of the Acoustical Society of America, vol. 126, no. 6, pp. 3214–3226, 2009.

[9] Hugo Fastl, “A background noise for speech audiometry,” Audiological Acoustics, vol. 26, pp. 2–13, 1987.

[10] Inga Holube, Stefan Fredelake, Marcel Vlaming, and Birger Kollmeier, “Development and analysis of an international speech test signal (ISTS),” International Journal of Audiology, vol. 49, no. 12, pp. 891–903, 2010, PMID: 21070124.

[11] American National Standards Institute, “American national standard ANSI S3.35-2004: Method of measurement of performance characteristics of hearing aids under simulated real-ear working conditions,” 2004.

[12] Thomas A. Powers and Matthias Fröhlich, “Clinical results with a new wireless binaural directional hearing system,” The Hearing Review, vol. 21, no. 11, pp. 32–34, 2014.

Aubreville, M., & Petrausch, S. (2015). Directionality assessment of adaptive binaural beamforming with noise suppression in hearing aids (pp. 211–215). Presented at the ICASSP 2015 – 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), IEEE. http://doi.org/10.1109/ICASSP.2015.7177962