3D Classifier

Aaron Jacobs, M.Aud B.Eng (Hons)

This abundant data feed requires a high-performance control system to make the correct decisions (brake, accelerate, coast, steer) at the correct time. State-of-the-art hearing aids also use multi-dimensional inputs, in which the wearer’s motion state and voice activity complement conventional acoustic information. This information is fed into an intelligent classification system, which draws on a richer data set than an acoustic-only classifier and so allows the hearing aid to adapt seamlessly to the wearer’s communication and listening needs. 3D Classifier captures three different aspects of the wearer’s communication needs:

Acoustic information

3D Classifier identifies six acoustic states for an accurate and representative classification of the wearer’s environment. For more information on the technical trade-offs associated with acoustic classification, see the sidebar.

Own voice activity

The wearer’s voice activity provides 3D Classifier with important information about the wearer’s amplification needs. In turn, 3D Classifier contributes to steering the Own Voice Processing algorithm, which provides benefits to the wearer by improving the amplified perception of their own voice.

Motion state

Motion information from the wearer’s smartphone adds context about the wearer’s communication needs. For example, if the wearer is moving through their environment, a more immersive experience (that is, a higher emphasis on multi-directional sound) is likely to be beneficial.

Twenty-four detection states for unprecedented control

Acoustic classification is the foundation of a hearing aid’s classification system, but acoustic information alone cannot predict all aspects of the wearer’s communication and listening needs. Further, classification systems typically use a limited number of discrete detection states to avoid a high rate of detection error. Using additional detection dimensions (such as own-voice activity and motion detection) yields a higher number of potential detection states, while maintaining the accuracy advantage of limiting the number of acoustic detection states. Using this approach, 3D Classifier combines six distinct acoustic listening conditions with the own-voice and motion dimensions described above, which yields twenty-four distinct detection states. The wearer’s communication needs can therefore be assessed with a greater degree of certainty than if only acoustic classification were used.
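The count of twenty-four can be reproduced with a short sketch. It assumes that own-voice activity and motion are each treated as a two-valued dimension (detected or not detected), which is consistent with the stated total but is not spelled out in this article. Only four acoustic classes are named here (quiet, speech in noise, noise, music); the remaining two labels, and all identifiers in the code, are placeholders for illustration.

```python
from itertools import product

# Four acoustic classes are named in this article; the last two labels are
# placeholders, because the article does not list all six.
ACOUSTIC_STATES = [
    "quiet",
    "speech_in_noise",
    "noise",
    "music",
    "acoustic_state_5",  # placeholder
    "acoustic_state_6",  # placeholder
]

# Assumption: each additional dimension is binary (detected / not detected).
OWN_VOICE_STATES = [False, True]
MOTION_STATES = [False, True]


def detection_states():
    """Return every (acoustic, own_voice, moving) combination."""
    return list(product(ACOUSTIC_STATES, OWN_VOICE_STATES, MOTION_STATES))


if __name__ == "__main__":
    states = detection_states()
    print(len(states))  # 6 * 2 * 2 combinations
```

The point of the sketch is that the number of acoustic classes stays at six, while the two extra dimensions multiply the number of combined states.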

3D Classifier in action

Once 3D Classifier has selected one of the twenty-four detection states, that state is used to steer various processing algorithms, such as Own Voice Processing, HD Spatial, directional processing, and noise reduction. The following examples illustrate the effectiveness and synergy of this advanced processing in five environments.

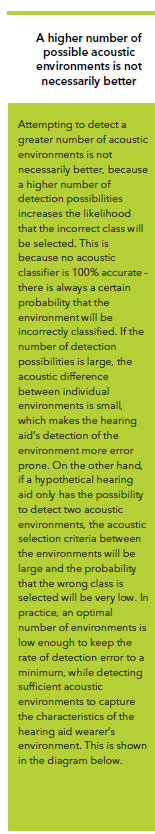

Example 1: Early breakfast outside

This situation features a low noise level and, because the wearer is outdoors, minimal reverberation. 3D Classifier has detected a quiet acoustic environment, the wearer’s voice has not been detected at the instant shown in the illustration, and the hearing aid has identified that the wearer is not moving. The hearing aids are correspondingly configured for maximum spatial performance (that is, a setting which maximizes the wearer’s ability to locate the direction of sound sources and judge the distance to them). Due to the low noise level, binaural directionality is configured to a minimum level of 10 percent. This level aims to restore the natural directionality of the external ear, which is lost when behind-the-ear hearing aids are worn because of the microphone placement. Other algorithms such as noise reduction are also reduced or deactivated.

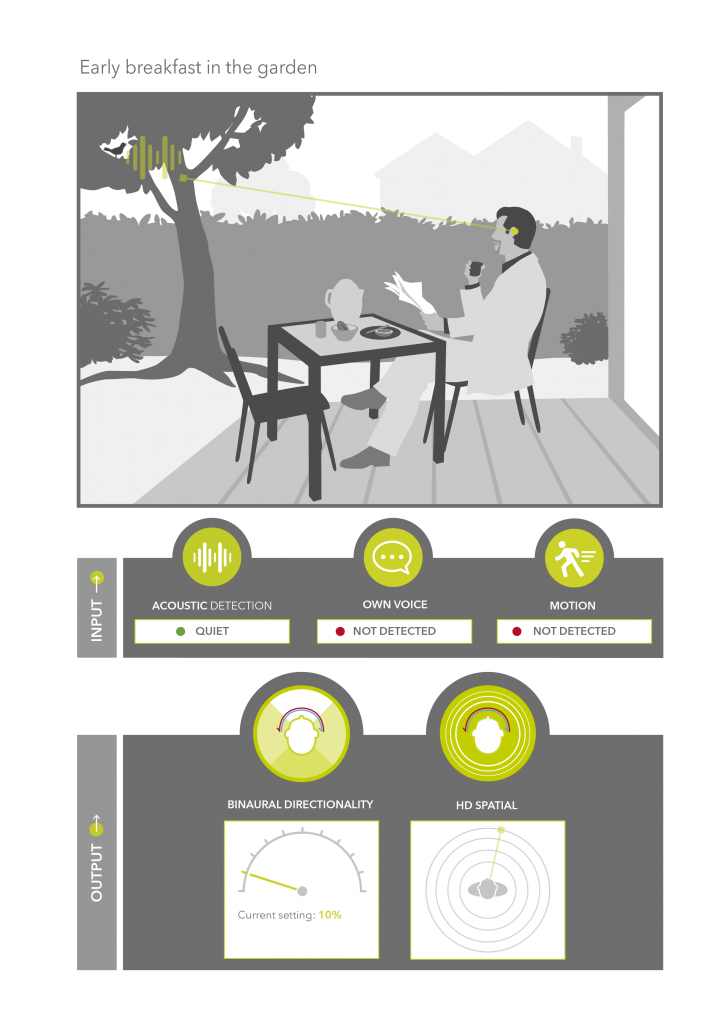

Example 2: Sitting with colleagues in the lunch room

This complex environment has multiple sound sources, moderate reverberation, and a medium to high noise level. Binaural directionality (80 percent strength) is activated to improve the signal-to-noise ratio of the wearer’s conversation partners. Traditional directional processing can lead to a less natural hearing experience; HD Spatial counters this by enhancing key spatial cues which may be affected by directional processing. The wearer benefits from the advantage of binaural directionality in high-noise environments¹, while maintaining a natural sense of space (in terms of the direction of sound sources and the perceived distance from those sound sources). Because a speech-in-noise environment has been detected, and because the wearer is stationary, HD Spatial is configured to give proportionally more attention to near-frontal sound sources and less attention to sound sources behind the wearer. As the wearer converses with his colleagues seated at the table, 3D Classifier detects the wearer’s voice and triggers Own Voice Processing to adapt the gain levels in real time. As soon as the wearer stops talking, the gain is instantly restored to a level appropriate for external voices. Own Voice Processing avoids the unnecessary loudness and reduction in sound quality which usually occur when the gain required for external voices is applied to the wearer’s own voice.²,³

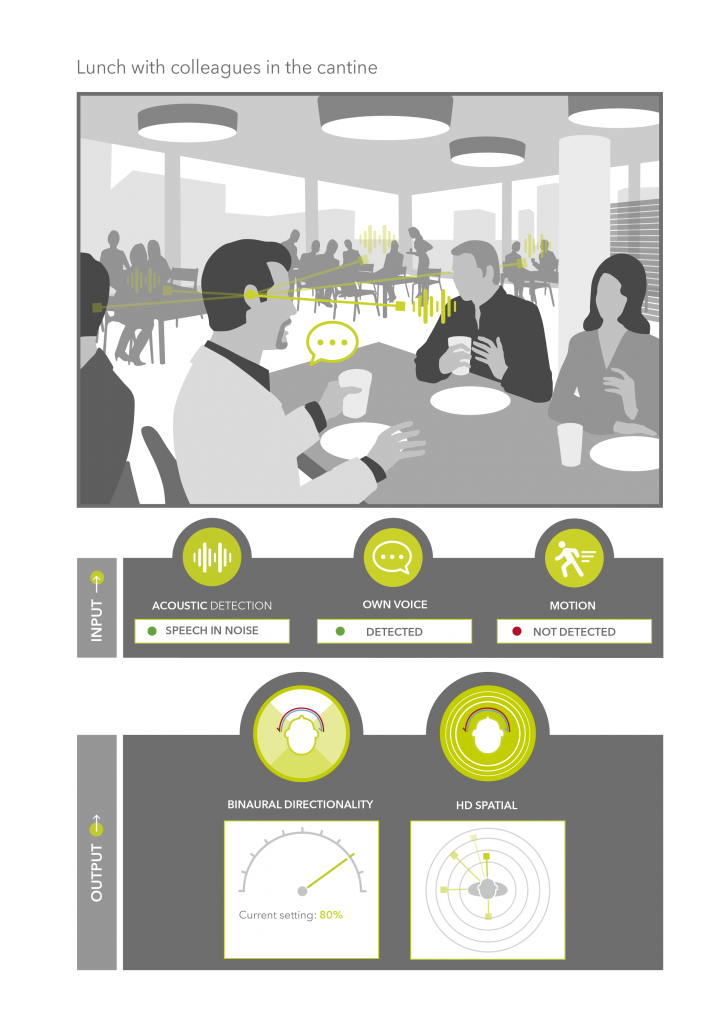

Example 3: Walking back to the car

This situation is less complex than the previous environment, because speech (from external voices and the wearer himself) is not present. Because of the passing traffic, the acoustic environment has been classified as noise, which in most hearing aids would likely trigger significant reduction of the signal via directional processing, noise reduction, or both. In this case, however, 3D Classifier has detected that the wearer is walking, which has triggered the hearing aid to remain in a full spatial mode (only 10 percent directionality). Therefore, as the wearer is about to cross the side street, he is fully aware of the car which has approached from behind and is about to turn the corner. In addition, he remains aware of the location and distance of other major sound sources, such as the approaching tram.
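The directionality settings quoted in Examples 1 to 3 can be collected into a small lookup, shown here only as a way of reading the examples side by side. This is not the hearing aid’s steering logic, which is not published in this article and is described as adaptive rather than table-driven. The function and dictionary names are hypothetical, and combinations for which no figure is quoted return None rather than a guessed value.

```python
from typing import Optional

# Binaural directionality strengths (percent) quoted in Examples 1-3,
# keyed by (acoustic class, wearer is moving). Own-voice activity is left
# out of the key because the examples tie it to Own Voice Processing gain,
# not to a directionality figure.
DOCUMENTED_DIRECTIONALITY = {
    ("quiet", False): 10,            # Example 1: early breakfast outside
    ("speech_in_noise", False): 80,  # Example 2: lunch room, stationary
    ("noise", True): 10,             # Example 3: walking back to the car
}


def documented_directionality(acoustic: str, moving: bool) -> Optional[int]:
    """Return the quoted strength in percent, or None if no figure is quoted."""
    return DOCUMENTED_DIRECTIONALITY.get((acoustic, moving))


if __name__ == "__main__":
    # Noise while walking keeps the full spatial mode.
    print(documented_directionality("noise", True))
    # No figure is quoted for speech in noise while walking.
    print(documented_directionality("speech_in_noise", True))
```

Read this way, Example 3 shows the effect of the motion dimension: a noise classification alone would suggest stronger directional processing, but the detected walking state keeps directionality at its minimum.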

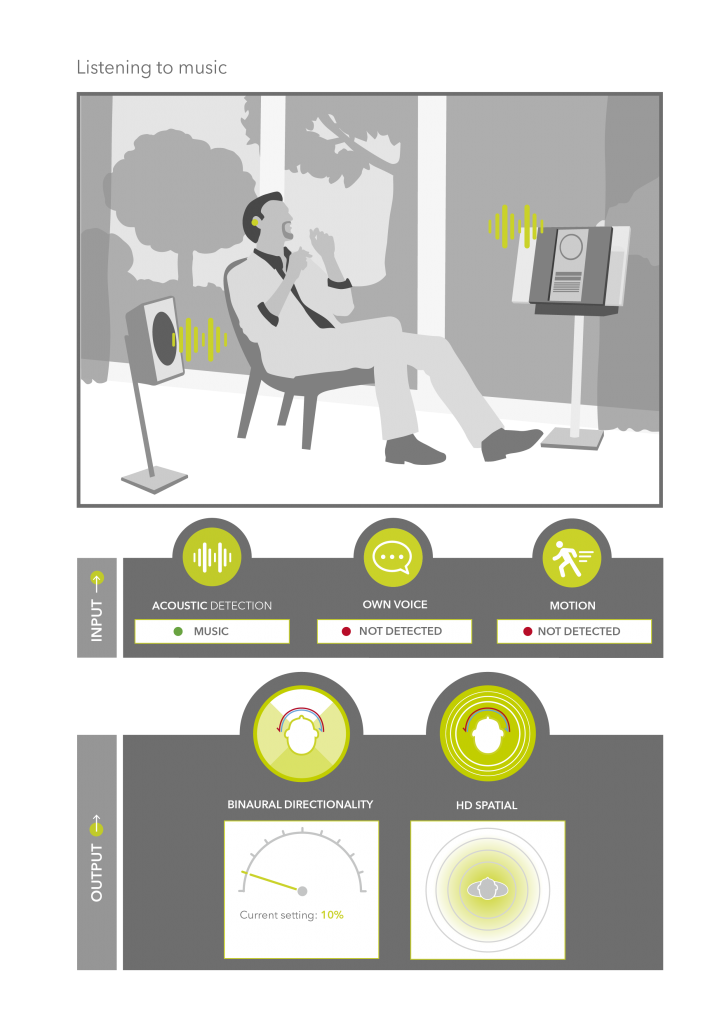

Example 4: Listening to music

The wearer has arrived home after work and has decided to listen to music. Even though hearing aids are designed primarily to enhance speech understanding, 3D Classifier recognizes music automatically and adapts the hearing aid’s response for enhanced music enjoyment by applying a complex blend of adaptations to binaural directionality, noise reduction, feedback cancellation, and gain and compression settings.

Example 5: At the airport

The wearer and his wife have arrived at the airport and are looking for their check-in counter. Suddenly, an important announcement about their flight is made over the speaker system. 3D Classifier has detected a speech-in-noise environment (because of the speech activity of nearby people), the wearer’s own voice (because at this moment he is talking with his wife), and motion (because the wearer is walking), so the hearing aid is configured for a high level of situational awareness in all directions. Based on the wearer’s acoustic environment and his movement behavior, the hearing aid is suitably configured for the wearer to notice the beginning of the announcement, which would prompt him to stop talking and listen to it. Nevertheless, at the point in time shown in the illustration, the hearing aid is applying some binaural directionality. This would instantly improve the signal-to-noise ratio of his wife’s voice if the wearer stopped talking and his wife began to talk. 3D Classifier has therefore used multiple inputs to arrive at an intelligent blend of directionality and spatial awareness which matches the communication needs of the wearer at that instant.

Conclusion

3D Classifier uses multi-dimensional inputs to precisely classify the environment and predict the wearer’s communication needs. The combination of traditional acoustic-based classification, own-voice detection, and motion detection creates twenty-four unique detection states. These detection states are used to intelligently steer various adaptive parameters such as Binaural Directionality and HD Spatial, among others. Binaural Directionality applies an adaptive strength algorithm, which uses the acoustic environment and the wearer’s behavior as inputs. HD Spatial helps preserve the perceived location and distance of sound sources. It is also steered intelligently: it maximizes spatial performance in easier listening environments, and in challenging environments it uses settings which complement strong binaural directionality, optimizing conversations with the wearer’s communication partners.

1 Littmann V., Høydal E.H. Comparison study of speech recognition using binaural beamforming narrow directionality. Hearing Review. 2017;24(5). Available at: http://www.hearingreview.com/2017/05/comparison-study-speech-recognition-using-binaural-beamforming-narrow-directionality/

2 Powers T., Froehlich M., Branda E., Weber J. Clinical Study Shows Significant Benefit of Own Voice Processing. Hearing Review. 2018;23(4):30-34. Available at: http://www.hearingreview.com/2016/03/clinical-studies-show-advanced-hearing-aid-technology-reduces-listening-effort

3 Froehlich, M., & Powers, T.A. (2017, November). Sound quality as the key to user acceptance. AudiologyOnline, Article 21621. Retrieved from www.audiologyonline.com